Friday, November 18th 2016

AMD Radeon GPUs Limit HDR Color Depth to 8bpc Over HDMI 2.0

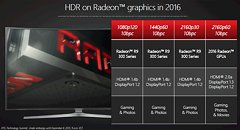

High-dynamic range, or HDR, is all the rage these days as the next big thing in display output, now that hardware has had time to catch up with ever-increasing display resolutions such as 4K Ultra HD, 5K, and the various ultra-wide formats. Hardware-accelerated HDR is getting a push from both AMD and NVIDIA in this round of GPUs. While games with HDR rendering date back to Half-Life 2, hardware-accelerated formats that minimize work for game developers, in which the hardware makes sense of an image and adjusts its output range, are new and require substantial compute power. HDR output also requires additional interface bandwidth between the GPU and the display, since GPUs sometimes rely on wider color palettes such as 10 bpc (10 bits per channel, 1.07 billion colors) to generate HDR images. AMD Radeon GPUs are facing difficulties in this area.

German tech publication Heise.de discovered that AMD Radeon GPUs render HDR games (games that take advantage of new-generation hardware HDR, such as "Shadow Warrior 2") at a reduced color depth of 8 bits per channel (16.7 million colors, i.e. 24-bit color, or "32-bit" when the alpha channel is counted) if your display (e.g., a 4K HDR-ready TV) is connected over HDMI 2.0 rather than DisplayPort 1.2 or later. The desired 10 bits per channel (1.07 billion colors) palette is available only when your HDR display is connected over DisplayPort. This could be a problem, since most HDR-ready displays these days are TVs. Heise.de observes that AMD GPUs reduce output sampling from the desired full YCbCr 4:4:4 to 4:2:2 or 4:2:0 (chroma subsampling) when the display is connected over HDMI 2.0. The publication also suspects that the limitation is present on all AMD "Polaris" GPUs, including the ones that drive game consoles such as the PS4 Pro.
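The color counts and subsampling schemes above follow from simple arithmetic. The following is a minimal sketch, assuming three channels (R/G/B or Y/Cb/Cr) and no alpha; the function names are illustrative only and do not come from any driver or library.

```python
# Minimal sketch: color counts and average bits per pixel for the bit depths
# and chroma-subsampling schemes mentioned in the article.
# Assumes three channels (R/G/B or Y/Cb/Cr), no alpha channel.

def color_count(bpc: int) -> int:
    """Distinct colors when each of the three channels has `bpc` bits."""
    return 2 ** (3 * bpc)


# Cb and Cr samples kept (each) per 2x2 block of pixels.
CHROMA_SAMPLES_PER_BLOCK = {"4:4:4": 4, "4:2:2": 2, "4:2:0": 1}


def avg_bits_per_pixel(bpc: int, scheme: str) -> float:
    """Average bits per pixel: 4 luma samples plus Cb and Cr samples per 2x2 block."""
    chroma = CHROMA_SAMPLES_PER_BLOCK[scheme]
    samples_per_block = 4 + 2 * chroma
    return samples_per_block * bpc / 4


if __name__ == "__main__":
    for bpc in (8, 10):
        print(f"{bpc} bpc: {color_count(bpc):,} colors")
        for scheme in CHROMA_SAMPLES_PER_BLOCK:
            print(f"  {scheme}: {avg_bits_per_pixel(bpc, scheme):g} bits/pixel on average")
```

For 8 bpc this gives 16,777,216 colors and 24, 16 and 12 bits per pixel for 4:4:4, 4:2:2 and 4:2:0; for 10 bpc, 1,073,741,824 colors and 30, 20 and 15 bits per pixel. These are storage averages; the way HDMI actually packs each format on the wire differs somewhat.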

Source:

Heise.de


126 Comments on AMD Radeon GPUs Limit HDR Color Depth to 8bpc Over HDMI 2.0

I've been working with 3D raytracing, and the tool had an option for 40-bit image rendering, which significantly increased rendering time but decreased or even eliminated color banding in the output image (especially once it was scaled down later to be stored as an image file, since mainstream formats like JPG, PNG or BMP don't support 40 bits).

Of course the human eye can see a 10-bit palette accurately, just as the human ear is capable of hearing cat and dog frequencies and clearly distinguishing the debilitating difference between 22.1 kHz and 24 kHz (let alone 48 kHz!). Also, all displays in use accurately show 100% of the visible spectrum, with no technological limitations whatsoever, so this is of utmost importance! All of humanity's vision and hearing is flawless, too... And we ALL need 40 MP cameras for pictures later reproduced on FHD or, occasionally, 4K displays... especially on mobile phone displays...

[irony off]

I've been trying to fight this kind of blind belief in ridiculous claims for many years, but basically it's not worth it...

On one hand, we have the dynamic range, which is the span between the darkest and brightest colour. The human eye has a certain dynamic range, but by adjusting the pupil, it can shift this range up and down.

On the other hand, we have the computer, which handles colour in a discrete world. Every discrete processing step rounds the data, so we need the computer to work at a level of detail the human eye doesn't see; otherwise we end up with wrong colours and/or banding.

In short, for various reasons, computers need more info to work with than the naked eye can see.
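The rounding argument can be made concrete with a toy pipeline. This is a minimal sketch under a hypothetical edit of our own choosing: an 8-bit gradient is darkened to 30%, the intermediate result is rounded to a given bit depth, and the image is then brightened back to an 8-bit output. The factor and function name are illustrative assumptions, not taken from any real tool.

```python
# Minimal sketch (hypothetical pipeline): darken an 8-bit gradient to 30%,
# round the intermediate to `bits` bits, brighten it back, and count how many
# of the original 256 levels survive. Fewer surviving levels = visible banding.

def round_trip_levels(bits: int, factor: float = 0.3) -> int:
    """Count distinct 8-bit output levels after a darken/brighten round trip."""
    max_mid = 2 ** bits - 1
    outputs = set()
    for v in range(256):
        normalized = v / 255
        # Darken, then quantize the intermediate result to `bits` bits.
        mid = round(normalized * factor * max_mid)
        # Brighten back and quantize to the final 8-bit output.
        out = round(mid / max_mid / factor * 255)
        outputs.add(min(max(out, 0), 255))
    return len(outputs)


if __name__ == "__main__":
    for bits in (8, 10, 16):
        print(f"{bits}-bit intermediate: {round_trip_levels(bits)} of 256 levels survive")
```

With an 8-bit intermediate, the darkened image has at most 78 distinct values, so at most 78 of the 256 output levels survive and the gradient bands. With a 10-bit intermediate the rounding error stays below half an 8-bit step, so all 256 levels come back even though the final output is still only 8-bit.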

HDMI 2.0 maximum bandwidth: 18 Gb/s raw, or 14.4 Gb/s of video data after 8b/10b encoding

I said it before and I'll say it again: HDMI sucks. They're working on the HDMI 2.1 spec, which will likely increase the bandwidth. Since HDMI runs on the DVI backbone that was created over a decade ago, the only way to achieve more bandwidth is shorter cables. HDMI is digging its own grave and has been for a long time.

Edit: Oh, I found this:

www.hdmi.org/manufacturer/hdmi_2_0/hdmi_2_0_faq.aspx

Look at the chart:

4K@60, 10-bit, 4:2:2: Pass

4K@60, 10-bit, 4:4:4: Fail
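Those Pass/Fail entries can be reproduced from the link arithmetic. This is a minimal sketch, assuming the standard CTA-861 4K60 timing (4400 x 2250 total pixels including blanking, a 594 MHz pixel clock), HDMI 2.0's 600 MHz maximum TMDS character rate (18 Gb/s raw over 3 lanes), and HDMI's deep-color packing rules as summarized in the comments; the function names are illustrative.

```python
# Minimal sketch: does 3840x2160 @ 60 Hz fit in HDMI 2.0 at a given bit depth
# and chroma format? Assumptions: CTA-861 4K60 timing with a 594 MHz pixel
# clock, a 600 MHz maximum TMDS character rate for HDMI 2.0, and HDMI's
# deep-color packing rules.

PIXEL_CLOCK_HZ = 4400 * 2250 * 60       # total pixels incl. blanking x refresh = 594 MHz
MAX_TMDS_CHAR_RATE_HZ = 600_000_000      # HDMI 2.0 limit (18 Gb/s raw over 3 lanes)
TMDS_LANES = 3
DATA_BITS_PER_CHAR = 8                   # 8b/10b: 8 data bits per 10-bit character


def tmds_char_rate(bpc: int, scheme: str) -> float:
    """TMDS character rate needed for the given bit depth and chroma format."""
    if scheme == "4:4:4":
        # Deep color scales the character rate by bpc / 8.
        return PIXEL_CLOCK_HZ * bpc / 8
    if scheme == "4:2:2":
        # HDMI carries 4:2:2 (up to 12 bpc) in the same 24-bit container
        # as 8-bit 4:4:4, so the rate does not grow with bit depth.
        return PIXEL_CLOCK_HZ
    if scheme == "4:2:0":
        # 4:2:0 halves the rate; deep color then scales it by bpc / 8.
        return PIXEL_CLOCK_HZ / 2 * bpc / 8
    raise ValueError(f"unknown chroma scheme: {scheme!r}")


def payload_gbps(bpc: int, scheme: str) -> float:
    """Video payload in Gb/s across all TMDS lanes (after 8b/10b overhead)."""
    return tmds_char_rate(bpc, scheme) * TMDS_LANES * DATA_BITS_PER_CHAR / 1e9


def fits_hdmi_2_0(bpc: int, scheme: str) -> bool:
    """True if the required character rate is within the HDMI 2.0 limit."""
    return tmds_char_rate(bpc, scheme) <= MAX_TMDS_CHAR_RATE_HZ


if __name__ == "__main__":
    for bpc, scheme in [(8, "4:4:4"), (10, "4:4:4"), (10, "4:2:2"), (10, "4:2:0")]:
        verdict = "Pass" if fits_hdmi_2_0(bpc, scheme) else "Fail"
        print(f"4K@60, {bpc}-bit, {scheme}: {payload_gbps(bpc, scheme):.2f} Gb/s -> {verdict}")
```

Under these assumptions, 8-bit 4:4:4 needs about 14.26 Gb/s and just fits, 10-bit 4:4:4 needs about 17.82 Gb/s and fails, 10-bit 4:2:2 fits at the same 14.26 Gb/s container rate, and 10-bit 4:2:0 needs only about 8.91 Gb/s. That is consistent with a GPU having to choose between 8 bpc and chroma subsampling for 4K60 HDR over HDMI 2.0.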

Also, this is a limitation of the HDMI spec. The entire reason HDMI 2.0a exists is to add the metadata stream necessary for UHD HDR to work.

@btarunr Do you want to check zlatan's point and update the article if he's right?

Also, not sure why those 4K HDR TVs aren't provided with at least DP 1.2 interfaces...