Friday, November 18th 2016

AMD Radeon GPUs Limit HDR Color Depth to 8bpc Over HDMI 2.0

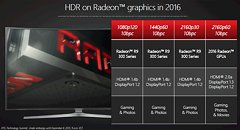

High-dynamic range, or HDR, is all the rage these days as the next big thing in display output, now that hardware has had time to catch up with ever-increasing display resolutions such as 4K Ultra HD, 5K, and the various ultra-wide formats. Hardware-accelerated HDR is getting a push from both AMD and NVIDIA in this round of GPUs. While games with HDR date back to Half-Life 2, hardware-accelerated formats that minimize work for game developers, in which the hardware makes sense of an image and adjusts its output range, are new and require substantial compute power. They also require additional interface bandwidth between the GPU and the display, since GPUs sometimes rely on wider color palettes such as 10 bpc (1.07 billion colors) to generate HDR images. AMD Radeon GPUs are facing difficulties in this area.

German tech publication Heise.de discovered that AMD Radeon GPUs render HDR games (games that take advantage of new-generation hardware HDR, such as "Shadow Warrior 2") at a reduced color depth of 8 bits per channel (16.7 million colors), or 32-bit, if your display (e.g. a 4K HDR-ready TV) is connected over HDMI 2.0 rather than DisplayPort 1.2 (or above). The desired 10 bits per channel (1.07 billion colors) palette is available only when your HDR display runs over DisplayPort. This could be a problem, since most HDR-ready displays these days are TVs. Heise.de observes that AMD GPUs reduce output sampling from the desired full YCbCr 4:4:4 to 4:2:2 or 4:2:0 (chroma sub-sampling) when the display is connected over HDMI 2.0. The publication also suspects that the limitation is present on all AMD "Polaris" GPUs, including the ones that drive game consoles such as the PS4 Pro.

Source:

Heise.de

126 Comments on AMD Radeon GPUs Limit HDR Color Depth to 8bpc Over HDMI 2.0

TVs aren't really meant for 60fps, because they're not primarily aimed at gamers. At 24 or 30 they can get away with older interfaces.

In the UK it's the PAL spec and 50Hz interlaced.

Most TVs these days run at full 60Hz, at full 4K at full gamut as well. This was only an issue for early adopters.

Looking at TSAA vs other AA effects, TSAA looks like it removes some lighting passes, and some detail while performing AA.

en.wikipedia.org/wiki/HDMI#Version_2.0

What I gleaned from this article is that AMD is unable to push HDR10 at any chroma resolution over HDMI, even 4:2:0. This is bad news for anyone that wants an AMD card in an HTPC, as it won't be able to output HDR movies or games to your fancy 4KTV. Let's hope they can somehow fix this in the drivers. Nvidia has driver support for HDR, but sadly there are almost no games that support it now and Hollywood is restricting access to 4k and HDR video content on PC. Nvidia apparently is working with Netflix and devs, but no news for a while. Also, Windows 10 does not support HDR in shared mode with the desktop, so until they fix that, HDR will only work with exclusive fullscreen. Lots of work to be done on the PC side, luckily at least Nvidia cards will be ready when the content is.

wccftech.com/nvidia-pascal-goes-full-in-with-hdr-support-for-games-and-4k-streaming-for-movies/

What I find curious is that the PS4 Pro and Xbox One S have no issue with HDR at 4:2:0 over HDMI 2.0. Sony made a big deal of having more up-to-date architecture than the Polaris used in the RX 480, and Microsoft used a newer version of the architecture for their die shrink (as evidenced by the 16nm size and H265 support). I really hope for AMD owners' sake that the Polaris in the 480 isn't missing this feature, as HDR is awesome and makes a bigger difference than 4k for gaming, in my humble opinion.

www.vesa.org/wp-content/uploads/2010/12/DisplayPort-DevCon-Presentation-DP-1.2-Dec-2010-rev-2b.pdf

"video data bandwidth to 2160Mbytes/sec" which is 17.28 Gb/s as stated above.

HDMI 2.0 can only do 2160p60 10-bit if using 4:2:2 chroma sampling, as AMD's slide says.
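The link figures quoted above can be checked with a little arithmetic. This is only a sketch under stated assumptions: it takes the commonly published link parameters (DisplayPort 1.2 HBR2 at 5.4 Gb/s on each of 4 lanes, HDMI 2.0 at 6 Gb/s on each of 3 TMDS data channels) and assumes both links carry 8 data bits per 10 transmitted bits. The function names are made up for illustration.

```python
def payload_gbps(lanes, raw_gbps_per_lane, data_bits=8, coded_bits=10):
    """Usable video data rate of a link after line-coding overhead.

    Assumption: every lane carries `data_bits` of payload for each
    `coded_bits` put on the wire (8 of 10 for both links modeled here).
    """
    return lanes * raw_gbps_per_lane * data_bits / coded_bits


def gbps_to_mbytes_per_sec(gbps):
    """Convert gigabits per second to megabytes per second (decimal units)."""
    return gbps * 1000 / 8


# Assumed link parameters, not taken from the thread itself.
dp12 = payload_gbps(lanes=4, raw_gbps_per_lane=5.4)    # DisplayPort 1.2 (HBR2)
hdmi20 = payload_gbps(lanes=3, raw_gbps_per_lane=6.0)  # HDMI 2.0

print(f"DP 1.2  : {dp12:.2f} Gb/s = {gbps_to_mbytes_per_sec(dp12):.0f} MB/s")
print(f"HDMI 2.0: {hdmi20:.2f} Gb/s = {gbps_to_mbytes_per_sec(hdmi20):.0f} MB/s")
```

Under these assumptions DisplayPort 1.2 comes out at the 2160 MB/s (17.28 Gb/s) quoted from the VESA presentation, with HDMI 2.0 a few Gb/s lower, which is where the pressure to sub-sample at 2160p60 comes from.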

Also, don't double post.

Also, I may have gotten the 8bit/10bit encoding stuff wrong, but FordGT90Concept, you haven't really answered why you think that all these other 4K devices, like UHD bluray players, Xbox One S, PS4 Pro, Nvidia Shield, Chromecast Ultra, can send HDR at 4K over HDMI 2.0 but AMD can't because of the spec. That's just stupid.

Edit: I have a 4K HDR TV, I realize that very well, thanks. Probably better than you, in fact. Some random journalist is comparing the image quality subjectively and that's somehow proof that Nvidia isn't sending HDR metadata? Please. The big issue is that AMD is not supporting full chroma resolution at 4K even at 8-bit, which means they may not have the bandwidth to run HDR, as 4:2:2 HDR uses similar bandwidth to 4:4:4 8-bit.

This was a problem before, when it was discovered HDMI 2.0 couldn't do 4k at some certain fps (I don't recall the exact number) together with HDCP 2.2, meanwhile DP 1.2 was more than happy to oblige.

EDIT:

This is what Vizio says their HDMI 2.0 ports support. Parens are my analysis of the article.

600MHz pixel clock rate:

2160p@60fps, 4:4:4, 8-bit (AMD doesn't support)

2160p@60fps, 4:2:2, 12-bit (PS4 Pro w/ AMD GPU supports, Polaris may not support)

2160p@60fps, 4:2:0, 12-bit (PS4 Pro w/ AMD GPU supports, Polaris may not support)

I'm not quite sure how chroma subsampling translates to number of bits in the stream so I can't do the math on 4:2:2 or 4:2:0

Edit: It's complicated... en.wikipedia.org/wiki/Chroma_subsampling ...not going to invest my time in figuring that out.
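The math is manageable with a few simplifying assumptions. The sketch below assumes the standard 4K60 timing of 4400 x 2250 total pixels including blanking (a 594 MHz pixel clock) and the usual HDMI conventions: 4:4:4 scales the TMDS clock by bit depth / 8, 4:2:2 always travels in a fixed 12-bit container at the same clock as 8-bit 4:4:4, and 4:2:0 halves the clock. The function and constant names are illustrative, and the 600 MHz ceiling is the figure from the Vizio list above.

```python
HDMI20_MAX_TMDS_MHZ = 600.0  # ceiling quoted for HDMI 2.0 ports


def pixel_clock_mhz(h_total=4400, v_total=2250, refresh_hz=60):
    """Pixel clock in MHz for a timing given as total pixels incl. blanking.

    Defaults are the assumed 2160p60 timing (3840x2160 active).
    """
    return h_total * v_total * refresh_hz / 1e6


def tmds_clock_mhz(bpc, chroma, pixel_clock=None):
    """TMDS clock (MHz) needed for a bit depth and chroma format."""
    if pixel_clock is None:
        pixel_clock = pixel_clock_mhz()
    if chroma == "4:4:4":
        # Deep color raises the clock in proportion to bits per channel.
        return pixel_clock * bpc / 8
    if chroma == "4:2:2":
        # Fixed 12-bit container: 8, 10 and 12 bpc all cost the same.
        if bpc > 12:
            raise ValueError("4:2:2 is modeled up to 12 bpc only")
        return pixel_clock
    if chroma == "4:2:0":
        # Half the chroma samples per line, so half the clock.
        return pixel_clock / 2 * bpc / 8
    raise ValueError(f"unknown chroma format: {chroma}")


def fits_hdmi20(bpc, chroma):
    """True if the mode stays at or below the assumed 600 MHz ceiling."""
    return tmds_clock_mhz(bpc, chroma) <= HDMI20_MAX_TMDS_MHZ


for chroma in ("4:4:4", "4:2:2", "4:2:0"):
    for bpc in (8, 10, 12):
        clock = tmds_clock_mhz(bpc, chroma)
        verdict = "fits" if fits_hdmi20(bpc, chroma) else "too fast"
        print(f"2160p60 {chroma} {bpc:>2}-bit: {clock:7.2f} MHz ({verdict})")
```

With these assumptions, 8-bit 4:4:4 and every 4:2:2 or 4:2:0 mode up to 12-bit stay under 600 MHz, while 10-bit and 12-bit 4:4:4 do not, which matches the three modes in the Vizio list.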

"In response to an inquiry from c't, a manager at GPU manufacturer AMD explained that the current Radeon RX 400 graphics cards (Polaris GPUs) produce HDR images in PC games with a color depth of only 8 bits instead of 10 bits per color channel when outputting via HDMI 2.0 to an HDR-compatible 4K TV. AMD uses a special dithering method with respect to the gamma curve (Perceptual Curve) in order to display gradients as smoothly as possible. HDR TVs still recognize an HDR signal and switch to the appropriate mode."

What is missing in there is an acknowledgement that Windows 10 doesn't support HDR at the moment, and it's doubtful that Shadow Warrior is actually outputting HDR metadata. They don't give any proof that the TVs are actually entering HDR mode. I apologize to others for trying to take this article at face value. At present, there is no confirmed support for HDR content of any sort on PC, irrespective of the GPU you have.

I expected better from Techpowerup.

It's widely reported that Shadow Warrior 2 is the first PC title to support HDR (because of backing from NVIDIA).

To make HDR work, you need a GPU that supports 10 or more bits/color (I believe GTX 2## or newer and HD 7### series or newer qualifies), an operating system that supports it (Windows XP and newer should), a monitor that supports it (there's some...often spendy), and software that uses it (Shadow Warrior 2 is apparently the only game that meets that criteria now).

The article doesn't take into consideration that HDR is end to end.

Like your TV: the M-series didn't have HDR until a firmware update in August, and even then HDR is still limited to the original standard TV spec of around 1k nits, which is basic SDR. It's no different from any other monitor/TV, just with a 20% dynamic backlight range (80%-100%), which would be neat if it went past standard nits. The TV could have HDMI 2.0b+, but the result would still be limited by what the actual "image/color processor" (T-Com) inside the TV can handle, not by what the GPU, cable, or input can transmit.

www.techpowerup.com/forums/threads/do-10-bit-monitors-require-a-quadro-firepro-card.198031/#post-3068138

Since you obviously have the 1080 card, a 4K HDR monitor, and the time to spare, why not test it yourself and make a relevant post about something instead of thread crapping?

mspoweruser.com/microsoft-bringing-native-hdr-display-support-windows-year/

Shadow Warrior will work with HDR on PC, but only in exclusive mode. Again, there's no proof from the original article that AMD is not pushing 10 bits in HDR mode. I think what is so frustrating is that the headline makes it sound like a fact, but there's no actual proof of whether it's a deficiency on AMD's part or the game is "faking" HDR in some way (not using 10-bit pixels in the entire rendering pipeline).

To Steevo, thanks for confirming you have no experience with HDR 10 or any 4K HDR format. Not that your shitbox could even push 4K anything.

To Xzibit, I completely missed the tiny source link, whoops. It looks like the source article is quoting yet another article, which makes this third hand news. Still a whole bunch of non-news. As for my TV, I have a P series now, and though the 1000 nit mastering is a big part of HDR, it's only part. The big deal is 10 bit color for better reds and greens and little to no dithering. I play UHD blurays and Forza Horizon 3 on my TV and the difference is shocking. I never realized how much tone mapping they did in 8 bit content until I saw dark shadow detail and the sun in the same shot.