Tuesday, January 31st 2017

Backblaze's 2016 HDD Failure Stats Revealed: HGST the Most Reliable

Backblaze has just released its HDD failure rate statistics, updated with Q4 2016 and a full-year analysis. The 2016 results join the data the company has been collecting and collating since April 2013, shedding some light on the most and least reliable manufacturers. A total of 1,225 drives failed in 2016, which puts the drive failure rate for the year at just 1.95 percent, an improvement over the 2.47 percent that died in 2015 and miles below the 6.39 percent that hit the garbage bin in 2014.
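To put the 1.95 percent figure in context, here is a minimal Python sketch of how such a rate can be computed. It assumes the rate is annualized over drive days (failures divided by drive-years of service), which this article does not spell out. The function names are hypothetical, and the drive-years figure is back-calculated from the two numbers quoted above rather than taken from Backblaze's report.

```python
def annualized_failure_rate(failures: int, drive_days: float) -> float:
    """Return the annualized failure rate as a percentage.

    Assumption: the rate is failures per drive-year of service,
    where one drive-year is 365 drive days.
    """
    drive_years = drive_days / 365.0
    return 100.0 * failures / drive_years


def implied_drive_years(failures: int, afr_percent: float) -> float:
    """Back out the drive-years of service implied by a failure count and a rate."""
    return failures / (afr_percent / 100.0)


# Figures quoted in the article: 1,225 failures at a 1.95 percent rate.
drive_years_2016 = implied_drive_years(1225, 1.95)
print(f"Implied service time: {drive_years_2016:,.0f} drive-years")

# Round trip: feeding the implied drive days back in recovers the quoted rate.
rate = annualized_failure_rate(1225, drive_years_2016 * 365.0)
print(f"Annualized failure rate: {rate:.2f}%")
```

Under that assumption, the quoted numbers imply roughly 62,800 drive-years of service in 2016. That is less than the 71,939 drives in the fleet, which would be consistent with some drives having been in service for only part of the year.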

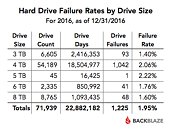

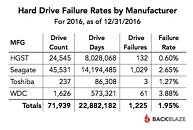

Organizing 2016's failure rates by drive size, independent of manufacturer, we see that 3 TB hard drives are the most reliable (a 1.40% failure rate) and 5 TB hard drives the least reliable (a 2.22% failure rate). Organized by manufacturer, HGST, which accounts for 34% (24,545) of the total drives (71,939), claims the reliability crown with a measly 0.60% failure rate. WDC brings up the rear with an average 3.88% failure rate, while also being one of the least represented manufacturers, with only 1,626 of its HDDs in use.
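The fleet shares above are simple to check. The sketch below uses only the drive counts quoted in this article. The names `TOTAL_DRIVES`, `drive_counts` and `fleet_share` are made up for illustration.

```python
# Drive counts quoted in the article.
TOTAL_DRIVES = 71_939
drive_counts = {"HGST": 24_545, "WDC": 1_626}


def fleet_share(count: int, total: int = TOTAL_DRIVES) -> float:
    """Return a manufacturer's share of the fleet as a percentage."""
    return 100.0 * count / total


for maker, count in drive_counts.items():
    print(f"{maker}: {fleet_share(count):.1f}% of the fleet")
```

HGST comes out at about 34.1% of the fleet and WDC at about 2.3%, so the WDC failure rate rests on a far smaller sample than the HGST one.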

Source:

Backblaze

42 Comments on Backblaze's 2016 HDD Failure Stats Revealed: HGST the Most Reliable

I do believe that the failure rate for Seagate is much higher, since I used to fix HDDs (data recovery) for over 2 years. The majority of the discs I had were Seagate, which either had corrupted firmware, a booted PCB or toasted heads.

Personally I had many Seagates in the past, until I had a severe crash which caused data loss. Never Seagate again. I have 2 Samsung disks which are over 6 years old now, STILL running properly and maintaining my data 24/7.

There are better brands than Seagate, and Google clarifies this as well. Seagate has a much higher failure rate than any other brand.

Like I said before, it's not really the brand that matters, it's the specific model. Some are exceptionally reliable (<1% failure rate), some break a lot (>5% failure rate), and most are just normal (1-5%).

As for the thread - I did notice that HGST drives are very reliable (and pretty speedy). I've had both Seagate and WD drives fail on me (less than 2 years old), but never had an HGST drive die so far. Samsung drives used to be very good as well - I still have a working 4GB drive that came in my K6 PC back in the day, and it still runs fine.

www.backblaze.com/blog/cloud-storage-hardware/

www.backblaze.com/blog/open-source-data-storage-server/

www.backblaze.com/blog/hard-drive-temperature-does-it-matter/

Incidentally, according to Google's data sheets on their own datacenters, drives actually like to run warmer than they are in BB's pods...

research.google.com/archive/disk_failures.pdf

storagemojo.com/2007/02/19/googles-disk-failure-experience/

www.45drives.com/ - Check out that solution and their client list...

www.google.com/search?q=storage+blade&client=safari&hl=en&prmd=sivn&source=lnms&tbm=isch&sa=X&ved=0ahUKEwin6NKlxvfRAhVJ3IMKHQk-D3AQ_AUICCgC&biw=667&bih=331

Given that their annual failure rates aren't too dissimilar to Google's, I never understood where the doubt comes from...

Don't get me wrong - I also think drives deserve more protection, but you might hate me if I recommended this ;): www.silverstonetek.com/product.php?pid=665&area=en

P.S. Preventing vibration is worse for drives than allowing it... Rubber bands and the like, that allow the disk to vibrate, are probably better than the sleds most racks and blades use. Then again, I haven't found any data, so who knows? :eek:

www.techpowerup.com/forums/search/16381259/?page=4&q=BackBlaze&o=date

The Google info is more credible, and actually is useful, especially regarding when drives normally die, and the fact that they do not like it too cool.

The Google "info" seems to indicate that unknown (enterprise?) drives in Google's unknown chassis (? maybe they suspend their disks across a room?) in a top secret datacenter fail about as often as consumer drives in BackBlaze's open source (and, in some people's opinion, vibration prone) storage pod datacenter... Telling me to search for a source that I literally just pointed to isn't going to change the correlation, and I wholly doubt it will debunk any of the claims I've made thus far, let alone indicate that organizations like NASA, Lockheed, Netflix, MIT, livestream, and Intel don't use racks that look oddly similar to BackBlaze's (hint: they were designed by the same company)...

You said "source on BB," I provided BB sources, and now those sources don't matter? Sorry, but this logic doesn't make any sense.

I've only made claims based on widely available public data. I've been a datacenter tech for over a decade, have done several petabyte installs, managed hundreds of RAIDs, and have even built a few storage pods for fun.

Conveniently, I don't need any of that life experience to be able to recognize cogency. It doesn't take a genius to look at annual failure rates from both data sets and be mesmerized by how BB manages failure rates as low as Google's with their supposedly bad chassis, or to see some authority in actual data centers (Intel and MIT are apparently incompetent and unknowledgeable?) using older versions of BB's storage pods for their servers... You haven't provided a single warrant for your claims other than some article that BB published, which you can't refer to, that supposedly (I guess we'll never know) contradicts the BB articles that I linked to in order to support my claims that were, you know, published by BB. All this says to me is that your claim has no warrant at all (or the dog ate it).

You can hide behind patronizing arrogance as much as you'd like, but stupid is as stupid does. I take these data sets with several grains of salt, but most of the arguments against the data are either based on erroneous information or bad assumptions about how disks work (and how much work it is to build a datacenter).