Thursday, February 2nd 2017

MSI M.2 Shield is Snake Oil Say Tests, Company Refutes Charges

With its 200-series chipset motherboards, MSI introduced its exclusive M.2 Shield accessory on some of its more premium products. According to its makers, this aluminium heatspreader with a thermal pad is designed to lower temperatures on M.2 SSDs and reduce thermal throttling. Tests by Gamers Nexus claim that, far from reducing throttling, the M.2 Shield creates a "heat trap" that throttles performance further.

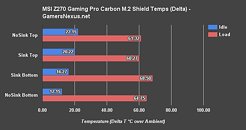

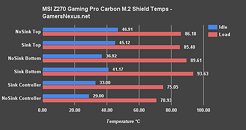

Gamers Nexus tested the M.2 Shield accessory using a Kingston HyperX Predator PCIe M.2 drive on an MSI Z270 Gaming Pro Carbon motherboard. Driven by a Marvell 88SS9293 controller, the drive is known to heat up and lose performance to overheating. The data presented by the publication is Delta T (measured temperature minus ambient temperature). MSI, for its part, stands by the efficacy of the M.2 Shield accessory and is sharing testing methods and data of its own with media sites. Tests by other publications, such as Bit-Tech and KitGuru, show positive results for the M.2 Shield.
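As a minimal sketch of how a Delta T figure is derived, the snippet below subtracts the ambient reading from a measured temperature. The function name `delta_t` and every reading are hypothetical placeholders for illustration, not data from Gamers Nexus or MSI.

```python
def delta_t(measured_c, ambient_c):
    """Return the temperature rise over ambient, in degrees Celsius."""
    return measured_c - ambient_c

# Hypothetical readings (assumed values, not published test data).
bare = delta_t(measured_c=70.0, ambient_c=22.0)
shielded = delta_t(measured_c=75.0, ambient_c=24.0)

print(f"bare drive:    {bare:.1f} C over ambient")
print(f"with shield:   {shielded:.1f} C over ambient")
```

Reporting the rise over ambient lets runs taken at slightly different room temperatures be compared directly.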

Sources:

GamersNexus, ComputerBase.de

38 Comments on MSI M.2 Shield is Snake Oil Say Tests, Company Refutes Charges

It looks cool though...

Which brings us to our second issue: these VRM packages are like any other silicon chip. The core is much hotter than the plastic packaging, since the heat is generated in the silicon underneath. The manufacturer of the VRM chips usually assumes a given amount of cooling and hence an expected thermal gradient range, which is why the VRM as a whole package is specified for Tcase temperatures when, really, the MOSFETs are the main sources of heat. It is up to the GPU maker to implement such a thermal gradient. Given this thermal gradient, it is not clear what the core temperature of these VRM chips is. This is further complicated by the power MOSFETs in the VRMs typically being specified for both a steady-state thermal impedance and a transient thermal impedance (in general, the whole package features this distinction as well, due to the thermal capacitance of any finite-sized object). A small burst workload (<1 second) that comes close enough to the maximal internal channel temperatures at already high duty may trigger a transient over-temperature condition (in the silicon, and not measurable as a steady-state temperature increase outside the package) and cause eventual failure through accumulated damage, if not immediate failure.

For the longevity and reliability of these VRM chips, I would think a good design would keep the MOSFETs several tens of degrees below the 125°C maximum, to ensure actual MOSFET channel temperatures always stay below the specified maximal channel temperatures. To do so, you would need to keep the thermal gradient across the VRM chips as low as possible, which means effective thermal coupling to a heatsink (which lowers the thermal impedance from the VRMs to the surrounding air).
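To put rough numbers on that reasoning, here is a minimal sketch assuming the common steady-state approximation T_junction = T_case + P × R_θJC. The function name and all values except the 125°C figure quoted above are made up for illustration and come from no datasheet; transient thermal impedance is not modelled.

```python
def junction_temp_c(case_c, power_w, r_theta_jc):
    """Steady-state estimate: T_junction = T_case + P * R_theta_jc."""
    return case_c + power_w * r_theta_jc

# Hypothetical numbers, not taken from any datasheet.
LIMIT_C = 125.0      # the maximum cited in the comment above
case_c = 100.0       # assumed measured case temperature, degC
power_w = 2.0        # assumed dissipation per MOSFET, W
r_theta_jc = 5.0     # assumed junction-to-case resistance, degC/W

tj = junction_temp_c(case_c, power_w, r_theta_jc)
headroom = LIMIT_C - tj
print(f"estimated junction: {tj:.1f} C, headroom: {headroom:.1f} C")
```

Under these assumed values, a case reading that looks safe leaves only a modest margin at the junction, which is the point of keeping the case temperature well below the limit.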

It means it's a fugazzi.

Anyway, we do not have any means to figure this out completely. I do think there are issues with the tests conducted with thermal probes.

1-Most SSDs have no chips on the back, or only a few.

2-The back of the SSD is the same with or without the shield. The shield covers only the front surface, so how is it blocking the back at all?