Sunday, April 30th 2017

NVIDIA to Support 4K Netflix on GTX 10 Series Cards

Up to now, only users with Intel's latest Kaby Lake architecture processors could enjoy 4K Netflix due to some strict DRM requirements. Now, NVIDIA is taking it upon itself to allow users with one of its GTX 10 series graphics cards (absent the 1050, at least for now) to enjoy some 4K Netflix and chillin'. Though you do have to jump through some hoops to get there, none of them should pose a problem.

The requirements to enable Netflix UHD playback, as per NVIDIA, are so:

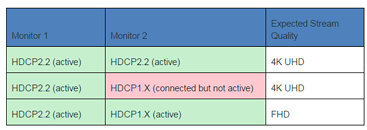

In case of a multi monitor configuration on a single GPU or multiple GPUs where GPUs are not linked together in SLI/LDA mode, 4K UHD streaming will happen only if all the active monitors are HDCP2.2 capable. If any of the active monitors is not HDCP2.2 capable, the quality will be downgraded to FHD.What do you think? Is this enough to tide you over to the green camp? Do you use Netflix on your PC?

Sources:

NVIDIA Customer Help Portal, Eteknix

The requirements to enable Netflix UHD playback, as per NVIDIA, are as follows:

- NVIDIA Driver version exclusively provided via Microsoft Windows Insider Program (currently 381.74).

  - No other GeForce driver will support this functionality at this time.

  - If you are not currently registered for WIP, follow this link for instructions to join: insider.windows.com/

- NVIDIA Pascal based GPU, GeForce GTX 1050 or greater with minimum 3GB memory

- HDCP 2.2 capable monitor(s). Please see the additional section below if you are using multiple monitors and/or multiple GPUs.

- Microsoft Edge browser or Netflix app from the Windows Store

- Approximately 25Mbps (or faster) internet connection.
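As a rough illustration, the checklist above can be read as a single pass/fail check. The Python sketch below is only that, a sketch: every name in it (`Setup`, `max_netflix_quality`, the constants) is made up for this example and is not part of any NVIDIA or Netflix API. It treats the "approximately 25Mbps" figure as a hard cutoff, requires every active monitor to be HDCP 2.2 capable (which is how NVIDIA describes setups without SLI), and assumes that any failed check means a fallback to FHD, even though NVIDIA only states that outcome for the monitor case.

```python
from dataclasses import dataclass
from typing import List

# Illustrative thresholds taken from the requirements list; names are hypothetical.
MIN_VRAM_GB = 3            # "minimum 3GB memory"
MIN_BANDWIDTH_MBPS = 25    # "approximately 25Mbps (or faster)", treated as a hard cutoff
SUPPORTED_CLIENTS = {"edge", "netflix_app"}


@dataclass
class Setup:
    insider_driver: bool         # driver obtained via the Windows Insider Program
    pascal_gpu: bool             # NVIDIA Pascal based GPU
    vram_gb: float               # graphics memory in GB
    monitors_hdcp22: List[bool]  # one flag per active monitor
    client: str                  # "edge" or "netflix_app"
    bandwidth_mbps: float


def max_netflix_quality(s: Setup) -> str:
    """Return "UHD" if every listed requirement holds, otherwise "FHD".

    Falling back to FHD for any failed check is an assumption of this sketch.
    """
    checks = [
        s.insider_driver,
        s.pascal_gpu,
        s.vram_gb >= MIN_VRAM_GB,
        # All active monitors must be HDCP 2.2 capable (non-SLI case).
        len(s.monitors_hdcp22) > 0 and all(s.monitors_hdcp22),
        s.client in SUPPORTED_CLIENTS,
        s.bandwidth_mbps >= MIN_BANDWIDTH_MBPS,
    ]
    return "UHD" if all(checks) else "FHD"
```

For example, a setup with two monitors where only one is HDCP 2.2 capable would come back as "FHD", as would a card with 2GB of memory.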

In case of a multi-monitor configuration on a single GPU, or on multiple GPUs that are not linked together in SLI/LDA mode, 4K UHD streaming will happen only if all the active monitors are HDCP 2.2 capable. If any of the active monitors is not HDCP 2.2 capable, the quality will be downgraded to FHD.

What do you think? Is this enough to win you over to the green camp? Do you use Netflix on your PC?

59 Comments on NVIDIA to Support 4K Netflix on GTX 10 Series Cards

At the same time, I'm really curious about what's going on here, because Intel has claimed we need Kaby Lake because of some hardware feature absent from other platforms, yet Nvidia seems to be implementing this in software. Or maybe there's some hardware in Pascal GPUs that is now being taken advantage of?

GTX660 couldn't run 4K Netflix.

Edit: 8600GT can't output 4K, derp. My point still stands :)

It doesn't take much to do 4k as long as you have HDMI 2.0 with HDCP 2.2...problem is getting Widevine certified... Pretty sure Nvidia would have to submit for permission every single time it updated its drivers...

Polaris is also capable, but is not included in PlayReady 3.0 (the DRM) yet.

I really hate HDCP.

Is it provided by the GPU? Do I have the latest from the GPU, or does the monitor determine what version it runs at?

Maybe Netflix's DRM team has been infiltrated by Piratebay?

Too many DRM services.... By law they should only be allowed one single standard...

I don't care how many platforms there are... 1 standard.

That 3GB memory minimum of what exactly: Vram or main memory?

My Dell Dimension XPS Gen 1 laptop supported 1920x1080 before it was popular. It ran movies just fine at that resolution; it was only games that had to be toned back. That was with a Gallatin core P4 and an ATi R9800 256MB.

Not on PC but on the Widevine DRM they have security levels... Most devices only get 720p HD and very very few devices get full access.... Actually only high end Sony, Samsung and Vizio TVs get full access.. Other than that you have the Nvidia shield TV, Chromecast Ultra and the MiBox..

And under FairPlay I think only the newest Apple TV and the iPhone 6 get full UHD.

Iron Fist, Marco Polo, Sense8, Travelers etc... The Netflix shows are literally better in UHD....

I hate the DRM but it's there so they can afford to make more shows in UHD thus worth it.

Edit:

Forgot my point..

I'm glad Nvidia is doing this..

The reason I went to Android TV over my HTPC was because I didn't have the option for 4K....

I'm not going to upgrade my PC for this alone since Android is obviously the better cheaper way to go these days but it's nice to have the option.

Almost everything 4k on Netflix is owned by Netflix.

Just 4k...eh, kind of see what you mean, but I can tell you that 4k HDR is very much worth it...

Iron Fist...the ground pound scene is completely different in HDR... The fireworks scene in Sense8...All those fantastic night fight scenes on Marco Polo.. All way better in HDR... Completely worth the extra cost.