Thursday, July 6th 2017

AMD RX Vega Reportedly Beats GTX 1080; 5% Performance Improvement per Month

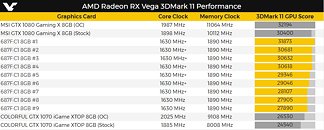

New benchmarks of an RX Vega engineering-sample video card have surfaced. There have already been quite a few benchmarks of this card, which appears under the 687F:C1 identifier. The new benchmark, which beats a GTX 1080 (a Gaming X version, so a factory-overclocked one), comes courtesy of 3DMark 11: the 687F:C1 RX Vega scored 31,873 points in its latest appearance, versus 27,890 in its first. Since the 687F:C1's clock speed has remained the same throughout this benchmark history, I think it's fair to say these gains have come purely from driver- and/or firmware-level improvements.

The folks at Videocardz have put together an interesting chart detailing the 687F:C1 RX Vega's score history since benchmarks of it first started appearing, around three months ago. The chart shows an impressive improvement over time, with AMD's high-performance GPU contender gaining roughly 15% since it was first benchmarked. That averages out to around 5% per month, which bodes well for the graphics card... at least in the long term. We have to keep in mind that this card brings some fairly extensive differences from the GPU architectures currently on the market, with the implementation of HBC (High Bandwidth Cache) and HBCC (High Bandwidth Cache Controller). These architectural differences naturally require a large amount of additional driver work before they can function to their full potential, and there is no guarantee that RX Vega GPUs will deliver that full potential come launch time.
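For readers who want to check the arithmetic, here is a minimal sketch using only the two 3DMark 11 scores quoted above (27,890 first, 31,873 latest) and the article's "around three months" timeframe, which is treated here as exactly 3 months (an assumption). The function names are hypothetical helpers written for this illustration. The two quoted scores give about a 14.3% total gain, consistent with "roughly 15%"; spread over three months, that works out to roughly 4.8% per month as a simple average, or about 4.5% per month if the gains are assumed to compound.

```python
# Sketch: checking the "~15% total, ~5% per month" claim from the two quoted scores.
# Assumption: the benchmark history spans exactly 3 months ("around three months").

FIRST_SCORE = 27890   # 3DMark 11 score, first appearance of 687F:C1
LATEST_SCORE = 31873  # 3DMark 11 score, latest appearance of 687F:C1
MONTHS = 3            # assumed span between the two results


def percent_change(old: float, new: float) -> float:
    """Total improvement from old to new, in percent."""
    return (new - old) / old * 100.0


def monthly_rate_linear(total_pct: float, months: float) -> float:
    """Simple average: total gain divided evenly across the months."""
    return total_pct / months


def monthly_rate_compound(old: float, new: float, months: float) -> float:
    """Constant monthly rate that, compounded, turns old into new."""
    return ((new / old) ** (1.0 / months) - 1.0) * 100.0


if __name__ == "__main__":
    total = percent_change(FIRST_SCORE, LATEST_SCORE)
    print(f"total gain:       {total:.2f}%")
    print(f"linear per month: {monthly_rate_linear(total, MONTHS):.2f}%")
    print(f"compound / month: {monthly_rate_compound(FIRST_SCORE, LATEST_SCORE, MONTHS):.2f}%")
```

The simple average matches the way the article phrases it ("averages out"); the compounded figure is slightly lower because each month's gain is measured against an already-improved score.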

Sources:

Videocardz, 3D Mark's latest 687F:C1, 3D Mark's first 687F:C1

141 Comments on AMD RX Vega Reportedly Beats GTX 1080; 5% Performance Improvement per Month

Not saying you don't get it, just stating what one believes is rational and understands the tech. That doesn't mean they are right either, since it's all in their head.

Forums, lol!

No, it won't. All the tricks in the world can't make up for a half-baked arch. So VEGA will only perform properly once it is 3 years old and once it no longer matters from a sales perspective?

Would be nice to have a Ti for less than $700 lol

It may, but that'd be a radical departure from the GCN uarch all the way up to Polaris. GCN is a compute-heavy platform, and even if we take the better GF process node into account, it's hard to see Vega clocking that high unless it's radically different from anything before it. I wasn't adding anything to your PoV, I was just expanding on what potential Vega holds and how it could clock that high.

Maybe I am too, not having had an Nvidia card in my PC since 2002...

NVIDIA was throwing money into R&D and it paid off in the form of efficiency. Framebuffer compression, a TBR rasterizer: it probably cost them a lot, but it paid off. AMD took a different approach, saving costs by tweaking what they already had and working with a years-old design that, despite being a bit more power-hungry, still delivered performance. The Hawaii core, tweaked a bit into Grenada, made the R9 390X competitive against the GTX 980. They were essentially trading blows across games. The only reason I grabbed a GTX 980 was curiosity. I've had Radeons for years, and there was a lot of buzz around the GTX 980 being efficient and, on paper, delivering a higher DX12 support level. That was a bit gimped by the lack of functional async compute, but whatever. So I said, let's give it a try. One may argue inferiority, but in my book, if it delivers performance, I frankly don't care how, be it through the finesse of Maxwell 2 or the brute force of the R9 390X. They both worked.

1. Recent AMD cards have been "trading blows" with Nvidia only if you disregard power draw. I can certainly understand if you're not concerned with it, but that doesn't mean others aren't.

2. You say AMD did what they did because their money was tied up with Ryzen. Well, they did pour money into HBM on consumer cards and it wasn't their most inspired choice.

People think AMD is just throwing tech around randomly, but if you look back over the years, these were all slow but strategic decisions: from merging CPU and GPU into APUs, to evolving that into giving GPUs the ability to fully share memory with system RAM, to high-speed, high-bandwidth interconnects like Infinity Fabric. When you look at it all together, it makes a lot of sense if you're trying to build an ecosystem. Consoles with AMD's guts kind of showcase that already. I mean, the Xbox One X features only GDDR5 memory, fully shared between CPU and GPU (there is no "normal" RAM, it's just insanely fast GDDR5).

Wow, take my money AMD!! :rolleyes:

Now, again, before all you AMD apologists start foaming at the mouth at me for being "anti-AMD" and an "NVIDIA fanboy", you should instead be annoyed with AMD for continuing to put out disappointing products, not the guy (me) pointing out their failings.

For the record, I would have loved Vega to leapfrog the performance of the 1080 Ti and make NVIDIA play catch up for a change. That's real competition and results in better products for us at lower prices.

In another thread you gave me the crown of #1 hater. So who is the actual hater here? Me or @cdawall ?

:D :D :D

That being said, if it doesn't release for another month and barely edges out a card released in spring of last year, I will not touch it. This is getting silly, and the hype train has been a full runaway lately. AMD is so far behind that Volta was delayed in favor of a refresh card out of Nvidia. They are stagnating the market. That makes me mad. Also being delayed for 7 months, that also makes me mad. Ryzen not working correctly at launch leaves me with zero faith in AMD as of late, which is sad. I guess we will see what comes down the pipeline, but as it sits I would have more luck herding two-year-olds than promising a solid AMD release. I still hate nothing other than AMD's release schedule. You know, the book of lies.

Well, that and driver crashes. After mining in the current market for a bit, I can tell you AMD's drivers make my soul hurt and have significantly colored my view of AMD lately.

Reality is regardless how this card turns out, they need a whole new GPU lineup.

By this logic, you've been praising Nvidia for several weeks as well, and Intel too. And??

BTW, yeah, the IPC increase of Intel's CPUs over the previous generation, up to Kaby Lake as the latest, is practically none. Isn't that the truth? So what's the reason to bring it up here?

www.3dmark.com/fs/13051960