Monday, August 7th 2017

Intel "Coffee Lake" Platform Detailed - 24 PCIe Lanes from the Chipset

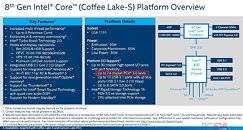

Intel seems to be addressing key platform limitations with its 8th generation Core "Coffee Lake" mainstream desktop platform. The first Core i7 and Core i5 "Coffee Lake" processors will launch later this year, alongside motherboards based on the Intel Z370 Express chipset. Leaked company slides detailing this chipset make an interesting revelation: the chipset itself puts out 24 PCI-Express gen 3.0 lanes. That's not counting the 16 lanes the processor puts out for up to two PEG (PCI-Express Graphics) slots.

The PCI-Express lane budget of the "Coffee Lake" platform is a huge step up from the 8-12 general-purpose lanes put out by previous-generation Intel chipsets, and will enable motherboard designers to cram their products with multiple M.2 and U.2 storage options, besides bandwidth-heavy onboard devices such as additional USB 3.1 and Thunderbolt controllers. The chipset itself integrates a multitude of bandwidth-hungry connectivity options. It integrates a 10-port USB 3.1 controller, of which six ports run at 10 Gbps and four at 5 Gbps. Other onboard controllers include a SATA AHCI/RAID controller with six SATA 6 Gbps ports. The platform also introduces PCIe storage options (either an M.2 slot or a U.2 port), which are wired directly to the processor. This draws inspiration from the AMD AM4 platform, in which an M.2/U.2 option is wired directly to the SoC, besides two SATA 6 Gbps ports. The chipset also integrates a WLAN interface with 802.11ac and Bluetooth 5.0, though we think only the controller logic is integrated, and not the PHY itself (which needs to be isolated for signal integrity).

Intel is also making the biggest change to onboard audio standards since the 15-year-old Azalia (HD Audio) specification. The new Intel SmartSound Technology sees the integration of a "quad-core" DSP directly into the chipset, with a reduced-function CODEC sitting elsewhere on the motherboard, probably wired using I2S instead of PCIe (as in the case of Azalia). This could still very much be a software-accelerated technology, where the CPU does the heavy lifting with DA/AD conversion.

According to leaked roadmap slides, Intel will launch its first 8th generation Core "Coffee Lake" processors, along with motherboards based on the Z370 chipset, in Q3 2017. Mainstream and value variants of this chipset will launch only in 2018.

Sources:

VideoCardz, PCEVA Forums

119 Comments on Intel "Coffee Lake" Platform Detailed - 24 PCIe Lanes from the Chipset

gfxbench.com/device.jsp?benchmark=gfx40&os=Windows&api=gl&cpu-arch=x86&hwtype=iGPU&hwname=Intel(R)%20UHD%20Graphics%20620&did=50174229&D=Intel(R)%20Core(TM)%20i7-8550U%20CPU%20with%20UHD%20Graphics%20620

gfxbench.com/device.jsp?benchmark=gfx40&os=Windows&api=gl&cpu-arch=x86&hwtype=iGPU&hwname=Intel(R)%20UHD%20Graphics%20620&did=52275942&D=Intel(R)%20Core(TM)%20i5-8250U%20CPU%20with%20UHD%20Graphics%20620

gfxbench.com/device.jsp?benchmark=gfx40&os=Windows&api=gl&cpu-arch=x86&hwtype=iGPU&hwname=Intel(R)%20HD%20Graphics%20620&did=48651203&D=Intel(R)%20Core(TM)%20i5-8250U%20CPU%20with%20HD%20Graphics%20620

gfxbench.com/device.jsp?benchmark=gfx40&os=Windows&api=gl&cpu-arch=x86&hwtype=iGPU&hwname=Intel(R)%20HD%20Graphics%20620&did=49370014&D=Intel(R)%20Core(TM)%20i7-8650U%20CPU%20with%20HD%20Graphics%20620

gfxbench.com/device.jsp?benchmark=gfx40&os=Windows&api=gl&cpu-arch=x86&hwtype=iGPU&hwname=Intel(R)%20UHD%20Graphics%20620&did=50817616&D=Intel(R)%20Core(TM)%20i7-8650U%20CPU%20with%20UHD%20Graphics%20620

I'm looking forward to seeing how Coffee Lake does. This whole debate about PCIe lanes and bandwidth is daft; it's got plenty for mainstream use. As others have said, having multiple M.2 drives, multiple GPUs and 10 Gbit Ethernet isn't common and absolutely qualifies as enthusiast. That's not the market segment this is aimed at.

- 2 GPUs: 32 lanes
- 2 M.2 PCI-E drives: 8 lanes
- 10GbE: 4 lanes

Which brings us to a grand total of 44 lanes. Now... 16 lanes from the CPU and 24 from the chipset, so that's 40 lanes. You can drop the lane requirement here by running the GPUs at x8/x8, which is plenty even for the most powerful GPUs. Now you need only 28 lanes for all that. Or you could even run x16/x8, and you would need 36 lanes, which still leaves 4 of the 40 offered by this platform. Is running one GPU in x8 mode really gonna hurt that bad? I think not.
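The lane arithmetic above can be checked with a small Python sketch. Like the comment, it treats the 16 CPU lanes and 24 chipset lanes as a single 40-lane pool; that is a simplification, since on real boards the GPUs normally sit on the CPU lanes and everything on the chipset shares its uplink to the CPU. The device names and the helpers `lanes_needed` and `report` are hypothetical, chosen only for illustration.

```python
from typing import Dict

# Lane counts quoted in the comment: 16 CPU lanes + 24 chipset lanes.
# Simplification (as in the comment): all 40 lanes are treated as one pool.
CPU_LANES = 16
CHIPSET_LANES = 24
PLATFORM_LANES = CPU_LANES + CHIPSET_LANES


def lanes_needed(config: Dict[str, int]) -> int:
    """Sum the lane widths requested by every device in a configuration."""
    return sum(config.values())


def report(name: str, config: Dict[str, int]) -> None:
    """Print how many lanes a configuration needs and whether it fits the pool."""
    needed = lanes_needed(config)
    spare = PLATFORM_LANES - needed
    verdict = "fits" if spare >= 0 else "does not fit"
    print(f"{name}: {needed} lanes needed, {spare:+d} spare -> {verdict}")


# Hypothetical device names; widths follow the comment (GPU x16 or x8, M.2 x4, 10GbE x4).
configs = {
    "x16/x16": {"gpu0": 16, "gpu1": 16, "m2_0": 4, "m2_1": 4, "nic_10gbe": 4},
    "x8/x8": {"gpu0": 8, "gpu1": 8, "m2_0": 4, "m2_1": 4, "nic_10gbe": 4},
    "x16/x8": {"gpu0": 16, "gpu1": 8, "m2_0": 4, "m2_1": 4, "nic_10gbe": 4},
}

for name, cfg in configs.items():
    report(name, cfg)
```

Only the x16/x16 layout comes out over budget (44 lanes against 40); the x8/x8 and x16/x8 layouts need 28 and 36 lanes respectively, matching the comment's figures.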

Well, AMD took care of that.

You lost me, Intel.

Depends... some boards route at least one M.2 slot through the CPU, avoiding the DMI link anyway. Just do some research. ;)

Not with CPU-connected lanes, though. A PCIe riser card comes to mind. Or a mixed RAID with a CPU-connected drive and a chipset-attached drive (which would cap lower than using all CPU-attached lanes).

For daily usage, 24 PCH lanes + 16 CPU lanes is more than enough for a 2-GPU setup with one M.2 drive (960 PRO) plus a SATA SSD (850 EVO), but for anything beyond that configuration I would suggest the HEDT platform.

Plus, you're really misinformed. Yes, the number of lanes hasn't gone up much, but the speed of each lane has. And since you can split lanes, you can actually connect a lot more PCIe 2.0 devices at once than you could a few years ago. But all in all, PCIe lanes have already become a scarce resource with the advent of NVMe. Luckily we don't need NVMe at the moment, but I expect this will change in a few years, so we'd better get more PCIe lanes by then.
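The point about per-lane speed can be put in numbers. Below is a minimal Python sketch using the commonly quoted signalling rates (2.5, 5 and 8 GT/s) and line encodings (8b/10b for PCIe 1.0 and 2.0, 128b/130b for PCIe 3.0). It ignores packet and protocol overhead, so real throughput is somewhat lower; `GENERATIONS` and `lane_mb_per_s` are illustrative names, not part of any library.

```python
# Per-direction bandwidth of a single PCIe lane, derived from each generation's
# signalling rate and line encoding. Protocol/packet overhead is ignored.
GENERATIONS = {
    # generation: (giga-transfers per second, payload bits, bits on the wire)
    1: (2.5, 8, 10),     # 8b/10b encoding
    2: (5.0, 8, 10),     # 8b/10b encoding
    3: (8.0, 128, 130),  # 128b/130b encoding
}


def lane_mb_per_s(gen: int) -> float:
    """Usable MB/s per lane, per direction, after line-encoding overhead."""
    gt_per_s, payload_bits, wire_bits = GENERATIONS[gen]
    bits_per_s = gt_per_s * 1e9 * payload_bits / wire_bits
    return bits_per_s / 8 / 1e6


for gen in GENERATIONS:
    print(f"PCIe {gen}.0: {lane_mb_per_s(gen):.1f} MB/s per lane")

# Roughly how many PCIe 2.0 lanes' worth of bandwidth one PCIe 3.0 lane carries.
print(f"PCIe 3.0 / PCIe 2.0 per lane: {lane_mb_per_s(3) / lane_mb_per_s(2):.2f}x")
```

A PCIe 3.0 lane works out to roughly twice a PCIe 2.0 lane (about 985 vs. 500 MB/s), which is why one 3.0 lane can, in principle, feed about two 2.0 devices' worth of traffic when something like a PCIe switch fans it out.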

Yes, the link back to the CPU can become a bottleneck, but that is a 4 GB/s bottleneck. If you have a few NVMe SSDs in RAID, the link to the CPU could be the limiting factor. But will you notice it during actual use? Not likely. You won't be able to get those sweet, sweet benchmark scores for maximum sequential read/write. But normal use isn't sequential read/write, so it doesn't really matter. And even then, 4 GB/s of read/write speed is still damn fast.
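Where the "4 GB/s" figure comes from, and when a RAID array behind the chipset would hit it, can be sketched in Python. This assumes the chipset uplink (DMI 3.0) behaves like a PCIe 3.0 x4 link, which is how it is usually described; the 3.5 GB/s per-drive figure is a hypothetical sequential-read rating used only for illustration, and `uplink_gb_per_s` / `raid_throughput` are made-up helper names.

```python
# Assumption: the chipset-to-CPU uplink (DMI 3.0) behaves like a PCIe 3.0 x4 link.
UPLINK_LANES = 4
GT_PER_S = 8.0          # PCIe 3.0 signalling rate per lane
ENCODING = 128 / 130    # 128b/130b line encoding


def uplink_gb_per_s() -> float:
    """Per-direction uplink bandwidth in GB/s, before protocol overhead."""
    return UPLINK_LANES * GT_PER_S * ENCODING / 8


def raid_throughput(drives: int, per_drive_gb_s: float) -> float:
    """Ideal sequential throughput of a striped array behind the chipset, capped by the uplink."""
    return min(drives * per_drive_gb_s, uplink_gb_per_s())


# Hypothetical drive rated at 3.5 GB/s sequential read (illustrative figure only).
PER_DRIVE_GB_S = 3.5

print(f"uplink: {uplink_gb_per_s():.2f} GB/s")
for n in (1, 2, 3):
    uncapped = n * PER_DRIVE_GB_S
    print(f"{n} drive(s): {raid_throughput(n, PER_DRIVE_GB_S):.2f} GB/s (uncapped {uncapped:.1f} GB/s)")
```

With these assumptions the uplink tops out just under 4 GB/s, so a single fast NVMe drive fits, while a second striped drive adds little sequential throughput. The sketch also ignores that USB, SATA and network traffic behind the chipset share the same uplink.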

But DMA means that data doesn't have to flow up to the CPU all the time. If you have a 10 Gb/s NIC and an NVMe SSD, data will flow directly from the SSD to the NIC.