Monday, September 25th 2017

AMD Phasing Out CrossFire Brand With DX 12 Adoption, Favors mGPU

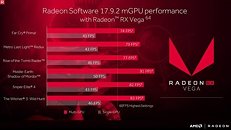

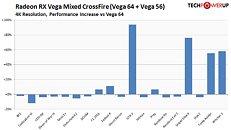

An AMD representative recently answered PC World's query regarding the absence of "CrossFire" branding on their latest Radeon Software release, which introduced multi-GPU support for AMD's Vega line of graphics cards. According to the AMD representative, it comes down to a technicality, in that "CrossFire isn't mentioned because it technically refers to DX11 applications. In DirectX 12, we reference multi-GPU as applications must support mGPU, whereas AMD has to create the profiles for DX11. We've accordingly moved away from using the CrossFire tag for multi-GPU gaming."

The CrossFire branding has been an AMD staple for years, dating back to before the brand was even AMD's - it was introduced to the market by ATI in 2005, as a way to market multiple Radeon GPUs being used in tandem. For years, this was seen as a semi-viable way for users to space out their investment in graphics card technology by putting in smaller amounts of money at a time - you'd buy a mid-range GPU now, then pair it with another one later to either bring your performance up to par for the latest games, or achieve the same performance levels as a more expensive, single-GPU solution. This has always been a little hit or miss with both vendors, due to a number of factors.

But now, the power to implement CrossFire or SLI isn't solely in the hands of the GPU vendors (AMD and NVIDIA). With the advent of DX 12 and explicit multi-adapter, it's now up to game developers to explicitly support mGPU technologies, which could even allow graphics cards from different manufacturers to work in tandem. History has proven this to be more of a pipe dream than anything, however. AMD phasing out the CrossFire branding is a sign of the times, in which the full responsibility of making sure multi-GPU solutions work shouldn't be placed at AMD's or NVIDIA's feet - at least on DX 12 titles.

Source: PCWorld

55 Comments on AMD Phasing Out CrossFire Brand With DX 12 Adoption, Favors mGPU

while crossfire has become mgpu as amd's marketing term for the thing, nothing really changed in technology itself.

story with dx11 and opengl stays the same. vulkan is a bit up in the air.

for dx12 it matters if we are talking about implicit or explicit multiadapter.

implicit is pretty much the same as it is with dx11.

dx12 and explicit is what you are talking about. also, that comes in two flavours - linked (the gpu-s appear as one adapter with multiple nodes, logically similar to sli/xf) and unlinked (each gpu appears as a separate adapter).

GPUs stand to benefit the most from MCM because of their massive die size. As you increase die size, cost goes up exponentially. MCM technology in GPUs would reduce the cost to produce high end GPUs by a large amount and it would allow them to scale the product up to almost any size. I say almost because there is a limit on the number of dies that can be put on a single chip with current tech.

I can only name two games that use GameWorks off the top of my head: the Arkham series and Witcher 3. PhysX was open sourced not too long ago because outside of UE4 and a few other games, it doesn't get used.

GameWorks is more a "We pay the devs so they can put in features that heavily favor our new cards to push sales".

Sure, GameWorks puts additional stress on the GPU (additional features do that), but implying GameWorks was developed solely as a means to push newer cards is a little out there, imho. Especially since the competition at the high end has been pretty much MIA for a few years now.

PS Witcher 3 is a GameWorks title that wows. But then again, Witcher 2 wasn't GameWorks, and it still wowed in its time (many couldn't believe it was "only" a DX9 title).

This is an acceptable gain (though in practice I find myself often skipping a generation). But an IGP will not add as much HP to your rig, thus you'll gain a lot less. Is it worth the hassle?

I guess the question is what's next. If Nvidia and AMD are not going to be making profiles anymore (or at least fewer than before), are they going to focus on new ecosystems for developers or something else?