Monday, October 2nd 2017

AMD Radeon Vega 64 Outperforms NVIDIA GTX 1080 Ti in Forza Motorsport 7, DX 12

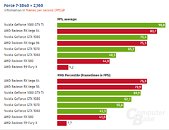

In an interesting turn of events, AMD's latest flagship video card, the RX Vega 64, has been put through a gaming performance analysis by fellow publication computerbase.de, which produced some interesting, and somewhat inspiring, findings. Their test system comprised a 4.3 GHz Intel Core i7-6850K (6 cores) paired with 16 GB of DDR4-3000 memory in quad-channel mode, running Crimson ReLive 17.9.3 / GeForce 385.69 drivers. On it, the publication found the Vega 64 outperforming the GTX 1080 Ti by upwards of 23%, a lead that grows to 32% when compared to NVIDIA's GTX 1080. The test wasn't based on the in-game benchmark, so as to avoid specifically optimized scenarios. 8x MSAA was used in all configurations, since "the game isn't all that demanding".

Demanding or not, the fact is that AMD's solutions are one-upping their NVIDIA counterparts in almost every price bracket at the 1920 x 1080 and 2560 x 1440 resolutions, and not only in average framerates but in minimum framerates as well. This really does seem to be a scenario where AMD's DX 12 dominance over NVIDIA comes into play: in CPU-limited scenarios, AMD's implementation of DX 12 allows their graphics cards to improve substantially. So much so, in fact, that even AMD's RX 580 graphics card delivers higher minimum framerates than NVIDIA's almighty GTX 1080 Ti.

AMD's lead over NVIDIA declines somewhat at 2560 x 1440, and even further at 4K (3840 x 2160). At 4K, however, we still see AMD's RX Vega 56 equaling NVIDIA's GTX 1080. Computerbase.de contacted NVIDIA, who told them they were seeing correct performance for the green team's graphics cards, so this doesn't seem to be just an unoptimized fluke. However, these results are tremendously different from typical gaming workloads on these graphics cards, as you can see from the below TPU graph, taken from our Vega 64 review.

Source: Computerbase.de

56 Comments on AMD Radeon Vega 64 Outperforms NVIDIA GTX 1080 Ti in Forza Motorsport 7, DX 12

Drivers don't optimize games in real time; in fact, driver optimizations are mainly limited to profiles with driver parameters, with rare cases of tweaked shader programs or special code paths in the driver. The driver never knows the internal state of the game.

No, this is another case of a console game ported to PC. There is nothing inherent in Direct3D 12 that benefits AMD hardware.

Mind you, it's not really a port anymore since they share the same x86 processor; it is more a case of 'tweak the game differently'.

Didn't they have to adjust a config file on the beta to get it to run past the 60 fps limit or something?

Then, oddly enough, as the res increases, AMD cards with HBM/HBM2 typically do better; here, however, the lower-bandwidth NVIDIA cards are catching up... I don't get that. And that's part of the reason I'm not sold on this becoming the norm.

As I said in the other thread, so far, it is an anomalous result.

www.techpowerup.com/gpudb/2086/xbox-one-gpu ---Made by AMD.

Personally this makes zero difference to me as I'll be playing on my Xbox.

All that being said I'd hate to have to swap cards for whenever I want to play a DX12 game though.

Also, this doesn't happen in other DX12 titles... so, is it really DX12 in the first place? We hope, but are we sure?

Same applies to GPUs. Consoles use AMD GPUs, so when porting to PC, developers have to optimize for nvidia too, especially with DX12 where the driver plays a much smaller role compared to DX11, although some don't bother to.

We had 9 non-store DX12 games in 2016 and a grand total of 3 in 2017 so far. That's a decrease. Maybe 2018 will be better?

We could also count store games but that's a slippery slope. Store games can only be DX12, so developers have no choice even if they don't want to use it. Look at Quantum Break. It's a DX12 game on the store, but when it came to Steam it became DX11.

We had 5 store only DX12 games in 2016 and 2 in 2017 so far, all published by MS.

With CPUs as fast as they are now I don't think this will crop up too often, but it's just one example of hardware beating fast software.

Meantime, in the real world the competition looks better than ever.

This one just swings way back the other way

1080p/1440p - HBM2's lower latency is feeding the GPU faster, even though bandwidth is similar to GDDR5X.

4K - Vega VRAM buffer exceeded and caching from system, textures still fully in VRAM for 1080 Ti. Lower FPS also slows the amount of fill requests.

Here's a link from 2016 showing AMD with 56% of the x86+GPU market--I'm projecting that it's higher now, since consoles generally outsell PC graphics cards (if anyone has anything more recent, please post): www.pcgamesn.com/amd/57-per-cent-gamers-on-radeon

Game developers know this, and they are in fact migrating their coding over to Radeon-optimized code paths. And before anybody jumps on this and says 'but nVidia customers spend more money on hardware', well, it's important to understand that to a game developer, some millionaire with two GTX 1080 Tis is only worth the same $60 as a 12 year old with an XBox One. They're both spending $60 on the latest World of Battlefield 7 (yes, made up game) title, so in the end, the developer is looking at which GPU is in the most target platforms.

If I'm Bethesda, for example, I realize that over 60% of the x86 machines that have sufficiently powerful GPUs (XBox, Playstation, PC) that I'd like to put my game on are powered by AMD Radeons. It's only logical that I'm therefore going to build the game engine to run very smoothly on Radeons. All Radeons since the HD7000-series in 2011 have hardware schedulers, which makes them capable of efficiently scheduling/feeding the compute/rendering pipelines from multiple CPU cores right in the GPU hardware. This makes them DX12 optimized, whereas nVidia GPUs, even up to today's 1000-series, still don't have this built-in hardware.

The extra hardware does increase the Radeon's power draw somewhat, and nVidia has made much of how their GPUs are more 'power efficient', but in reality, they're simply missing additional hardware schedulers, which if included in their design, would probably put them on an even level with AMD's power consumption. It's a bit like saying my car is slightly more fuel efficient than yours because I removed the back seats. Sure, you will use a little less gas, but you can't carry any passengers in the back seat, so it's a dubious 'advantage' you're pushing there.

Well, I bought a Radeon R9 290 for $259 Canadian back in December of 2013, which has played every game I like extremely well, and it might just run upcoming fully optimized DX12 titles like Forza-7 as well as or better than an $800 1080 Ti in the 99% frame times, so I'd hardly call that a fail. In fact, when the custom-cooled Vegas hit, I'm probably going to upgrade to one of those in my own rig, and put the R9 290 in my HTPC, because it'll probably still be pumping out great performance for a couple more years to come.
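For anyone unsure what the average, minimum and '99% frame time' figures quoted in this thread actually measure, here is a minimal Python sketch. The function name `frame_stats` and the sample frame times are made up for illustration and are not taken from computerbase.de's data. The percentile uses the simple nearest-rank method, which may differ from what a given review site uses.

```python
def frame_stats(frame_times_ms):
    """Return (average FPS, minimum FPS, 99th-percentile frame time in ms).

    frame_times_ms: per-frame render times in milliseconds (hypothetical input).
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    n = len(frame_times_ms)

    # Average FPS: total frames divided by total time in seconds.
    avg_fps = 1000.0 * n / sum(frame_times_ms)

    # Minimum FPS: the instantaneous rate of the single slowest frame.
    min_fps = 1000.0 / max(frame_times_ms)

    # 99th-percentile frame time, nearest-rank method:
    # the smallest sample with at least 99% of all samples at or below it.
    ordered = sorted(frame_times_ms)
    rank = (99 * n + 99) // 100  # integer ceil(0.99 * n)
    p99_ms = ordered[rank - 1]

    return avg_fps, min_fps, p99_ms


# Made-up run: 98 smooth frames at 10 ms and two stutters at 50 ms.
sample = [10.0] * 98 + [50.0] * 2
avg_fps, min_fps, p99_ms = frame_stats(sample)
print(f"avg {avg_fps:.1f} FPS, min {min_fps:.1f} FPS, 99% frame time {p99_ms:.1f} ms")
```

The point of the made-up sample is that two stutters barely move the average, while the minimum and the 99% frame time expose them, which is why reviews report more than average FPS.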