Monday, July 23rd 2018

Performance Penalty from Enabling HDR at 4K Lower on AMD Hardware Versus NVIDIA

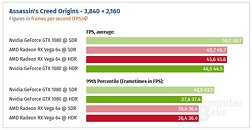

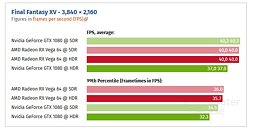

The folks over at Computerbase.de have taken it into their own hands to study exactly how much of an impact (if any) activating HDR on a 4K panel has on performance across different hardware configurations. Supposedly, HDR shouldn't impose any performance penalty on GPUs that were designed to handle that output at the hardware level; however, as we know, expectations can sometimes be wrong.

Comparing an AMD Vega 64 graphics card against an NVIDIA GeForce GTX 1080, the folks at Computerbase arrived at some pretty interesting results: AMD hardware doesn't incur as big a performance penalty (up to 2%) as NVIDIA's graphics card (10% on average) when going from standard SDR rendering to HDR rendering. Whether this is due to driver-level issues is unclear; however, it could also have something to do with the way NVIDIA's graphics cards process 4K RGB signals by applying color compression down to reduced-chroma YCbCr 4:2:2 in HDR, an extra amount of work that could slow down frame rendering. However, it's interesting to note that Mass Effect Andromeda, one of the games NVIDIA gave a big marketing push for and that showcased its HDR implementation, sees no performance differential on the green hardware.

I also seem to remember some issues regarding AMD's frame time performance being abysmal; looking at Computerbase's results, however, those times seem to be behind us.

Source: Computerbase

26 Comments on Performance Penalty from Enabling HDR at 4K Lower on AMD Hardware Versus NVIDIA

I'm guessing that's the difference between HBM and GDDR.

I wonder if this will fix the Sprite/sparkles seen on their hardware.

Either programmers are really sloppy or (more likely) there's something not obvious about Nvidia's drivers/APIs that isn't readily visible to developers. Whatever it is, let's hope it gets fixed/ironed out before HDR monitors become mainstream.

I am of the opinion that "The Way It's Meant to Be Played" is optimised more than people are aware of. HDR and high bit rate RGB limit some of their optimizations, until they re-optimise, that is.

Thank god their SDR color techniques are at least lossless.

Don't get me wrong people I still like Nvidia...

But in reality Nvidia cheats for the numbers... Always have and probably always will... At least they make it look good enough for most not to notice.

But now in the age of HDR... We get to see performance leveled and AMD is looking good..... Just in time to get stomped again..

Take-took-taken

Why? GameStar.de, another German site, also ran such tests on HDR (Link, in German) and on what performance impact to expect from using it. That was already back in '16.

The results? They were virtually identical, at least pattern-wise (nVidia took serious hits while AMD's were pretty much negligible). The outcome was literally the very same as the one Computerbase.de has come up with now in '18.

The thing is, nVidia was made aware of such outcomes back then, and they have yet to bring in any driver-side fix. It seems it's an architectural problem for them and a rather undesirable side effect of their infamous texture compression, and as such it can't easily be avoided without losing a good chunk of their performance … which is why nVidia rather loves to avoid talking much about it.

The obvious fact that they haven't fixed it since 2016 pretty much implies it's an architectural problem which can't be solved easily. It's been a known phenomenon for a while now, and all the thought experiments and scenarios played through came to the very same result: most likely it's nVidia's texture-compression algorithm that causes this … which isn't magically fixed overnight using some mighty wonder driver, at least not without having a rather huge impact on performance in the first place.

Did I mention that I love ~~blowbacks~~ boomerangs? Their paths are so awesomely easy to predict! ♥

Computerbase.de is known for not including the GTX 1080 Ti in its roundup tests, and the same goes for many other reviewers. The reason is that you can calculate where a Ti would place by adding a fixed margin (I believe 20%, correct me if I'm wrong here). Anyway, the placing of a Ti is literally calculable if a given test features a GTX 1080 reference card. So benching a dedicated Ti when you already have the results of a reference-clocked 1080 is just a waste of time, and is thus often skipped (for obvious reasons).

en.wikipedia.org/wiki/High-dynamic-range_rendering

which might explain it. If that's true, then Volta should be doing very well with HDR.

As for performance difference, the only thing I can think of is that GeForce has to complete some shader operations in two clocks instead of one because its hardware can't do it in one pass.

@R-T-B are you sure about SDR? I thought differently, and there is a visible difference between brands imho.

That's 15 monitors if you include the DisplayHDR 400 ones, which are really just standard monitors (i.e. not bottom of the barrel crap).

Plus, how do you think you look when you reply to "most monitors aren't really HDR" with "Yes mate ,they are ,I play any games i solo play at 4k"?

Also quote my other sentence: could it be better? Couldn't it always.

Ah, you did :( apologies.

They have done this before: when HDMI 2.0 was new, they would reduce the signal to 4:2:0 to push it to televisions/monitors. Yes, it works in 4K, but it looks like crap.
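For anyone wondering why chroma subsampling (4:2:2, 4:2:0) keeps coming up in this thread, here is a rough back-of-the-envelope sketch in Python of how much data each pixel format needs at 4K60. It is not taken from the Computerbase test. The function names are made up for illustration, and the numbers cover active pixels only, ignoring blanking intervals and link encoding overhead, so real link requirements are somewhat higher.

```python
# Rough estimate of uncompressed video data rates for different pixel formats.
# Assumption: only active pixels are counted (no blanking, no link overhead).

# Average number of samples stored per pixel:
# every pixel keeps its own luma (Y) sample, while the two chroma samples
# (Cb, Cr) are shared between neighbouring pixels when subsampling is used.
SAMPLES_PER_PIXEL = {
    "4:4:4": 3.0,  # full chroma resolution (same cost as RGB)
    "4:2:2": 2.0,  # chroma halved horizontally
    "4:2:0": 1.5,  # chroma halved horizontally and vertically
}


def bits_per_pixel(bit_depth, subsampling):
    """Average bits per pixel for a given per-sample bit depth and format."""
    return bit_depth * SAMPLES_PER_PIXEL[subsampling]


def active_data_rate_gbps(width, height, refresh_hz, bit_depth, subsampling):
    """Active-pixel data rate in Gbit/s (hypothetical helper, see assumptions)."""
    bits_per_second = width * height * refresh_hz * bits_per_pixel(bit_depth, subsampling)
    return bits_per_second / 1e9


if __name__ == "__main__":
    for depth, fmt in [(8, "4:4:4"), (10, "4:4:4"), (10, "4:2:2"), (10, "4:2:0")]:
        rate = active_data_rate_gbps(3840, 2160, 60, depth, fmt)
        print(f"4K60 {depth}-bit {fmt}: {rate:.2f} Gbit/s")
```

The takeaway is that 10-bit 4:2:2 needs about two thirds of the data of 10-bit full-chroma output, and 4:2:0 about half, which is why subsampling is the usual fallback when a link runs out of bandwidth.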