Sunday, August 26th 2018

AMD Announces Dual-Vega Radeon Pro V340 for High-Density Computing

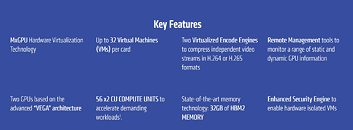

AMD today at VMworld in Las Vegas announced its new high-density, dual-GPU Radeon Pro V340 accelerator. The card is based on the same Vega architecture that powers AMD's consumer graphics lineup, and it crams both GPUs onto a single dual-slot board. 32 GB of second-generation high-bandwidth memory (HBM2) with Error Correcting Code (ECC) greases the wheels for the gargantuan amounts of data these accelerators are meant to crunch through, even as media processing requirements go through the roof.

"As the flagship of our new Radeon Pro V-series product line, the Radeon Pro V340 graphics card employs advanced security features and helps to cost effectively deliver and accelerate modern visualization workloads from the datacenter," said Ogi Brkic, general manager of Radeon Pro at AMD.

"The AMD Radeon Pro V340 graphics card will enable our customers to securely leverage desktop and application virtualization for the most graphically demanding applications," said Sheldon D'Paiva, director of Product Marketing at VMware. "With Radeon Pro for VMware, admins can easily set up a VDI environment, rapidly deploy virtual GPUs to existing virtual machines and enable hundreds of professionals with just a few mouse clicks."

"With increased density, faster frame buffer and enhanced security, the AMD Radeon Pro V340 graphics card delivers a powerful new choice for our customers to power their Citrix Workspace, even for the most demanding applications," said Calvin Hsu, VP of Product Marketing at Citrix.

Sources:

AMD, via Tom's Hardware

54 Comments on AMD Announces Dual-Vega Radeon Pro V340 for High-Density Computing

AMD has issued a dual GPU powerhouse to compete with NVIDIA on several occasions and it doesn't surprise me that they're attacking Turing from the same angle... but there is no way in hell this is passively cooled. Somebody on the marketing team FUBAR'd the render image on this one.

Btw, is it just me, or has the "dual GPU on one card" thing become AMD's standard answer to any situation in which one card isn't (or wouldn't be) enough?

The gaming market is a different story... they'll push out dual-GPU cards just to have the top product... but that hasn't happened for a while. In fact, the gaming industry is kinda moving away from multi-GPU.

Airflow is constant and evenly distributed in these chassis designs, and the cards are built to fit that. This actually makes them more flexible, not less, as it allows enterprise OEMs to pack in more cards at no scaling penalty. Packing in cards back-to-front across the whole chassis doesn't strangle the cards in between since they're all receiving the exact same cooling. For lower-density deployments (1-2 cards), or lower airflow chassis, some OEMs offer a small fan-rack that attaches to the back of each card such as the one pictured below:

So the takeaway from this is that if the V340 ever sees the light of day in the general consumer space, it'll absolutely be sporting an active cooler. As it stands, it's not a card for the general consumer, and as such is not designed for one.

Better put 2 more GPUs on there just for shits and giggles.

And then climb your way up from there. Maybe with 32 GPUs AMD can finally beat 2080 Ti SLI:

So, maybe. If they're making these, we might get the "defective" dual-Vega cards as gaming products. Or maybe these are the best Vega chips binned from all the time AMD has already spent making Vega cards, and they went into the top-tier product.

@Prima.Vera if SLI/xfire were better, I wouldn't mind seeing a card like that, even if it had midrange chips on it (GTX 1060 or RX 560). Or maybe one day they'll figure out how to just "make" them work. It shouldn't really be enormously difficult; GPUs are already massively parallel streaming processors by nature. I don't quite understand why there's such an enormous hurdle to making two cards work together (properly) when a single chip already consists of thousands of cores anyway...

That's why these servers must stay closed all the time: it forces the airflow in the correct direction. If you open the case while the server is running, you will quickly face overheating; the server will throttle first and eventually shut down. But most components are meant to be hot-plugged, even these fans. When one fan fails, the server management software gives you a notice/alarm, and you then open the case and replace the fan quickly, before the server shuts down.

While genius, the main issue with this design is noise. Most servers use small fans spinning at very high RPM to get proper airflow, which raises noise levels beyond comfort, but they're already designed to be used in a dedicated server room.

Connections between different GPUs are slow compared to the interconnects inside a single chip. AMD has never said how fast its Infinity Fabric (IF) is, inside or outside of a Vega (they have said that the memory controller, GPU, media engine etc. are connected using IF, but gave no real technical details). For comparison, Nvidia's new Turing has 2 NVLinks at 50 GB/s both ways. IF scaling for connecting Vega to the outside of the chip is likely in the same range. PCIe is shared with other uses, most notably moving data from RAM or storage to VRAM, and it does not have guaranteed latency, on top of being slower (PCIe 3.0 x16 has 16 GB/s of bandwidth). These interconnects (both IF and NVLink in this case) are scalable and can be widened, but that comes at a cost in both die space and power usage.
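The 16 GB/s PCIe figure falls out of the link's signaling rate. A quick Python sketch, assuming PCIe 3.0's 8 GT/s per lane and 128b/130b line encoding and ignoring packet/protocol overhead, reproduces it and compares it with the NVLink number above (treating that 50 GB/s as a per-direction figure, which is an assumption):

```python
# Per-direction PCIe 3.0 bandwidth from the signaling rate.
# Assumptions: 8 GT/s per lane, 128b/130b encoding, no packet/protocol overhead.
PCIE3_GT_PER_LANE = 8.0          # gigatransfers (bits) per second per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding

# NVLink figure from the comment, assumed here to be per direction.
NVLINK_TURING_GB_S = 50.0


def pcie3_bandwidth_gb_s(lanes: int) -> float:
    """Usable per-direction bandwidth in GB/s for a PCIe 3.0 link."""
    bits_per_second = PCIE3_GT_PER_LANE * 1e9 * ENCODING_EFFICIENCY * lanes
    return bits_per_second / 8 / 1e9  # bits -> bytes -> GB


x16 = pcie3_bandwidth_gb_s(16)
print(f"PCIe 3.0 x16: {x16:.2f} GB/s per direction")
print(f"NVLink (as quoted) is about {NVLINK_TURING_GB_S / x16:.1f}x that")
```

The result is about 15.75 GB/s, which is where the commonly quoted "16 GB/s" comes from; real-world throughput is lower once protocol overhead is included.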

Due to the way the graphics pipeline works, there needs to be a lot of synchronization between GPUs if you want to use multiple GPUs for gaming. There are both bandwidth and (related) latency issues, because real-time rendering has strict timing requirements.
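To put the timing constraint in numbers, here is a rough back-of-the-envelope sketch in Python. It assumes a hypothetical alternate-frame-rendering setup in which a finished 4K frame (RGBA8, 4 bytes per pixel) has to be copied from the second GPU to the display GPU over an otherwise idle PCIe 3.0 x16 link at ~15.75 GB/s; real drivers, surface formats, and link contention will differ.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / fps


def copy_time_ms(width: int, height: int, bytes_per_pixel: int, link_gb_s: float) -> float:
    """Ideal time to move one finished frame across the link, in milliseconds."""
    frame_bytes = width * height * bytes_per_pixel
    return frame_bytes / (link_gb_s * 1e9) * 1000.0


# Hypothetical scenario: 4K RGBA8 frame over an idle PCIe 3.0 x16 link.
LINK_GB_S = 15.75
for fps in (60, 144):
    budget = frame_budget_ms(fps)
    copy = copy_time_ms(3840, 2160, 4, LINK_GB_S)
    print(f"{fps:>3} fps: budget {budget:.2f} ms, "
          f"copy {copy:.2f} ms ({copy / budget:.0%} of budget)")
```

Under those ideal assumptions the copy alone takes about 2.1 ms, roughly an eighth of the 16.67 ms budget at 60 fps and close to a third at 144 fps, before any synchronization or the frame's actual rendering work is counted.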

Multiple GPUs work fine for applications where either the requirements are not as strict (massive computations and GPGPU) or the resource sharing runs the other way around (multiple users on the same GPU, as in desktop virtualization, which is what this V340 is intended for).

tl;dr

There are technical reasons why multiGPU gaming is difficult. XF/SLI are being wound down and that is not likely to change.