Sunday, August 26th 2018

AMD Announces Dual-Vega Radeon Pro V340 for High-Density Computing

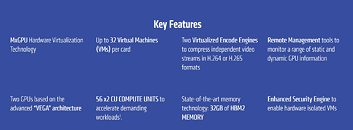

AMD today at VMworld in Las Vegas announced its new dual-GPU Radeon Pro V340 accelerator for high-density computing. The card is based on the same Vega architecture that powers AMD's consumer graphics card lineup, and packs two GPUs into a single dual-slot design. 32 GB of second-generation high-bandwidth memory (HBM2) with Error Correcting Code (ECC) handles the large amounts of data these accelerators are meant to process, even as media processing requirements keep climbing.

"As the flagship of our new Radeon Pro V-series product line, the Radeon Pro V340 graphics card employs advanced security features and helps to cost effectively deliver and accelerate modern visualization workloads from the datacenter," said Ogi Brkic, general manager of Radeon Pro at AMD.

"The AMD Radeon Pro V340 graphics card will enable our customers to securely leverage desktop and application virtualization for the most graphically demanding applications," said Sheldon D'Paiva, director of Product Marketing at VMware. "With Radeon Pro for VMware, admins can easily set up a VDI environment, rapidly deploy virtual GPUs to existing virtual machines and enable hundreds of professionals with just a few mouse clicks."

"With increased density, faster frame buffer and enhanced security, the AMD Radeon Pro V340 graphics card delivers a powerful new choice for our customers to power their Citrix Workspace, even for the most demanding applications," said Calvin Hsu, VP of Product Marketing at Citrix.

Sources:

AMD, via Tom's Hardware

54 Comments on AMD Announces Dual-Vega Radeon Pro V340 for High-Density Computing

More like: "A main reason for the shortage of graphics cards and the biggest contributor to global warming."

Despite the name Radeon Pro, these are meant to compete with NVIDIA Teslas at GPU virtualization. Someone should tell their marketing department that the Tesla V100 32GB exists, though.

V100 is a hell of a lot more expensive, so "not in the same class" and V100 is also sold primarily for compute, not virtualization :)

Wonder what the mining efficiency would be, pretty good I guess?