Friday, November 9th 2018

TechPowerUp Survey: Over 25% of Readers Game or Plan to Game at 4K Resolution

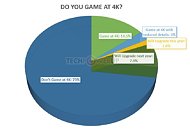

More than a quarter of TechPowerUp readers either already game at 4K Ultra HD resolution or plan to do so by next year, according to our front-page poll, which has run for the past 50 days. We asked our readers whether they game at 4K. Of the 17,175 respondents at the time of this writing, 14.5 percent said they already game at 4K UHD (3840 x 2160 pixels), which means not only owning a 4K display but also having their games render at that resolution. Another 3 percent say that while they have a 4K display, they game at lower resolutions or with reduced detail, probably because their PC hardware can't yet handle 4K.

Almost a tenth of the respondents (9.5 percent, to be precise) say that while they don't game at 4K yet, they plan to do so in the near future: 1.6 percent expect to go 4K within 2018, and 7.9 percent in 2019. The remaining 73 percent of our readers neither game at 4K nor plan to any time soon. These results are encouraging, as a reasonably big slice of our readership is drawn to 4K, the high-end gaming resolution of this generation, which has four times as many pixels as Full HD (1080p). Many of the 9.5 percent lining up to upgrade may need to replace not just their displays but also other hardware, such as graphics cards, and perhaps even the rest of their platforms, to cope with 4K.
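The survey arithmetic can be checked with a few lines of Python. This is a minimal sketch: the shares are copied from the article and stored in tenths of a percent (a choice made here only so the sums stay exact), and the pixel counts use the 3840 x 2160 (4K UHD) and 1920 x 1080 (Full HD) resolutions. It suggests the "over 25%" headline figure comes from adding every group except the 73 percent "neither" group: 14.5 + 3 + 9.5 = 27 percent.

```python
# Minimal sketch: checking the survey breakdown and the 4K vs. Full HD pixel ratio.
# Shares are stored in tenths of a percent (145 means 14.5%) so integer sums stay exact.
SHARES_TENTHS = {
    "already game at 4K": 145,
    "own a 4K display but game at lower settings": 30,
    "plan to go 4K in 2018": 16,
    "plan to go 4K in 2019": 79,
    "neither game at 4K nor plan to": 730,
}


def pct(tenths: int) -> str:
    """Format a value in tenths of a percent, e.g. 270 -> '27.0%'."""
    return f"{tenths // 10}.{tenths % 10}%"


# Respondents planning to move to 4K (2018 + 2019).
planning = SHARES_TENTHS["plan to go 4K in 2018"] + SHARES_TENTHS["plan to go 4K in 2019"]

# Every group except "neither": the apparent basis of the "over 25%" headline.
drawn_to_4k = (
    SHARES_TENTHS["already game at 4K"]
    + SHARES_TENTHS["own a 4K display but game at lower settings"]
    + planning
)

# All five groups together should account for 100% of respondents.
total = sum(SHARES_TENTHS.values())

# 4K UHD vs. Full HD pixel counts; divmod confirms an exact 4x ratio.
UHD_PIXELS = 3840 * 2160
FHD_PIXELS = 1920 * 1080
ratio, remainder = divmod(UHD_PIXELS, FHD_PIXELS)

if __name__ == "__main__":
    print("Planning to go 4K:", pct(planning))       # 9.5%
    print("Drawn to 4K overall:", pct(drawn_to_4k))  # 27.0%
    print("All groups combined:", pct(total))        # 100.0%
    print(f"4K UHD has {UHD_PIXELS:,} pixels vs {FHD_PIXELS:,} for Full HD: {ratio}x")
```

Working in integer tenths avoids floating-point rounding noise when checking that the published percentages add up.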

99 Comments on TechPowerUp Survey: Over 25% of Readers Game or Plan to Game at 4K Resolution

From this perspective... the two technologies are very similar.

G-Sync provides adaptive sync starting at about 30 fps and its impact trails off greatly after 60 fps.

Free-Sync provides adaptive sync starting at about 40 fps and its impact trails off greatly after 60 fps.

From this perspective... the two technologies are very dissimilar.

G-Sync monitors include a hardware module, which is the reason for the difference in cost between the two technologies. When the first G-Sync-ready monitors came out, you could buy the module as a $200 add-on. Adaptive sync is intended to eliminate display issues that occur when fps drops below 60. The hardware module also provides Motion Blur Reduction (MBR), which is quite useful above 60 Hz, where the problems adaptive sync solves are no longer a significant issue. Of course, minimum fps is lower than average fps, so some cushion is needed; when fps averages 70 or so, MBR provides a superior visual experience. The typical 1440p 144/165 Hz owner, assuming they have the GFX horsepower, plays with G-Sync OFF and ULMB (Ultra Low Motion Blur, NVIDIA's MBR mode) ON until that rare game that won't let them maintain fps above 60.

Freesync monitors are not equipped with this hardware module. Yes, adaptive sync continues to work above 60 fps, but its effects diminish greatly as fps increases. And there is no high-fps alternative to switch to, because Freesync monitors include no MBR hardware module.

So, when ya are ready to go 4k ....

With AMD, grab a decent 60/75 Hz panel and wait for a next-gen AMD card that can handle the games you want to play at 40-75 fps.

With nVidia, wait till ya can afford what is now a $2500 144 Hz panel, as well as reasonably affordable nVidia card(s) that can handle the games you want to play at 75-144 Hz.

Personally, I won't give up 144 Hz ULMB to go to 4K, and I want to do it at reasonable expense... I'm thinking 2020.

I know that optimism is often described as seeing the glass half full, while pessimists say it's half empty.

What is this, presenting 25% of TPU readers as "almost playing at 4K"? Lying, or just bending the truth a bit?

Is your goal to become a fake news server or what?

It will become standard when GPUs are cheap enough to drive 4K without effort, and the same goes for LCDs.

That means 2-5 years or more, depending on the situation in the market.

Personally, I want a 30" 16:10 8K-or-more (7680x4800) screen. It's more useful for heavy multi-monitor work... unless they go massively all out and build a 48:10 monster (23040x4800) :D

I remember when I went from 1080p gaming to 1440p gaming, the only barriers for me were about $650 for a nice wide colour gamut, high refresh rate Korean monitor and a GeForce 970. I had no other issues to deal with, as the rest of the system was good enough, so the cost was manageable. If I wanted to go after the 4K experience now, I would need a totally new PC and about $2000 worth of monitor first. I just cannot justify that; plus, the experience is simply not good enough, nor will it last long enough, to match the cost.

But yes, I have no doubt that in 10 years, 4K HDR will be the new 1080p.

I think 2560x1440 is my maximum resolution; right now I play at FHD, and it's OK when you have a good monitor.

Playing at 4K needs much more cash, because on top of a very expensive 4K monitor you also need a high-performance GPU, I think a 2080 Ti.

So I'd say very few people are going 4K for now, and 85% of people still stay at FHD and WQHD resolutions.

4K gaming doesn't feel like anything more than FHD; it's a fact... after a few weeks of playing.

Frankly, I really don't understand all the talk about how 4K isn't ready. I've been hearing it for years now and have been doing it for several years with no issues.

I currently game with a 1080Ti on a 43" LG panel 4K monitor. It's one of the Korean ones with low input lag, built as a PC monitor with no TV tuner. I paid $500 for it 2 years ago and can game with 4:4:4 chroma and low input lag. I can't tell the difference in frame rates since it's 60 Hz, but Battlefield runs at a consistent 100+ FPS on the 1080Ti with all settings on Ultra.

4K is glorious on a big screen, and I would never go back to 1440p, even with a higher refresh rate. You really don't need a lot of cash. A large-screen 4K monitor off eBay is not that expensive, and you can get a used 1080Ti and be good to go. The talk that it's expensive or not ready is just not true.

Hell, my dad only has 1 app he uses often that doesn't support scaling properly, but given he uses 250% scaling on his 13" 4K laptop, there are more than enough pixels to keep things only slightly blurry. Meanwhile, everything else he uses scales perfectly.

And even then, we're not talking 20% of all people. Just 20% of the tech enthusiasts that frequent TPU.

I'm sure we'll all game at 4k eventually. But today, it's a bridge too far for me.

You really think next year, or the year after that, those numbers will magically change?

1080p took 15 years to become "standard" and we STILL have 1/3 of ALL the gamers in the World playing at LOWER resolutions!!!

2018 - 1995 = 23 years have passed. And we still have people playing at 1280x720.

Man, we all have dreams. But money is the biggest issue.

As long as our equipment works (and 1080p is easily handled by $200 GTX 1060s), we won't jump on the 4K bandwagon.

No need to. Simple as that.

(oldness incoming) I remember a time when $150-200 got you a good mid-range video card, which let you play ANYTHING fluently and at a good resolution, and a high-end card with spectacular performance was $400. That $400 is still my maximum budget for a video card. I got my GTX 1080 about this time last year for $360, and I'll stick with it until something 40-50% faster for under $400 comes out. Right now I'm gaming @ 2k (Dell U2713h), and I'm in no hurry to spend lots of money on 4k gear.

In a blind test, people noticed that 240 Hz 1080p was lower resolution in both images and games, yet the difference between 1440p 144 Hz and 4K 60 Hz was much harder to spot on still images. In games, 144 Hz obviously felt smoother.