Wednesday, January 9th 2019

AMD Announces the Radeon VII Graphics Card: Beats GeForce RTX 2080

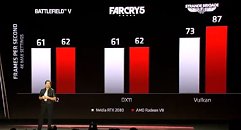

AMD today announced the Radeon VII (Radeon Seven) graphics card, implementing the world's first GPU built on the 7 nm silicon fabrication process. Based on the 7 nm "Vega 20" silicon with 60 compute units (3,840 stream processors), and a 4096-bit HBM2 memory interface, the chip leverages 7 nm to dial up engine clock speeds to unprecedented levels (above 1.80 GHz possibly). CEO Lisa Su states that the Radeon VII performs competitively with NVIDIA's GeForce RTX 2080 graphics card. The card features a gamer-friendly triple-fan cooling solution with a design focus on low noise. AMD is using 16 GB of 4096-bit HBM2 memory. Available from February 7th, the Radeon VII will be priced at USD $699.

Update: We went hands on with the Radeon VII card at CES.

157 Comments on AMD Announces the Radeon VII Graphics Card: Beats GeForce RTX 2080

Edit: Not seeing 300 here either...

Nobody knows power consumption figures at this point.

And if the Radeon VII needs 3 fans for the reference model, what can AIBs offer?

Looks like it's highly OC'd... let's see.

From what I heard, the RTX 2080 is easily faster than the Radeon VII, and if we compare efficiency, the RTX 2080 crushes the Radeon VII.

The Radeon VII, even though it is built on 7nm, is not hardware for engineers to cheer about, far from it.

AMD fans disappointed... again.

Well, wait a few weeks for the tests and see for yourself.

With a 7nm architecture and still over 300 W gaming power draw, and still losing to the RTX 2080 and clearly to the RTX 2080 Ti, it is NOT and can't be an editor's choice. That's a fact.

AMD has all the aces in their hands, but the result is average.

Well, let's see when Nvidia releases their next RTX 300 series. It's surely built on 7nm too, and NO model will go over 250 W!

Bad work, AMD, again.

Intriguing...

You can get a Vega 64 for $400, or $500 if you don't want to wait and just pick one up anywhere.

OR, you can get 30% more performance at $800. Which makes no sense in terms of perf/$. And much like how I think turing sucks due to perf/$ compared to pascal, I think radeon VII sucks compared to vega. This chip should be $500, not $800.
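The perf/$ comparison above can be checked with a few lines of arithmetic. This is only a rough sketch: it assumes Vega 64 performance is normalized to 1.0, takes the commenter's "30% more performance" at face value, and uses the commenter's prices ($400, $500, $800). The function name `perf_per_dollar` is made up for illustration. Note that the article's announced price is $699, so the function can be re-run with that figure as well.

```python
# Relative performance per dollar, using the figures quoted in the comment.
# Assumptions (not measured data): Vega 64 = 1.0 relative performance,
# the Radeon VII = 1.3, and the prices are the commenter's own numbers.

def perf_per_dollar(relative_perf: float, price_usd: float) -> float:
    """Return relative performance delivered per US dollar."""
    return relative_perf / price_usd

vega64_low = perf_per_dollar(1.0, 400)    # Vega 64 at $400
vega64_high = perf_per_dollar(1.0, 500)   # Vega 64 at $500
radeon_vii = perf_per_dollar(1.3, 800)    # 30% faster card at $800

# A ratio below 1.0 means the newer card delivers less performance per dollar.
ratio_vs_low = radeon_vii / vega64_low
ratio_vs_high = radeon_vii / vega64_high

# Price at which a 30%-faster card would match a $400 Vega 64 on perf/$.
break_even_price = 1.3 * 400

print(f"{ratio_vs_low:.2f}")   # 0.65
print(f"{ratio_vs_high:.2f}")  # 0.81
```

Under these assumptions the newer card delivers roughly 65% to 81% of the Vega 64's performance per dollar, and it would need to cost about $520 to match a $400 Vega 64, which is close to the $500 the comment suggests.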

This also doesn't look good for AMD's next generation IMO. They need 7nm to meet the performance and power consumption of 14nm Turing. When Turing is inevitably ported to 7nm, AMD will once again be several steps behind.

Sure, prices probably won't reach early 2010s levels anytime soon, but the current jacked-up prices can solely be laid at the feet of no competition. Nvidia has had sole control of the market for 3 years now, and AMD still isn't releasing much in the way of competition, seeing as their 7nm chip manages to be less efficient than a 14nm Nvidia chip.

The moment AMD bothers to compete with Navi, prices will magically begin to fall as team red and team green begin to undercut each other. Much like the CPU space, where the ability to economically make an 8 core intel chip on the mainstream socket went from impossible to publicly available once ryzen hit the scene.

www.quora.com/How-much-does-it-cost-to-tapeout-a-28-nm-14-nm-and-10-nm-chip

AMD showed a similar chart itself. Costs are growing exponentially, not linearly. 7 nm likely costs double what 16-12 nm did. Sprinkle in the fact that HBM2 and GDDR6 supply is limited, and you got a perfect storm of higher prices.

HBM2 has been in "short supply" for over 18 months now, that was the excuse when vega 64 came out. So either that excuse is complete bunk, or "short supply" is actually "normal supply" and AMD really needs to stop using it. Either way it is a poor decision by AMD, resulting in a lack of proper competition leading to massive price rises.

Go look at Nvidia's quarterly earnings reports. 47% Y/Y increase in net income for Q3 2018, for instance, despite 14nm being 3X more expensive than 28nm. If the price increases mattered as much as you say they did, that net income would not be so freaking high. Nvidia and AMD are taking advantage of the market to pump up prices and net incomes through the roof. The higher prices would result in a marginal cost increase for GPUs, not the massive doubling we have seen the last 3 years. This is only possible because Nvidia has no competition, and now AMD is trying to get a piece of that pie.

If we had proper GPU competition, prices would likely be 30-40% lower than they are now. The high GPU prices in the current market are a direct result of Nvidia wanting to line their pockets with fatter margins, and their financial results show that quite blatantly with record revenues, record net profits, and higher cash dividends and stock buybacks. The "rising costs" excuse is simple gaslighting to mislead people into accepting higher prices. While it is highly unlikely we will ever see $300 x80 GPUs again, they shouldn't cost anywhere near $800.

You're forgetting that smaller nodes also mean more defects/higher failure rates. Why do you think AMD went full chiplet design for 7nm Ryzen? It's a cost-containing measure.

The bulk of HBM2 goes into cards that sell for many thousands of dollars (MI25 apparently still goes for $4900 or more). Radeon Vega cards get whatever is left.

AMD put the squeeze on Intel because of the chiplet design that let them make processors cheaper. Chiplet isn't something that really works for GPUs. Navi's original stated goal was to do exactly that; however, Navi being chiplet in design was apparently descoped years ago. NVIDIA hasn't even dabbled in chiplet as far as I know. Without chiplet GPU designs, costs will only get worse as processes shrink.