Wednesday, January 9th 2019

AMD Announces the Radeon VII Graphics Card: Beats GeForce RTX 2080

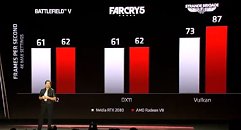

AMD today announced the Radeon VII (Radeon Seven) graphics card, implementing the world's first GPU built on the 7 nm silicon fabrication process. Based on the 7 nm "Vega 20" silicon with 60 compute units (3,840 stream processors) and a 4096-bit HBM2 memory interface, the chip leverages 7 nm to dial up engine clock speeds to unprecedented levels (possibly above 1.80 GHz). CEO Lisa Su states that the Radeon VII performs competitively with NVIDIA's GeForce RTX 2080 graphics card. The card features a gamer-friendly triple-fan cooling solution with a design focus on low noise, and carries 16 GB of HBM2 memory on that 4096-bit interface. Available from February 7th, the Radeon VII will be priced at US$699.
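As a rough sanity check on the figures above, the short Python sketch below reproduces the stream processor count and estimates peak memory bandwidth from the bus width. Two inputs are assumptions rather than numbers from the announcement: 64 stream processors per compute unit (the usual GCN arrangement) and an HBM2 per-pin data rate of 2.0 Gbit/s. The function names are made up for illustration.

```python
# Back-of-the-envelope check of the quoted Radeon VII specs.
# Assumptions (not stated in the announcement):
#   - 64 stream processors per GCN compute unit
#   - HBM2 per-pin data rate of 2.0 Gbit/s

STREAM_PROCESSORS_PER_CU = 64   # assumption: usual GCN layout
HBM2_PIN_RATE_GBPS = 2.0        # assumption: per-pin rate in Gbit/s


def stream_processors(compute_units: int) -> int:
    """Total stream processors for a given number of compute units."""
    return compute_units * STREAM_PROCESSORS_PER_CU


def memory_bandwidth_gb_per_s(bus_width_bits: int, pin_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: every bus pin moves pin_rate_gbps Gbit/s,
    and dividing by 8 converts bits to bytes."""
    return bus_width_bits * pin_rate_gbps / 8


if __name__ == "__main__":
    print(stream_processors(60))                                 # 60 CUs as quoted
    print(memory_bandwidth_gb_per_s(4096, HBM2_PIN_RATE_GBPS))   # 4096-bit bus as quoted
```

Under those assumptions, 60 compute units work out to the quoted 3,840 stream processors, and the 4096-bit interface comes to roughly 1 TB/s of peak bandwidth.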

Update: We went hands on with the Radeon VII card at CES.

157 Comments on AMD Announces the Radeon VII Graphics Card: Beats GeForce RTX 2080

Instead, we see a regression in the number of CUs, and yes, double the ROPs but no clear gains from an almost 40% smaller fab process.

It's mind-boggling. Nvidia screwed up by dedicating more silicon to RT and tensor units, silicon that could've been used for more CUDA cores and more performance, but they bet the farm on ray tracing and DLSS.

If you ask me, AMD is making a very similar mistake: fewer CUs, but double the HBM2, raising the price to the point where they cannot be competitive with Nvidia like they always have been.

A missed opportunity for both camps, but hindsight is 20/20.

AMD is betting the gaming house on Navi.

You might be onto something.

A shrunk Vega splitting the difference in CUs, double the memory, overclocked to use the same 300 W... double the ROPs too, yet not a lot to show for it? Well, it's a stopgap, a cheap one that cost them little to no R&D, and they get to use dies that didn't cut it for Instinct products. Not a whoooole lot to get excited about, especially since they are following Nv's price point for that level of performance, without RT/DLSS/VRS(?) and at significantly higher power consumption, and thus probably more heat to dissipate.

I do believe, however, that this card will do decently as the resolution increases. As a vague example, let's say it might trail a 2080 by 5% at 1440p but lead it by 5% at 2160p across more than 3 games. These vendor slides are always whimsical as hell; Nv and AMD both do it.

As always I eagerly await W1z's full review before truly being able to judge the card against the competition.

Just because they were first to get a TSMC 7 nm contract doesn't mean they are better.

Only when Nvidia releases 7 nm will it be a real comparison.

Lisa isn't responding with hate, but she did drop some interesting tidbits in there. Might allude to the recent announcement that PlayStation's upcoming Gran Turismo is working on in-house ray tracing.

NVIDIA GeForce RTX 2080 Founders Edition 8 GB Review

Just look at Vega 56 vs 64, they perform the same at equal clocks 99% of the time.

Hell, even the Fury vs Fury X is like that.

These chips are mostly geometry-limited in games; throwing more CUs at them won't make it any better.

But hey, I bet this Radeon 7 mines crypto coins like no tomorrow! 16 GB of freaking HBM2 at 1 TB/s bandwidth?? If crypto coins take off again, these cards will sell like gold!

The card is good for now if it can really match the 2080 and has 16 GB onboard. The problem will be 2020, when 7 nm Nvidia will have NO competition. NONE.

16GB of HBM2 is a gimmick that is gonna be hella pricey though; while I doubt AMD will make a loss on this card, there's no way their margins are anywhere near NVIDIA's. So this is just an attempt to save face, and as others have mentioned, it rather points to the fact that Navi is not progressing as well as AMD had hoped.