Wednesday, January 9th 2019

AMD Announces the Radeon VII Graphics Card: Beats GeForce RTX 2080

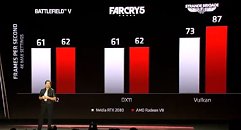

AMD today announced the Radeon VII (Radeon Seven) graphics card, implementing the world's first GPU built on the 7 nm silicon fabrication process. Based on the 7 nm "Vega 20" silicon with 60 compute units (3,840 stream processors) and a 4096-bit HBM2 memory interface, the chip leverages 7 nm to dial up engine clock speeds to unprecedented levels (possibly above 1.80 GHz). CEO Lisa Su states that the Radeon VII performs competitively with NVIDIA's GeForce RTX 2080 graphics card. The card features a gamer-friendly triple-fan cooling solution with a design focus on low noise, and carries 16 GB of HBM2 memory. Available from February 7th, the Radeon VII will be priced at US$699.

Update: We went hands on with the Radeon VII card at CES.

157 Comments on AMD Announces the Radeon VII Graphics Card: Beats GeForce RTX 2080

16 GB HBM??? Holy cow, that's ridiculous. But how much does it help?

Wish this was competing with the RTX 2080 Ti... Sadly, it still seems the only card worth getting as an upgrade for me would be the 2080 Ti.

I read the clocks are expected to be up to 1.8 GHz. If it can overclock to that 2.4 listed on here, that would be interesting to see!

AMD would probably be better off with 8 GB and cutting the price. Well, Radeon Pro Duo (Fiji) was $1499 and Vega 64 Liquid $699 (to the extent it actually sold).

But I guess the argument over Nvidia overpricing is much weaker now.

Great value for video content creators and computing, not so great for gaming. Its FP64 performance of 6.7 TFLOPS is on par with the Titan V (costing $3K). This is not a true gaming card.

If true, power draw will be around 300 W. Vega 64 deja vu all over again :(

Sad, sad times in the GPU market, absolutely depressing. I'm glad I bought a PS4 Pro last year...

I do wonder why AMD goes with this HBM memory rather than GDDR6 or something?? Meh, what do I know :D

j/k of course.

This is great news for the gaming landscape.

My best guess is that AMD realized their best case scenario for Navi 10 would be in the fall, and there could be a risk of further delays. Still, Vega 20 with 16GB HBM2 is expensive to produce.

That doesn't make you wrong on the Navi front though. I mean, AMD has announced their plans for CPUs till Q2 or Q3. If they said nothing about GPUs, it's likely nothing is planned.