Tuesday, December 10th 2019

Intel Core i9-10900K 10-core Processor and Z490 Chipset Arrive April 2020

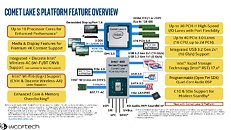

Intel is expected to finally refresh its mainstream desktop platform with the introduction of the 14 nm "Comet Lake-S" processors in Q2-2020. This sees the introduction of the new LGA1200 socket and Intel 400-series chipsets, led by the Z490 Express at the top. Platform maps of these PCI-Express gen 3.0 based chipsets make them look largely similar to the current 300-series platform, with a few changes. For starters, Intel is introducing its biggest ACPI change since the C6/C7 power states that debuted with "Haswell," in the form of C10 and S0ix Modern Standby power states, which give your PC an iPad-like availability while sipping minimal power. This idea is slightly different from Smart Connect, in that your web-connected apps and processor work at an extremely low-power (fanless) state, rather than waking your machine up from time to time for the apps to refresh. 400-series chipset motherboards will also feature updated networking interfaces, such as support for 2.5 GbE wired LAN with an Intel i225-series PHY, 802.11ax WiFi 6 WLAN, etc.

HyperThreading will play a big role in making Intel's processor lineup competitive with AMD's, given that the underlying microarchitecture offers an identical core design to "Skylake" circa 2015. The entry-level Core i3 chips will be 4-core/8-thread, Core i5 6-core/12-thread, Core i7 8-core/16-thread; and leading the pack will be the Core i9-10900K, a 10-core/20-thread processor. According to a WCCFTech report, this processor will debut in April 2020, which means at CES 2020 in January, we'll get to see some of the first socket LGA1200 motherboards, some even based on the Z490. The platform map also mentions an interesting specification: "enhanced core and memory overclocking." This could be the secret ingredient that makes the i9-10900K competitive with the likes of the Ryzen 9 3900X. The LGA1200 platform could be forwards-compatible with "Rocket Lake," which could herald IPC increases on the platform by implementing "Willow Cove" CPU cores.

Source:

WCCFTech

96 Comments on Intel Core i9-10900K 10-core Processor and Z490 Chipset Arrive April 2020

April 2020, and it'll be a couple of months away from going up against Ryzen 4000, which is two generations ahead in terms of process node and, well, even performance given the rumours.

- Less cores

- Slower IPC

- Much less efficient

- Slower multithreading

- Slower single core

- Loses in 720p gaming with 2080 Ti tests (likely given the +10% IPC and +5% frequency rumours of 4000 series).

But 5Ghz all-core drawing 450W. Yay Intel!

On the other side, AMD's frequent architectural jumps mean that by the time hackers glean enough info about Zen 2, 3, 4 to start exploiting it, AMD will have moved on to newer architecture anyway.

Server Atoms (C-series) are still in production, but haven't been updated since 2018.

Ultimately the Atom lineup will be replaced by ARM.

Consumer Atom lineup was dropped.

I'm not sure what will happen when Tremont arrives.

I've seen rumors that server chips (and everything with ECC) will be unified under Xeon brand...

Intel is faster at out of order operations, due to AMD using a chiplet design.

When gaming at resolutions of 1080 or above AMD are neck and neck. At 720 or lower Intel wins, but who games at that resolution?

AMD is significantly faster at 80% of other actual work due to more cores, and more cache.

www.techpowerup.com/review/intel-core-i9-9900ks/21.html

Do you even read reviews?

Rumors - not relevant

Cores - Don't care

IPC - Don't care

Efficiency - Don't care

Multithreading - don't care

Single core - don't care

720p gaming - who plays @ 720p ... and "likely" has no place in real world discussions.

All that is relevant is how fast a CPU runs the apps they use and the games they play. And I won't pay any attention to any new release till I see it tested here... and then only in the apps I actually use and games I actually play ... chest beating and benchmark scores are meaningless. Fanbois beating their chests about the chip they like being faster at tasks they never or rarely do isn't relevant. When I looked at the 9900KF versus the 3900x test results here in TPU ... here's what I see ...

3900X kicks tail in rendering which would be relevant if my user did rendering

3900X kicks tail in game and software development which would be relevant if my user did those things

3900x shares wins in browser performance but differences are too small to observe anyway.

3900X kicks tail in scientific applications which would be relevant if my user did those things

3900x shares wins in office apps but differences (0.05 seconds) are too small to affect user experience.

3900X loses in Photoshop by 0.05 seconds... but loses by 10 seconds ... finally something that matters to my user

Skipping a few more things 99% of us don't ever do

File compression / media encoding ...also not on the list

Encoding ... user does an occasional MP3 encode and the 3900x trailing by 12 secs might be significant if it was more than an occasional thing.

3900x loses in overall 720 game performance by 7% ... as he plays at 1440p, it's entirely irrelevant

3900x loses in 1440p overall game performance by 2.5% ... not a big deal but 2.5% is an advantage more than most of what we have seen so far.

3900x loses all the power consumption comparisons ... 29 watts in gaming

3900x runs 22 C hotter

3900X doesn't OC as well

3900x is more expensive.

AMD did good w/ the 3900x .... but despite the differences in cores, IPC, die size whatever ... the only thing that matters is performance. There are many things that the 3900x does better than the 9900KF, but most users aren't doing these things. You can look at a football player and how much he can bench or how fast he can run the 40 ... but none of those things determine his value to the team, his contribution to the score or how much he gets paid.

In reversed positions AMD offered the Phenom I the TRUE quad core vs the glued together two C2D into Q6600.

Guess what we don't care what's true and what's not it's all about performance and this time around AMD are the ones who made those chiplets to work.

And for Intel it's not just going to 10/7nm because I bet that they won't be able to hit those 5 GHz on those nodes for years to come.

So for them it will be a step back at first but I'm sure they will bounce back eventually like they did with C2Duo by getting "inspired" by AMD architecture.

And guys don't forget ARM is coming 8CX ....

3900X is more well rounded for all tasks. Not just one. And more secure. The difference in price is rather small. 9900 variants should cost no more than 3700X. Not what they are now.

Heat i would say is more of an issue for Intel due to higher power consumption. 3900X does not need to OC well because out of the box it already boosts to its highest speed and with higher IPC it can afford to run at lower clocks. People need to let go of the 5Ghz or bust mentality. Remember Bulldozer was also 5Ghz. I hope no one is missing that.

Of course I would buy the CPU that wins in games - even if it gives just few fps more. It doesn't matter if the other CPU can render 3D 50% faster. Why would it? Am I really building a PC for gaming, or am I more into screenshots from Cinebench?

This is exactly the problem with many people here. They buy the wrong CPU.

Lets say you're buying a GPU for gaming and you play just 3 games that TPU - luckily - tests in their reviews.

Would you buy the GPU that wins in these 3 games or the one with better average over 20 titles?

I think this is also why some people didn't understand low popularity of 1st EPYC CPUs. They had good value and looked very competitive in many benchmarks.

But they fell significantly behind in databases. So the typical comment on gaming forums was: but it's just one task. Yeah, but it's the task that 95% of real life systems are bought for (or at least limited by).

Whenever I see an argument like this one, I ask the same question.

I assume you game, right?

What are the 3 other tasks that you frequently do on your PC? But just those that you're really limited by performance.

Because having 56 vs 60 fps in games may not seem a lot, but it's a real effect that could affect your comfort.

Most people can't name a single thing.

Some say crap like: they encode videos. A lot? Nah, few times a year, just a few GB.

I game, in emulators too, and Skylake is starting to become useless there too.

And this gap between Intel and AMD is greatly exaggerated in reviews due to low resolutions and using the fastest GPU around. I bet most of these people buying i7 or i9 for "only gaming" also do a bunch of other stuff (even if lightly threaded) and do not ever notice the minuscule performance difference with the naked eye vs AMD. This is not an FX vs Sandy Bridge or Ryzen 1xxx vs 7700K situation any more where you can easily tell the difference. Since AMD is so close in performance and much better in nearly everything else people are buying AMD 9 to 1 compared to Intel.

Plus there is the matter of priorities. For gaming the GPU is always #1. A person with a cheaper R7 3700X and a RTX 2080S will always achieve better performance than the next guy with i9 9900KS with a RTX 2070S. The only case where getting the i9 for gaming makes any sense is when money is not a problem and the person already owns a 2080 Ti.

The one that gets the better average. Obviously. I won't be playing those 3 games forever. Case in point: AMD cards that are really fast in some games like Dirt Rally 4 or Forza Horizon. These are outlier results. I can't and won't base my purchase on one off results that are not representative of overall performance.

Is not everything performance limited?

I would say web browser is very much performance limited. I noticed massive speed boost when launching Firefox after upgrading from 2500K to 3800X. Going to 3900X or 3950X i would be able to give Firefox even more threads to work with.

Also i feel like im IO limited and need faster PCI-E 4.0 NVME SSD to replace my SATA SSD. Just waiting on Samsung to announce their client drives next year. Current Phison controller based drives are just a stopgap and not very compelling.

Also network speed is becoming a major bottleneck for me. What can i say - VDSL 20/5 just does not cut it for me. Ideally i would upgrade to symmetrical 300/300 or 500/500 speed. 1G/1G sounds nice but then the web itself would become a bottleneck.

There are Xeon-Ws that parallel the HEDT 2066 platform yes.

There are also Xeon E's on the 1151 socket.

So desktop platform intel has whatever-lake 2-chan mem

HEDT refresh-lake 4chan mem

3647 stillnotfrozen-lake 6 chan mem

BGA abomination Glued-lake 12-chan mem

I saw many tests done on channels and from 2 to 4 there is a difference in synthetic but real applications don't really increase in speed

I think if intel would have unganged it could be an improvement with more channels

Other than that, big yawn. More 14nm. I assume more 200-300W power consumption with AVX, and probably impossible to fully stresstest your OCs unless you hit the super golden sample stuff. It's kind of hilarious to see so many people on the web complaining about how their 9900K throttles at 5GHz MCE/OC if they dare to try Prime95 or LinpackX.

The sad part is that I saved money even for what I assumed to be a 8/16 9700K. When I saw it's now an i9, I kept the cash. Looks like I'll keep the cash even longer, which is fine, until we get a CPU that you can play with and tweak without the caveats of the Ryzen platform (like lower clocks to go with undervolting, no OC space) or those of Intel's (super high power consumption to the point where it's impossible to cool the beast and stresstest OCs properly).

and cmon, my sandy at 5 ghz still rocks. dont bash sandy :D