Friday, October 16th 2020

NVIDIA Updates Video Encode and Decode Matrix with Reference to Ampere GPUs

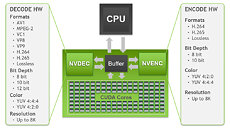

NVIDIA has today updated its video encode and decode matrix to include the latest Ampere GPU family. The matrix is a table listing the video encoding and decoding standards supported by each NVIDIA GPU, going back as far as the Maxwell generation of graphics cards. It is a useful reference: customers can check whether their existing or upcoming GPUs support a specific codec standard they need for video playback or encoding. With this update, Ampere GPUs now appear in the table.

For example, the table shows that the Ampere-based GPUs support HEVC B-frame encoding while retaining support for all of the encoding standards of previous generations. On the decoding side, the Ampere lineup now supports AV1 in 8-bit and 10-bit formats, in addition to all of the formats supported by previous generations. For a more detailed look at the table, please visit NVIDIA's website.
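To illustrate the kind of lookup the matrix enables, here is a minimal sketch that models it as a nested dictionary. It is populated only with the Ampere entries mentioned above; the names `SUPPORT_MATRIX` and `supports` are hypothetical, and the real NVIDIA matrix covers many more GPUs and formats.

```python
from __future__ import annotations

# Hypothetical, deliberately partial model of NVIDIA's codec support matrix.
# Only the Ampere entries named in the article are included.
SUPPORT_MATRIX: dict[str, dict[str, set[str]]] = {
    "Ampere": {
        "encode": {"HEVC B-frames"},
        "decode": {"AV1 8-bit", "AV1 10-bit"},
    },
}


def supports(generation: str, direction: str, fmt: str) -> bool:
    """Return True if the table lists `fmt` under `direction` for `generation`.

    Unknown generations or directions count as "not listed" instead of
    raising an error, because this table is intentionally incomplete.
    """
    return fmt in SUPPORT_MATRIX.get(generation, {}).get(direction, set())


if __name__ == "__main__":
    print(supports("Ampere", "decode", "AV1 10-bit"))  # True
    print(supports("Ampere", "encode", "AV1 8-bit"))   # False: AV1 is listed only under decode
```

Treating missing entries as `False` keeps the sketch safe to query for generations it does not model, but it also means "not listed here" should not be read as "unsupported" in the real matrix.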

Source: NVIDIA Video Encode and Decode Matrix

30 Comments on NVIDIA Updates Video Encode and Decode Matrix with Reference to Ampere GPUs

At some point, a single-PC configuration for video encoding will become a reality.

Besides, many CPU-intensive tasks (rendering, for example, and I could name some others) are not exactly multitasking-friendly...

Because I don't want to be misunderstood again, I'll stop this pointless discussion now. I made a suggestion; it's up to the staff to decide whether it is worthy or representative, and I'm fine either way.