Friday, October 16th 2020

NVIDIA Updates Video Encode and Decode Matrix with Reference to Ampere GPUs

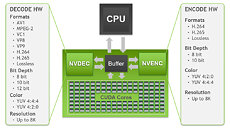

NVIDIA has today updated its video encode and decode matrix with references to the latest Ampere GPU family. The matrix is a table of the video encoding and decoding standards supported by different NVIDIA GPUs. It goes back as far as the Maxwell generation of NVIDIA graphics cards and shows which video codecs each generation supports. That makes it a useful reference tool, as customers can check whether their existing or upcoming GPUs support a specific codec standard they need for video playback. With this update, Ampere GPUs are now included in the matrix.

For example, the table shows that, while supporting all of the encoding standards of previous generations, Ampere-based GPUs feature support for HEVC B-frames. For decoding, the Ampere lineup now includes support for AV1 in 8-bit and 10-bit formats, while also supporting all of the previous-generation formats. For a more detailed look at the table, please go to NVIDIA's website here.

Source:

NVIDIA Video Encode and Decode Matrix


30 Comments on NVIDIA Updates Video Encode and Decode Matrix with Reference to Ampere GPUs

AMD RDNA2 graphics cards will provide hardware acceleration for the AV1 (AOMedia Video 1) video codec.

Fascinating, too.

Still no 10-bit 4:2:2 hardware decoding.

So f*kin BRILLIANT

Movies and series from Blu-ray, or from practically all streaming services, should be 4:2:0, while RGB/no subsampling is 4:4:4.

Why invest in a sector that brings no profit (video encoding)?

[This thesaurus-like sentence is because the last time I made a suggestion I was misunderstood, and I want to be clear this time: it's just a suggestion.]

I'm sure that the staff knows and understands AV1, but I'll put a couple of lines about it, for people not so familiar with codecs.

AV1 is, in my opinion, The Next God of video compression codecs. x264 is quite old (but really fast to encode, real-time in most cases). The same goes for x265/HEVC, which is several times slower to encode but gives considerably better quality. VP9 (made by Google, used partially on YouTube) offers even better quality but is much slower to encode; it's the newest codec in relatively wide use, and... it's from 2013.

AV1 is a huge step forward, but (guess what) it is slow to decode, though almost anything can handle it, even with just a software decoder. In fact, a hardware decoder just takes some load off the CPU (if we're using anything reasonably capable; mobile phones can do it, if I remember correctly). Encoding, however, is painfully slow and far, far away from real-time. AV1-compressed videos can be found in certain dedicated places on YouTube.

Who needs this test? Well, people who are into streaming and people who encode lots of uncompressed material. If the speed problem is solved, probably everyone would use AV1 in some way (note that we can't watch many AV1-compressed files, because encoding takes the people who do it far too much time). The same applies to VP9, to a point. In a way, it's of more importance than, say, Cinebench.

General note: I'm aware of the current problems with finding a good encoder, but I believe an adequate solution will be found soon...

I am 90% certain the 960 Ti exists.....

...Meanwhile people who use their graphics cards for a living would quite like to see progress made on these non-gamer features.

Now, calling corporations like Nvidia “ngreedia” merely demonstrates your immaturity and complete lack of understanding of economics. You know, the world of grownups. It’s nice that you were able to share with us though.

Oh, and as for the “you know nothing about me” bit: I don’t play Fortnite and never will. I guess that was your go-to for obvious reasons. If you use gaming GPUs for your living, you’re doing it wrong... unless you’re playing Fortnite professionally. ;)

Same goes for Intel CPUs, which have hardware support for both encoding and decoding.

As for decoding, hardware acceleration on the GPU so far just lessens the burden on the CPU. Virtually anything can decode a file in real time; the load is simply bigger without hardware support. Then again, both AMD and NVIDIA support hardware decoding, but we don't know how much it helps. The only results I found were for the 3080 (I can't remember which CPU): utilization dropped from ~90% to some 50-70%, though not all cores were utilized.

Perhaps it would be interesting to test whether the 16-core 5950X can decode faster than Intel's offerings with hardware support, or how many FPS each of them achieves (though I doubt any of them will reach real-time).
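As a rough illustration of how such a decode comparison could be run, here is a minimal sketch that only builds FFmpeg command lines, one for software decoding and one using `-hwaccel cuda` (NVIDIA's NVDEC). It assumes an FFmpeg build with CUDA/NVDEC support; the file name `sample_av1.mkv` and the function `decode_benchmark_cmd` are hypothetical. `-benchmark` makes FFmpeg report timing at the end, and `-f null -` discards the decoded frames so mostly decoding is measured.

```python
import shlex


def decode_benchmark_cmd(input_path: str, use_hw: bool) -> list[str]:
    """Build a hypothetical FFmpeg command that decodes a file and discards the frames."""
    # -benchmark prints timing information when FFmpeg finishes.
    cmd = ["ffmpeg", "-hide_banner", "-benchmark"]
    if use_hw:
        # Request NVIDIA hardware decoding via the CUDA hwaccel (needs a CUDA-enabled build).
        cmd += ["-hwaccel", "cuda"]
    # -an skips audio; "-f null -" writes no output file.
    cmd += ["-i", input_path, "-an", "-f", "null", "-"]
    return cmd


if __name__ == "__main__":
    # Print both variants; run them with subprocess.run(cmd) on a machine with FFmpeg.
    print(shlex.join(decode_benchmark_cmd("sample_av1.mkv", use_hw=False)))
    print(shlex.join(decode_benchmark_cmd("sample_av1.mkv", use_hw=True)))
    # second line: ffmpeg -hide_banner -benchmark -hwaccel cuda -i sample_av1.mkv -an -f null -
```

Comparing the reported times and CPU utilization of the two runs on the same file would give the kind of CPU-versus-hardware numbers discussed above.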

VP9 is considerably faster, and FFmpeg supports both.

I realize this isn't something everybody needs, but it means something to streamers and to people who compress raw video material. AV1 gives considerably better quality than VP9 at a lower bitrate, and VP9 likewise beats x265. Currently, most real-time encoding is done with x264, which is rather old (VP9 is from 2013, I think, and AV1 is from 2018; x264 is ancient).

Just an idea: encoding is a highly CPU-intensive test, and hardware does help, but only up to a point.
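An encoding test along those lines could be scripted roughly as below. This is a sketch under stated assumptions: it assumes an FFmpeg build that includes the `libx264`, `libx265`, `libvpx-vp9` and `libaom-av1` software encoders, and the names `ENCODERS`, `encode_cmd`, `time_encode` and the file names are hypothetical. Note that CRF scales differ (0-51 for x264/x265, 0-63 for VP9/AV1), so equal CRF values do not mean equal quality.

```python
import subprocess
import time

# Hypothetical mapping from codec to FFmpeg software encoder arguments.
ENCODERS = {
    "h264": ["-c:v", "libx264"],
    "hevc": ["-c:v", "libx265"],
    # libvpx-vp9 and libaom-av1 use constant-quality mode when -b:v is 0.
    "vp9": ["-c:v", "libvpx-vp9", "-b:v", "0"],
    "av1": ["-c:v", "libaom-av1", "-b:v", "0"],
}


def encode_cmd(codec: str, src: str, dst: str, crf: int) -> list[str]:
    """Build an FFmpeg command that encodes src to dst with the given CRF."""
    if codec not in ENCODERS:
        raise ValueError(f"unsupported codec: {codec}")
    # -y overwrites dst; -an drops audio so only video encoding is timed.
    return ["ffmpeg", "-hide_banner", "-y", "-i", src,
            *ENCODERS[codec], "-crf", str(crf), "-an", dst]


def time_encode(cmd: list[str]) -> float:
    """Run one encode and return wall-clock seconds (requires ffmpeg on PATH)."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True, capture_output=True)
    return time.perf_counter() - start


if __name__ == "__main__":
    for codec in ENCODERS:
        print(encode_cmd(codec, "raw_clip.y4m", f"out_{codec}.mkv", 30))
```

Calling `time_encode` on each command for the same raw clip would show how much slower VP9 and AV1 are than x264 and x265 on a given CPU.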

Back in the day, I did some testing on VP9, but the hardware was much less advanced (I mean CPUs only; I don't have a range of CPUs to test, and hardware support was non-existent at that time).

I don't know how well FFmpeg is optimized for Intel, AMD or NVIDIA, either...