Monday, February 4th 2008

ATI Radeon HD 3870 X2 CrossFireX Tested

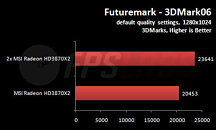

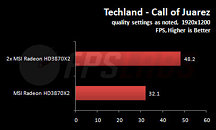

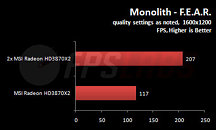

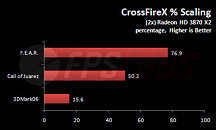

Admittedly, this isn't the first review of AMD's new 3870 X2 running in CrossFireX, but it does seem to give a better indication of real-world performance. As in the previous test, this article shows unimpressive 3DMark scores, but as the author is quick to point out, 3DMark06 is known to depend quite heavily on the CPU, so it doesn't always give a reliable indication of real-world GPU performance. More interesting are the benchmarks of Call of Juarez and F.E.A.R., which show performance increases of around 50% and 75% respectively. It's important to remember that AMD's CrossFireX driver is still in development, but these figures should give a rough idea of what to expect. You can read FPSLABS' full article here.

Source:

FPSLABS

24 Comments on ATI Radeon HD 3870 X2 CrossFireX Tested


I plan to eventually have CrossFireX, once I've got the most out of a single card. If they don't release a 2nd revision of the card that uses an updated PCI-bridge chip I may just stick with one. Depends...

On second thought, I detect extreme bottlenecking of everything.

With the addition of my second card, on average I only saw a 15-25% increase in performance (whereas I was hoping to see the typical 25-35% increase), depending on the app and how GPU intensive it was.

Damn, I can't wait to get away from this CPU, though :banghead:

Still, though, from what all we've seen, there's some massive potential behind these cards - ATI's prob burning the midnight oil on them.

I really want one of these cards. I hope I manage to sell my Pro and that the GDDR4 version comes out soon. I just won't settle for GDDR3, I'm sorry.

Back on topic, how about 3/4x 3870 X2?

What systems have you tested this theory on?

And an extra 12fps is nice if it went from 12fps to 24fps, but I doubt that it did. That would show CPU bottlenecking. Going from 100fps to 112fps, however, does not.
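To put numbers on the comparison above, here is a minimal sketch of the arithmetic: the same 12fps gain is a very different relative improvement depending on the starting frame rate. The function name `relative_gain` is made up for illustration, and the frame rates are the hypothetical ones from the comment, not measured results.

```python
def relative_gain(before_fps: float, after_fps: float) -> float:
    """Return the percentage change from before_fps to after_fps."""
    if before_fps <= 0:
        raise ValueError("before_fps must be positive")
    return (after_fps - before_fps) / before_fps * 100.0


# Hypothetical frame rates taken from the comment above, not benchmark data.
for before, after in [(12, 24), (100, 112)]:
    gain = relative_gain(before, after)
    print(f"{before} -> {after} fps: +{after - before} fps, {gain:.0f}% faster")
```

Both cases add 12fps, but the first doubles the frame rate while the second improves it by only about 12%.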