Primemas Announces Availability of Customer Samples of Its CXL 3.0 SoC Memory Controller

Primemas Inc., a fabless semiconductor company specializing in chiplet-based SoC solutions through its Hublet architecture, today announced the availability of customer samples of the world's first Compute Express Link (CXL) 3.0 memory controller. Primemas has been delivering engineering samples and development boards to select strategic customers and partners, who have played a key role in validating the performance and capabilities of Hublet against alternative CXL controllers. Building on this successful early engagement, Primemas is now pleased to announce that Hublet product samples are ready for shipment to memory vendors, customers, and ecosystem partners.

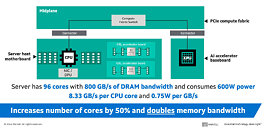

While conventional CXL memory expansion controllers are limited by fixed form factors and capped DRAM capacities, Primemas leverages cutting-edge chiplet technology to deliver unmatched scalability and modularity. At the core of this innovation is the Hublet—a versatile building block that enables a wide variety of configurations.