NVIDIA to Showcase AI-generated "Large Nature Model" at GTC 2024

The ecosystem around NVIDIA's technologies has always been verdant, but this is absurd. After a stunning premiere at the World Economic Forum in Davos, immersive artworks based on Refik Anadol Studio's Large Nature Model will come to the U.S. for the first time at NVIDIA GTC. Offering a deep dive into the synergy between AI and the natural world, Anadol's multisensory work, "Large Nature Model: A Living Archive," will be situated prominently on the main concourse of the San Jose Convention Center, where the global AI event takes place March 18-21.

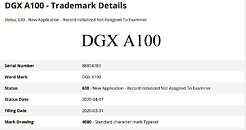

Fueled by NVIDIA's advanced AI technology, including powerful DGX A100 stations and high-performance GPUs, the exhibit offers a captivating journey through our planet's ecosystems with stunning visuals, sounds and scents. These scenes are rendered in breathtaking clarity across screens with a combined resolution of 12.5 million pixels, immersing attendees in an unprecedented digital portrayal of Earth's ecosystems.

Refik Anadol, recognized by The Economist as "the artist of the moment," has emerged as a key figure in AI art. His work, notable for its use of data and machine learning, places him at the forefront of a generation pushing the boundaries between technology, interdisciplinary research and aesthetics. Anadol's influence reflects a wider movement in the art world toward embracing digital innovation, setting new precedents in how art is created and experienced.