NVIDIA DGX A100 is its "Ampere"-Based Deep-Learning Powerhouse

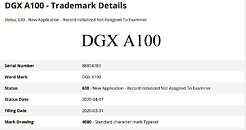

NVIDIA will give its DGX line of pre-built deep-learning research workstations its next major update in the form of the DGX A100. This system will likely pack a number of the company's upcoming Tesla A100 scalar compute accelerators, based on its next-generation "Ampere" architecture and "GA100" silicon. The A100 came to light through fresh trademark applications by the company. Specifications and performance numbers are not yet known. For reference, the "Volta" based DGX-2 has up to sixteen "GV100" based Tesla boards, adding up to 81,920 CUDA cores and 512 GB of HBM2 memory. One can expect NVIDIA to beat these counts. The leading "Ampere" part could be HPC-focused, featuring large CUDA and tensor core counts, besides exotic memory such as HBM2E. We should learn more about it at the upcoming GTC 2020 online event.
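
For readers who want to check the DGX-2 totals quoted above, here is a minimal Python sketch of the arithmetic. It assumes the per-board figures of the 32 GB Tesla V100 (5,120 CUDA cores and 32 GB of HBM2 per "GV100" board); the constant and function names are made up for illustration. Nothing here says anything about the DGX A100, whose specifications are still unknown.

```python
# Per-board figures for the 32 GB Tesla V100 ("GV100") used in the DGX-2.
# These are assumptions brought in for illustration; the article only
# quotes the system-wide totals.
CUDA_CORES_PER_GV100 = 5120
HBM2_GB_PER_GV100 = 32
BOARDS_IN_DGX2 = 16


def system_totals(boards, cores_per_board, memory_gb_per_board):
    """Return (total CUDA cores, total memory in GB) for a multi-GPU system."""
    return boards * cores_per_board, boards * memory_gb_per_board


total_cores, total_memory_gb = system_totals(
    BOARDS_IN_DGX2, CUDA_CORES_PER_GV100, HBM2_GB_PER_GV100
)
print(f"DGX-2: {total_cores:,} CUDA cores, {total_memory_gb} GB HBM2")
```

Once NVIDIA publishes per-board figures for the A100, the same helper could be reused with those numbers to compare the two systems.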