AMD Powers El Capitan: The World's Fastest Supercomputer

Today, AMD showcased its ongoing high-performance computing (HPC) leadership at Supercomputing 2024 by powering the world's fastest supercomputer for the sixth straight Top 500 list.

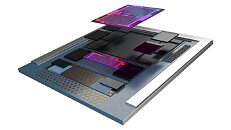

The El Capitan supercomputer, housed at Lawrence Livermore National Laboratory (LLNL), powered by AMD Instinct MI300A APUs, and built by Hewlett Packard Enterprise (HPE), is now the fastest supercomputer in the world, with a High-Performance Linpack (HPL) score of 1.742 exaflops on the latest Top 500 list. El Capitan and the Frontier system at Oak Ridge National Lab placed 18th and 22nd, respectively, on the Green 500 list, showcasing the impressive capabilities of AMD EPYC processors and AMD Instinct GPUs to drive leadership performance and energy efficiency for HPC workloads.
