Lightmatter Unveils Six‑Chip Photonic AI Processor with Incredible Performance/Watt

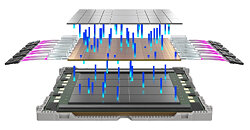

Lightmatter has launched its latest photonic processor, which the company positions as a fundamental shift from traditional computing architectures. The new system integrates six chips into a single 3D-packaged module, combining photonic tensor cores and control dies that work in concert to accelerate AI workloads. Detailed in a recent Nature publication, the processor combines approximately 50 billion transistors with one million photonic components interconnected via high-speed optical links. The launch comes as conventional scaling approaches plateau: Moore's Law, Dennard scaling, and the doubling of DRAM capacity are all running into physical limits on what a given silicon area can deliver. To work around these barriers, Lightmatter's design implements an adaptive block floating point (ABFP) format with analog gain control. During matrix operations, weights and activations are grouped into blocks that share a single exponent, set by the largest-magnitude value in the block, minimizing quantization error.
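To make the shared-exponent idea concrete, here is a minimal Python sketch of plain block floating point quantization. It is an illustration only, not Lightmatter's implementation: the function name `block_fp_quantize`, the block size of 4, and the 8-bit signed mantissa are all assumptions chosen for readability, and the sketch does not model the adaptive or analog gain control parts of ABFP.

```python
import math

def block_fp_quantize(values, block_size=4, mantissa_bits=8):
    """Quantize values block by block, one shared exponent per block.

    Hypothetical helper for illustration; block_size and mantissa_bits
    are assumed values, not figures from the article.
    """
    out = []
    limit = 2 ** (mantissa_bits - 1) - 1  # largest signed mantissa
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        peak = max(abs(v) for v in block)
        if peak == 0.0:
            # An all-zero block needs no exponent.
            out.extend([0.0] * len(block))
            continue
        # Shared exponent from the largest magnitude in the block:
        # peak = m * 2**exponent with 0.5 <= m < 1.
        exponent = math.frexp(peak)[1]
        # Spacing between representable values in this block.
        step = 2.0 ** (exponent - (mantissa_bits - 1))
        for v in block:
            mantissa = max(-limit, min(limit, round(v / step)))
            out.append(mantissa * step)
    return out

print(block_fp_quantize([1.0, 0.5, 0.25, 0.001]))
print(block_fp_quantize([100.0, 3.0, -2.0, 0.1]))
```

In this sketch, a small value that shares a block with a much larger one is rounded to zero, because the block's step size is set by the largest magnitude. That is the kind of error that careful block grouping and gain adjustment are presumably meant to limit.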

The processor achieves 65.5 trillion 16-bit ABFP operations per second (roughly comparable to 16-bit TOPS) while consuming just 78 W of electrical power and 1.6 W of optical power. What sets this processor apart is its ability to run unmodified AI models with near-FP32 accuracy. The system successfully executes full-scale models, including ResNet for image classification, BERT for natural language processing, and DeepMind's Atari reinforcement learning algorithms, without specialized retraining or quantization-aware techniques. This represents the first commercially available photonic AI accelerator capable of running off-the-shelf models without fine-tuning. By computing with light, the architecture aims to address the prohibitive cost and energy demands of next-generation GPUs. With native integration for popular AI frameworks like PyTorch and TensorFlow, Lightmatter hopes for immediate adoption in production environments.
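The headline's performance-per-watt claim can be worked out from the figures above. The short Python sketch below does the arithmetic; the helper name `tops_per_watt` is hypothetical, and adding the electrical and optical power into one budget is an assumption about how the total should be counted, not something the article states.

```python
def tops_per_watt(tera_ops_per_second, electrical_w, optical_w=0.0):
    """Return tera-operations per second per watt of total power.

    Hypothetical helper; summing electrical and optical power is an
    assumption made for this back-of-the-envelope estimate.
    """
    return tera_ops_per_second / (electrical_w + optical_w)

# Figures quoted in the article.
TERA_OPS = 65.5      # 16-bit ABFP tera-operations per second
ELECTRICAL_W = 78.0  # electrical power in watts
OPTICAL_W = 1.6      # optical power in watts

efficiency = tops_per_watt(TERA_OPS, ELECTRICAL_W, OPTICAL_W)
# Energy per operation in picojoules: watts / (tera-ops/s) = pJ per op.
picojoules_per_op = (ELECTRICAL_W + OPTICAL_W) / TERA_OPS

print(f"{efficiency:.2f} TOPS/W")        # 0.82 TOPS/W
print(f"{picojoules_per_op:.2f} pJ/op")  # 1.22 pJ/op
```

Under that assumption, the quoted numbers work out to a little over 0.8 ABFP TOPS per watt, or roughly 1.2 picojoules per operation.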
