IBM z16 and LinuxONE 4 Get Single Frame and Rack Mount Options

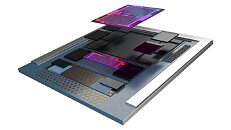

IBM today unveiled new single frame and rack mount configurations of IBM z16 and IBM LinuxONE 4, extending the platforms' capabilities to a broader range of data center environments. Based on IBM's Telum processor, the new options are designed with sustainability in mind for highly efficient data centers, helping clients adapt to a digitized economy and ongoing global uncertainty.

Introduced in April 2022, the IBM z16 multi frame has helped transform industries with real-time AI inferencing at scale and quantum-safe cryptography. IBM LinuxONE Emperor 4, launched in September 2022, features capabilities that can reduce both energy consumption and data center floor space while delivering the scale, performance and security that clients need. The new single frame and rack mount configurations expand client infrastructure choices and help bring these benefits to data center environments where space, sustainability and standardization are paramount.
