Saturday, November 13th 2010

Disable GeForce GTX 580 Power Throttling using GPU-Z

NVIDIA shook the high-end PC hardware industry earlier this month with the surprise launch of its GeForce GTX 580 graphics card, which extended NVIDIA's lead in single-GPU performance. It also delivered some great performance-per-Watt improvements over the previous generation. The reference design board, however, uses clock speed throttling logic that reduces clock speeds when an extremely demanding 3D application such as Furmark or OCCT is run. While this is a novel way to protect components, saving consumers from potentially permanent damage to the hardware, it is a gripe for expert users, enthusiasts and overclockers who know what they're doing.

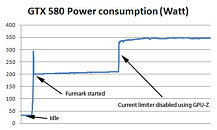

GPU-Z developer and our boss W1zzard has devised a way to make disabling this protection accessible to everyone (who knows what they're dealing with), and came up with a nifty new feature for GPU-Z, our popular GPU diagnostics and monitoring utility, that can disable the speed throttling mechanism. The feature is a new command-line argument for GPU-Z, "/GTX580OCP". Start the GPU-Z executable with that argument (within Windows, using Command Prompt or a shortcut), and it will disable the clock speed throttling mechanism. For example, "X:\gpuz.exe /GTX580OCP". Throttling will stay disabled for the remainder of the session, so you can close GPU-Z. It will be enabled again on the next boot.

As an obligatory caution, be sure you know what you're doing. TechPowerUp is not responsible for any damage caused to your hardware by disabling that mechanism. Running the graphics card outside of its power specifications may result in damage to the card or motherboard.

We have a test build of GPU-Z (which otherwise carries exactly the same feature set as GPU-Z 0.4.8). We also ran a power consumption test on our GeForce GTX 580 card, demonstrating how disabling that logic affects power consumption.
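For readers who prefer to script the launch rather than type it into Command Prompt, the step described above could be sketched roughly as follows. This is only an illustration: the helper names `build_command` and `launch` are hypothetical, the path `X:\gpuz.exe` is the placeholder from the example above, and the only thing taken from GPU-Z itself is the "/GTX580OCP" argument. The same caution applies to running it.

```python
import subprocess
import sys
from pathlib import PureWindowsPath

# The only GPU-Z argument used here is the one named in the article.
OCP_SWITCH = "/GTX580OCP"


def build_command(gpuz_path):
    """Return the argument list for starting GPU-Z with the OCP switch.

    gpuz_path is wherever you saved the test build; this helper is
    hypothetical and only assembles the command, it does not run it.
    """
    return [str(PureWindowsPath(gpuz_path)), OCP_SWITCH]


def launch(gpuz_path):
    """Start GPU-Z with the switch and return its exit code (Windows only)."""
    if sys.platform != "win32":
        raise RuntimeError("GPU-Z is a Windows utility")
    return subprocess.call(build_command(gpuz_path))


if __name__ == "__main__":
    # Placeholder path from the article; replace it with your own location.
    # This only prints the command; call launch() yourself to run it.
    print(" ".join(build_command(r"X:\gpuz.exe")))
```

Keeping the command assembly separate from the launch makes it easy to check what will be executed before actually disabling the throttling logic.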

DOWNLOAD: TechPowerUp GPU-Z GTX 580 OCP Test Build

116 Comments on Disable GeForce GTX 580 Power Throttling using GPU-Z

- Are there any performance gains when not limiting?

- Is it worth it to remove the limiter?

Funny though, 350w makes it seem like the card isn't any more power efficient at all.

Back on topic: nice performance/watt ratio for this 580, really :laugh: compared to 'old' gtx480... 480 has no power throttling cheat, right?

www.youtube.com/watch?v=eYJh5YVgDZE

We put a wood block under the throttle.

As was advised, user beware is the relevant issue here.

This throttling system wasn't NVIDIA saying the cards can't go higher than what they rated them for, it's NVIDIA just trying to make the card look less power hungry than it is.

8 cpu board.

So your 16 phases... do you need them? I've taken world records on 4! Average Joe doesn't need so many phases, all that crap.

Motherboards can be cheap, and stock performance rarely differs. For overclocking, on the other hand, you may need something more expensive, and more so if you add CF and SLI.

Back on topic, it's funny though.

350 W

ATI manages to push a dual-GPU card, with lowered perf/watt due to scaling, and with a double set of memory, one of them doing nothing, and yet it has better perf/watt.

I hope ATI's engineers are getting a little bonus!

As W1zzard said to me earlier and you did just now, the card can consume any amount of power and run just fine with it, as long as the power circuitry and the rest is designed for it.

And that's the rub.

Everything is built to a price. While those POWER computers are priced to run flat out 24/7 (and believe me they really charge for this capability), a power-hungry consumer grade item, including the expensive GTX 580, is not. So, the card will only gobble huge amounts of power for any length of time when an enthusiast overclocks it and runs something like FurMark on it. Now, how many of us do you think there are to do this? Only a tiny handful. Heck, even out of the group of enthusiasts, only some of them will ever bother to do this. The rest of us (likely me included) are happy to read the articles about it and avoid unnecessarily stressing out our expensive graphics cards. I've never overclocked my current GTX 285 for example. I did overclock my HD 2900 XT though.

The rest of the time, the card will be either sitting at the desktop (hardly taxing) or running a regular game at something like 1920x1080, which won't stress it anywhere near this amount. So nvidia are gonna build it to withstand this average stress reliably. Much more and reliability drops significantly.

The upshot is that they're gonna save money on the quality of the motherboard used for the card, its power components and all the other things that would take the strain when it's taxed at high power. This means that Mr Enthusiast over here at TPU is gonna kill his card rather more quickly than nvidia would like and generate lots of unprofitable RMAs. Hence, they just limit the performance and are done with it. Heck, it also helps to guard against the clueless wannabe enthusiast that doesn't know what he's doing and maxes the card out in a hot, unventilated case. ;)

Of course, now that there's a workaround, some enthusiasts are gonna use it...

And dammit, all this talk of GTX 580s is really making me want one!! :)

Or is it just about abnormal power usage by the VRMs, to protect them from burning out? A few GTXs were also reported with fried VRMs using Furmark.

EDIT: You might also want to read my post, the one before yours that explains why this kind of limiter is being put in.

And I don't have 16 power phases on my motherboard if that is what you were referring to. Power phases mean nothing. It's how they are implemented. My Gigabyte X58A-UD3R with 8 analog power phases can overclock HIGHER than my friend's EVGA X58 Classified with 10 digital power phases.

Exactly.

The built to a price argument still stands though.

If anything, adding throttling has added to the price of the needed components.

It is software based, it detects OCCT and Furmark and that is it. It will not affect any other program at all. Anyone remember ATi doing this with their drivers so that Furmark wouldn't burn up their cards?

Ummmm...there most certainly has been.

I really have a hard time believing that they did it to make power consumption look better. Any reviewer right away should pick up on the fact that under normal gaming load the card is consuming ~225w and under Furmark it is only consuming ~150w. Right there it should throw up a red flag, because Furmark consumption should never be drastically lower, or lower at all, than normal gaming numbers. Plus the performance difference in Furmark would be pretty evident to a reviewer that sees Furmark performance numbers daily. Finally, with the limiter turned off the power consumption is still lower, and for a Fermi card that is within 5% of the HD5970 to have pretty much the same power consumption, that is an impressive feat that doesn't need to be artificially enhanced.