Saturday, November 13th 2010

Disable GeForce GTX 580 Power Throttling using GPU-Z

NVIDIA shook the high-end PC hardware industry earlier this month with the surprise launch of its GeForce GTX 580 graphics card, which extended the single-GPU performance lead NVIDIA has been holding. It also delivered notable performance-per-Watt improvements over the previous generation. The reference design board, however, uses clock speed throttling logic that reduces clock speeds when an extremely demanding 3D application such as Furmark or OCCT is run. While this is a novel way to protect components, saving consumers from potentially permanent damage to the hardware, it is a gripe for expert users, enthusiasts and overclockers, who know what they're doing.

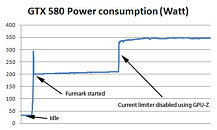

GPU-Z developer and our boss W1zzard has devised a way to make disabling this protection accessible to everyone (who knows what they're dealing with): a nifty new feature for GPU-Z, our popular GPU diagnostics and monitoring utility, that disables the clock speed throttling mechanism. The feature is a new command-line argument, "/GTX580OCP". Start the GPU-Z executable within Windows with that argument, either from Command Prompt or through a shortcut, and it will disable the throttling. For example: "X:\gpuz.exe /GTX580OCP". Throttling stays disabled for the remainder of the session, so you can close GPU-Z afterwards. It will be enabled again on the next boot.

As an obligatory caution, be sure you know what you're doing. TechPowerUp is not responsible for any damage caused to your hardware by disabling that mechanism. Running the graphics card outside of its power specifications may result in damage to the card or motherboard.

We have a test build of GPU-Z (which otherwise carries the exact same feature set as GPU-Z 0.4.8). We also ran a power consumption test on our GeForce GTX 580 card demonstrating how disabling that logic affects power consumption.
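For readers who would rather script the launch than type it into Command Prompt, here is a minimal Python sketch. It only assembles and starts the same command line quoted in the article. The helper names `build_command` and `launch` are made up for illustration, and the "X:" drive letter is the article's placeholder, so substitute the real location of the executable.

```python
import subprocess
import sys
from pathlib import PureWindowsPath

# The command-line argument named in the article.
OCP_FLAG = "/GTX580OCP"


def build_command(gpuz_path):
    """Return the argument list for starting GPU-Z with the OCP argument.

    gpuz_path is wherever the test build was saved (hypothetical input).
    """
    return [str(PureWindowsPath(gpuz_path)), OCP_FLAG]


def launch(gpuz_path):
    """Start GPU-Z with the argument; GPU-Z itself only runs on Windows."""
    if sys.platform != "win32":
        raise RuntimeError("GPU-Z is a Windows utility")
    return subprocess.Popen(build_command(gpuz_path))


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch is safe to try.
    print(" ".join(build_command(r"X:\gpuz.exe")))
```

Calling `launch(...)` has the same effect as the shortcut approach, and the same caution applies: throttling stays off until the next boot.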

DOWNLOAD: TechPowerUp GPU-Z GTX 580 OCP Test Build

116 Comments on Disable GeForce GTX 580 Power Throttling using GPU-Z

if you buy a $30 motherboard then the boss of those guys told them to save 5 cents to meet their price target -> possible damage when drawing too much current for a long time

So you are saying the limiter is basically there to prevent crappy boards from burning out, not for the benefit of the card itself? (Which is pretty much what I suspected from the beginning)

www.anandtech.com/show/2841/11

350 watts @ 12 V = about 30 amps, minus the 75-100 W that the PCIe bus can supply, so you are right on the edge of what the wires from your PSU can handle.

Most wires are only rated for about 8-10 amps; past that, they melt.
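The arithmetic in the comment above can be checked with a short sketch. The 350 W and 75 W figures come from the comment itself. The number of 12 V wires sharing the load is not stated anywhere in the thread, so `n_wires` below is a purely illustrative assumption; check your own PSU's cabling.

```python
def current_amps(power_watts, volts=12.0):
    """Current drawn at a given power and voltage (I = P / V)."""
    return power_watts / volts


def per_wire_amps(power_watts, n_wires, volts=12.0):
    """Current per wire, assuming the load is shared evenly (an idealization)."""
    return current_amps(power_watts, volts) / n_wires


TOTAL_W = 350.0  # card power quoted in the comment
SLOT_W = 75.0    # lower end of the slot power range quoted in the comment

total_a = current_amps(TOTAL_W)           # about 29.2 A in total
cable_a = current_amps(TOTAL_W - SLOT_W)  # about 22.9 A left for the PSU cables

if __name__ == "__main__":
    print(f"total: {total_a:.1f} A, via PSU cables: {cable_a:.1f} A")
    # n_wires=5 is a hypothetical count, not a figure from the thread.
    print(f"per wire with 5 wires: {per_wire_amps(TOTAL_W - SLOT_W, 5):.1f} A")
```

Whether the result is "right on the edge" depends on how many wires actually carry the load and on their rating, which is the point the following comments argue about.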

I put forth this question: when does performance outweigh the extra power usage and risk?

A quality board and a quality PSU have no problems handling these kinds of loads.

bad things will happen

unless you can find a PSU with 15-gauge wiring; most are 18-20 gauge

vCore = 1.138 V, stock fan, fan set to 85%, room temp = 25 °C. I renamed Furmark to nemesis and ran it for over 40 minutes. Here is my result:

Maximum Temp 70 °C

What more can you say :D

Is this correct?

www.techpowerup.com/reviews/HIS/Radeon_HD_6970/27.html

Thanks in advance!

//encor3