Thursday, March 22nd 2012

Did NVIDIA Originally Intend to Call GTX 680 as GTX 670 Ti?

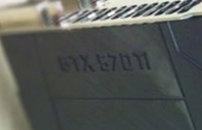

Although it doesn't matter anymore, there are several bits of evidence supporting the theory that NVIDIA originally intended for its GK104-based performance graphics card to be named "GeForce GTX 670 Ti", before deciding to go with "GeForce GTX 680" towards the end. Since the start of 2012, our industry sources have referred to the part as "GTX 670 Ti". The very first picture of the GeForce GTX 680 disclosed to the public, early this month, revealed a slightly old qualification sample, which had one thing different from the card we have with us today: the model name "GTX 670 Ti" was etched onto the cooler shroud. Our industry sources also disclosed pictures of early samples having 6+8 pin power connectors.

Next up, while NVIDIA did re-christen GTX 670 Ti to GTX 680, it was rather sloppy about it. The first picture below shows the contents of the Boardshots (stylized) folder in NVIDIA's "special place" for the media. It contains all the assets NVIDIA allows the press, retailers, and other partners to use. Assets are distributed in various formats; TIFF is a standard image format favored by print media for high-resolution images. Apart from a heavy payload, a TIFF image file allows tags that can be read by Windows Explorer, and these tags help people at the archives. The TIFF images of the GTX 680 distributed to NVIDIA's partners in the media and industry contain the tag "GTX 670 Ti".

It doesn't end there. Keen-eyed users, while browsing through NVIDIA Control Panel with their GTX 680 installed, found the 3D Vision Surround display configuration page referring to their GPU as "GTX 670 Ti". This particular image was used by NVIDIA in its 3D Vision Surround guide.

We began this article by saying that frankly, at this point, it doesn't matter. Or does it? Could it be that GK104 rocked the boardroom at NVIDIA Plex to the point where they decided that, since it's competitive with (in fact, faster than) AMD's Radeon HD 7970, it makes more business sense to sell it as "GTX 680"?

What's in a name? Well, for one, naming it "GTX 680" instead of "GTX 670 Ti" takes pressure off NVIDIA to introduce a part based on the "big chip" of the GeForce Kepler architecture (GK1x0). It could also save NVIDIA tons of R&D costs for its GTX 700 series, because it can brand GK1x0 in the GTX 700 series, invest relatively less in a dual-GK104 graphics card to ward off the threat of Radeon HD 7990 "New Zealand", and save (read: sandbag) GK1x0 for AMD's next-generation Sea Islands family based on the "Enhanced Graphics CoreNext" architecture, slated for later this year, if all goes well. Is it a case of mistaken identity? Overanalysis on our part? Or is there something they don't want you to know?

55 Comments on Did NVIDIA Originally Intend to Call GTX 680 as GTX 670 Ti?

What I am afraid of is that both manufacturers are sort of happy with the current situation. They make a lot of profit as long as prices remain this high.

* And considering what Nvidia achieved with the thing, that's hard to believe. GK110 should be ready for August this year, since it taped out in January/February. That's why the situation with it is so complex. ~5 months for a new gen?

And IMHO they are only showing part of their hand. I think they have done an amazing job with the silicon they have been passed, but I honestly believe this chip still isn't quite how they wanted it to be, and the enforced power gating and clock control is some very, very clever way of increasing yields. In my head they had a chip with more shaders per SMX and then have per-shader redundancy, i.e. cut out individual shaders, plus the ability to bin on SMX units and the forced ability to control the power. So I see a lot of high-shader-count card varieties all called roughly the same thing but clocked very differently: 680 eco, R, GT, GTX, GS, Ti. Then with reduced SMX, take ten off 680 per SMX and add a prefix per binned clock speed = less free OC all round but a good market spread.

If GK110 is possibly ready for August, either the life of the 6xx series is extended and lasts well into AMD's 8xxx series lifetime, or the 6xx series is truncated in its card rollout and the 7xx series quickly takes its place at the high end, before the end of the year.

Power control is there from the very beginning, as are the SMXs, the amount, the way they are, etc. It's not something you can change on the fly. It is what it was meant to be from the beginning. Except that it has launched at 1000 MHz instead of at 950 MHz, which was probably the plan. But then AMD released the "GHz Edition campaign" and probably the Nvidia marketing team started :banghead: "this should have been our idea!!"

That leaves no need for GK110 now; it would definitely be logical to sell them as Quadros till there is a need for the new 7xx series.

I don't think we'll see any of GK110 until AMD launches the 8000 series. The game is over at the top. Based on how the GTX 680 looks (a 100% gaming card), the big boy is reserved for Teslas, Quadros and HPC. It might be that NV is making a clearer distinction between professional and gaming.

But you're definitely wrong about it having to be designed in from the start. The shader-domain-level clocks would have to be designed in, but the GPU power-gated clocks, though most definitely designed in to some degree, can easily be added to any card with the right power control circuitry. This I know.

My only point regarding this was not that they added it, but that they have had to run tighter volt and temp control than they expected or would have liked, IMHO.

You could easily have designed in 2056 shaders with some expected to fail in every SMX. Given the high speed Nvidia are running the shaders at, this would be an intelligent and reasonable design; redundancy is what engineering does. If you pre-aligned the shaders, you could eliminate particulate damage to a shader array by disabling that row and the one fore or aft of it. Pre-designed-in redundancy: think 480, but intelligently done to guarantee/optimize yields. It seems to be their main goal.

Create a chip that's capable and very dynamic/flexible.

Dynamic, yes; flexible, probably not. It doesn't seem like it's another G92.

The truth of the naming should have been in the prerelease drivers though . . . . .

What kind of planet do we live on?? Do you really think that Nvidia would launch a "GTX 680" (the supposed real one) at 40%~60% more performance than last gen? Even this "GTX 670 Ti" is almost as powerful as a GTX 590. So, are they some kind of company which totally destroys the last gen of graphics cards on performance, making them totally obsolete?

We live on the kind of planet where money rules, so all you're trying to understand and discuss is a total waste of time.

I think that they obviously couldn't compete with AMD at the time AMD released it, so they invented all this fantasy (in case of failure), because they didn't know how fast the architecture could be. Does anybody remember that chart with the 660 Ti being 10% more powerful than the 7970? That's some big kind of shit.

Do you really believe this crappy marketing strategy?

I really love this GTX 680, but all of this makes it look unprofessional. Too much fanboyism.