Monday, December 31st 2012

Arctic Leaks Bucket List of Socket LGA1150 Processor Model Numbers

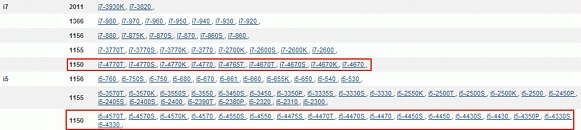

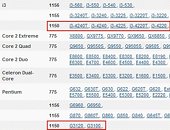

CPU cooler manufacturer Arctic (aka Arctic Cooling) may have inadvertently leaked a very long list of 4th generation Intel Core processors for the LGA1150 socket. The leak is longer than any list of Core "Haswell" processors posted so far, and includes model numbers of nine Core i7, seventeen Core i5, five Core i3, and two Pentium models. Among the Core i7 models are the already-known i7-4770K flagship chip, the i7-4770S, and a yet-unknown i7-4765T. The Core i5 list is exhaustive, and it appears that Intel wants to leave no price point unattended. The Core i5-4570K could interest enthusiasts. Compared to the Core i5 list, the LGA1150 Core i3 list is surprisingly short, indicating that Intel is serious about phasing out dual-core chips. The Pentium LGA1150 list is even shorter.

The list of LGA1150 processor models appears to have been leaked in the datasheet of one of Arctic's coolers, in the section that lists compatible processors. LGA1150 appears to have exactly the same cooler mount-hole spacing as the LGA1155 and LGA1156 sockets, so upgrading your CPU cooler shouldn't be on your agenda. Intel's 4th generation Core processor family is based on Intel's spanking new "Haswell" micro-architecture, which promises higher per-core performance and significantly faster integrated graphics than the previous generation. The new chips will be built on Intel's now-mature 22 nm silicon fabrication process, and will begin to roll out in the first half of 2013.

Source:

Expreview

58 Comments on Arctic Leaks Bucket List of Socket LGA1150 Processor Model Numbers

But too bad a new socket = different retention.

I'm also getting a strong sense of deja vu back to the Pentium 4's and Intel trying to push the clocks as high as they could reasonably go.

Then I get this knot in my stomach that computers are getting slower, not faster, because consumers aren't demanding faster ones (e.g. the explosion of tablet and smartphone sales). Then again, developers aren't really pushing for faster hardware like they did in the 1990s and early 2000s.

Maybe it's because AMD is on a crash course and Intel has nothing better to do?

So depressing. :(

Now they have tablets and phones with graphics superior to the Xbox 360 and PS3 (if you are unaware of this, check it out. One good example is the Unreal engine, but there are a few others).

These devices are much more powerful in their graphics than laptops with IGPs. I will be doing another build this year, probably my final build, and I will keep it intact to remember the days when we could build our own computers.

We're inventing ways to make computers dumber and slower (to the point they're virtualized on "clouds"), not smarter and faster (which created tons of optimism up until about 2008). Someone needs to modify AMD's logo and change it to "Gaming Devolved" and stamp it on the entire industry.

A Core i7 today is the equivalent of a supercomputer 20 years ago.

Also Minecraft is huuuggeellly overrated as a game. I'm not sure I want to call it a game. But that is OP.

Sandy Bridge was THAT GOOD!!!

it's skewing your perceptions