Friday, January 17th 2014

AMD Readies 16-core Processors with Full Uncore

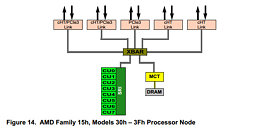

AMD released developer documentation for a new processor it is working on, and the document's wording describes a chip with 8 modules, working out to 16 cores, on a single piece of silicon, referred to as Family 15h Models 30h-3Fh. This is not to be confused with the company's Opteron 6300-series "Abu Dhabi" chips, which are multi-chip modules (MCMs) of two 8-core dies in the G34 package.

What's more, unlike the current "Abu Dhabi" and "Seoul" chips, the new silicon features a full-fledged uncore, complete with a PCI-Express 3.0 root complex integrated into the processor die. As further proof that it is a single die with 8 modules and not an MCM of two dies with 4 modules each, the document describes the die as featuring four HyperTransport links, letting it pair with three other processors in 4P multi-socket configurations. Such systems would feature a total core count of 64. It is unclear which exact micro-architecture the CPU modules are based on. AMD is without doubt designing this chip for its Opteron enterprise product stack, but it should also give us a glimmer of hope that AMD could continue to serve up high-performance client CPUs, only ones that can't be based on socket AM3+.

Source:

Planet3DNow.de


92 Comments on AMD Readies 16-core Processors with Full Uncore

The less the better, no matter if 1 or 1/x. Just my stupid opinion.

Why? An LED or LCD TV costs a lot here, and I can't invest in one at the moment. But I would buy one if I could, no doubt. Sometimes saving watts costs a lot too. It's not the same with computers, because I can build a low-power mini ITX system for less money, not more. But I prefer micro ATX, as mini ITX has some disadvantages... No, I do not live alone. Other people prefer light... What can I do?!

But that Atom (8 cores!) looks nice on paper. I would like to try it out; it seems a good one, but it's still at 20 watts.

I found an ECS KBN motherboard on Amazon with an A6-5200: quad core, Radeon GPU, 25 watts. Get an Intel...!! :lovetpu:

If you don't already do this, I'd say your "Intel because every single watt counts" is hypocrisy.

Back on topic:

8-core Atom? Please do enlighten me, because I kinda remember that Atoms were friggin' slow compared to their AMD low-power equivalents.

Someone has to tell me why this doesn't look awesome as a cheap server.

ASRock C2750D4I Mini ITX Server Motherboard FCBGA1283 DDR3 1600/1333

And I prefer low-end NVIDIA chips, as I never had any problems with their drivers and I am sure they will work for my needs, so why change?! Not only every single watt but also every single instruction per second counts. Single-core performance is crucial, as I like to disable all the unnecessary cores to use even less power.

And maybe the opposite of overclocking in extreme cases. I did that already! :D

EDIT:

And to mention another DECISIVE factor for me in choosing Intel instead of AMD: CPU pins. I let my E2200 fall onto the ground in December 2013 and I was happy it was not an AMD. Otherwise, it would have bent many pins and given me a lot of headache and a non-working processor.

Ever bent a pin on an LGA motherboard? It's a bitch to fix, and more often than not you can't fix it. While I like LGA in general because of how the CPU is secured, I'm less worried about bending a pin on a CPU than on an LGA motherboard.

You're a big-telly-owning, AMD-hating fool who should stick to using a PDA or pad, IMHO. Efficiency, WTF? And as for this last reply, he was removing a heatsink harshly.

You dropped ya soddin' chip, er, you're worse.

And what the feck any of this has to do with the OP is beyond me.

I think it would take extreme violence for that to happen with my cooler, if it's even possible. You just need to follow simple rules and it'll be fine.