Monday, March 23rd 2015

AMD Bets on DirectX 12 for Not Just GPUs, but Also its CPUs

In an industry presentation on why the company is excited about Microsoft's upcoming DirectX 12 API, AMD revealed the feature it considers most important, one that could affect not only its graphics business but also potentially revive its CPU business among gamers. DirectX 12 will make its debut with Windows 10, Microsoft's next big operating system, which will be given away as a free upgrade to _all_ current Windows 8 and Windows 7 users. The OS will come with a usable Start menu, and could lure gamers who stood their ground on Windows 7.

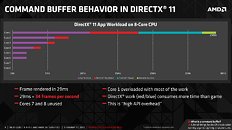

In its presentation, AMD touched upon two key features of DirectX 12: the most important, multi-threaded command buffer recording, and asynchronous compute scheduling/execution. A command buffer is a list of commands that the CPU records for the GPU to execute when drawing a 3D scene. Some elements of 3D graphics are still better suited to serial processing, and no single SIMD unit from any GPU architecture has reached throughput parity with a modern CPU core. DirectX 11 and its predecessors remain largely single-threaded on the CPU in the way they schedule command buffers.

A graph from AMD showing how a DirectX 11 app spreads CPU load across an 8-core CPU reveals how poorly the API is optimized for today's CPUs. The API and driver code executes almost entirely on one core, which is bad even for dual- and quad-core CPUs (even if you fundamentally disagree with AMD's "more cores" strategy). Piling more serial API and driver work onto fewer cores is what makes up the "high API overhead" issue that AMD believes is holding back PC graphics efficiency compared to consoles, and it has a direct and significant impact on frame-rates.

DirectX 12 heralds a truly multi-threaded command buffer pathway, which scales with however many CPU cores you throw at it. Driver and API workloads are split evenly between CPU cores, significantly reducing API overhead and resulting in large frame-rate increases. How big that increase is in the real world remains to be seen. AMD's own Mantle API addresses this exact issue with DirectX 11 and offers a CPU-efficient way of rendering. Its performance gains are significant in CPU-limited scenarios such as APUs, but on bigger setups (e.g., high-end R9 290 series graphics at high resolutions), the gains, though significant, are not mind-blowing. In some scenarios, Mantle made the difference between "slideshow" and "playable." Even cynics have to give DirectX 12 the benefit of the doubt: it could end up doing a better job than even Mantle at pushing paper through multi-core CPUs.
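A rough way to picture multi-threaded command buffer recording: each CPU thread records its own command list for a slice of the scene, and the finished lists are then submitted to the GPU queue in a fixed order. The sketch below is a toy model in Python, not Direct3D 12 code; `CommandList`, `record_slice` and `record_frame` are hypothetical names (in the real API the rough equivalents are `ID3D12GraphicsCommandList` objects handed to `ID3D12CommandQueue::ExecuteCommandLists`). Python threads won't show real parallel speedups here because of the GIL, so treat it as an illustration of the structure, not of the timing.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class CommandList:
    """Hypothetical stand-in for a command list recorded by one CPU thread."""
    slot: int
    commands: list = field(default_factory=list)

    def draw(self, object_id: int) -> None:
        # Each thread appends only to its own list, so recording
        # needs no locks while several threads work at once.
        self.commands.append(("draw", object_id))


def record_slice(slot: int, object_ids: list) -> CommandList:
    """Record draw commands for one contiguous slice of the scene."""
    cl = CommandList(slot=slot)
    for obj in object_ids:
        cl.draw(obj)
    return cl


def record_frame(object_ids: list, num_threads: int) -> list:
    """Split the scene across threads, record in parallel, submit in order."""
    if num_threads < 1:
        raise ValueError("need at least one recording thread")
    if not object_ids:
        return []
    chunk = -(-len(object_ids) // num_threads)  # ceiling division
    slices = [object_ids[i:i + chunk] for i in range(0, len(object_ids), chunk)]
    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        # map() yields results in input order, whichever thread finishes first.
        lists = list(pool.map(record_slice, range(len(slices)), slices))
    # "Submission": a single queue consumes the lists strictly in slot order.
    queue = []
    for cl in lists:
        queue.extend(cl.commands)
    return queue


if __name__ == "__main__":
    print(record_frame(list(range(10)), num_threads=4)[:3])
```

Calling `record_frame(list(range(10)), num_threads=4)` yields the ten draw commands in their original order even though four threads recorded them. Keeping the submission order fixed is what lets recording go wide across cores without changing what the GPU ends up drawing.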

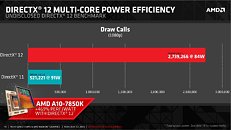

AMD's own presentation appears to agree with the way Mantle played out in the real world (benefits for APUs vs. high-end GPUs). A slide highlights how DirectX 12 and its new multi-core efficiency could step up the draw-call capacity of an A10-7850K by over 450 percent. Suffice it to say, DirectX 12 will be a boon for smaller, cheaper mid-range GPUs, and make PC gaming more attractive to the gaming crowd at large. Fine-grained asynchronous compute scheduling/execution is another feature to look out for. It breaks down complex serial workloads into smaller, parallel tasks, and ensures that otherwise unused GPU resources are put to work on them.

So where does AMD fit in all of this? DirectX 12 support will no doubt help AMD sell GPUs. Like NVIDIA, AMD has preemptively announced DirectX 12 API support on all its GPUs based on the Graphics Core Next architecture (Radeon HD 7000 series and above). AMD's real takeaway from DirectX 12, however, will be how its cheap 8-core socket AM3+ CPUs could gain tons of value overnight. The notion that "games don't use more than four CPU cores" will dramatically change: a DirectX 12 game can split its command buffer and API loads between however many CPU cores you throw at it. AMD sells 8-core CPUs for as little as $170 (the FX-8320). Intel's design strategy of placing fewer but stronger cores on its client processors could face its biggest challenge with DirectX 12.
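The fine-grained asynchronous compute idea described above is easier to see with numbers. The toy model below, in Python, assumes a GPU with a fixed number of identical execution units, graphics work that leaves some of those units idle on each time step, and a pool of small compute tasks that each occupy one unit for one step. These are simplifying assumptions for illustration only; real GPU schedulers and the DirectX 12 queue model are far more involved, and `steps_serial` and `steps_async` are hypothetical names.

```python
def ceil_div(a: int, b: int) -> int:
    """Ceiling division for non-negative a and positive b."""
    return -(-a // b)


def validate(graphics_busy: list, units: int) -> None:
    """Graphics can never occupy more units than the GPU has."""
    for busy in graphics_busy:
        if not 0 <= busy <= units:
            raise ValueError("graphics occupancy must be between 0 and units")


def steps_serial(graphics_busy: list, compute_tasks: int, units: int) -> int:
    """Compute waits until all graphics work is done, then uses every unit."""
    validate(graphics_busy, units)
    return len(graphics_busy) + ceil_div(compute_tasks, units)


def steps_async(graphics_busy: list, compute_tasks: int, units: int) -> int:
    """Small compute tasks fill whatever units graphics leaves idle each step."""
    validate(graphics_busy, units)
    remaining = compute_tasks
    for busy in graphics_busy:
        idle = units - busy
        remaining -= min(idle, remaining)  # idle units each take one task
    # Whatever did not fit into idle slots runs after graphics finishes.
    return len(graphics_busy) + ceil_div(remaining, units)


if __name__ == "__main__":
    busy = [8, 5, 3, 6, 2]  # hypothetical per-step graphics occupancy
    print(steps_serial(busy, 20, 8), steps_async(busy, 20, 8))
```

With 8 units, graphics work occupying 8, 5, 3, 6 and 2 units over five steps, and 20 compute tasks, the serial schedule needs 8 steps while the interleaved one needs 6, because 16 of the 20 tasks fit into units the graphics work would otherwise have left idle.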

87 Comments on AMD Bets on DirectX 12 for Not Just GPUs, but Also its CPUs

So what, we should just stick with DX9 - 11 based games forever & never innovate to create/ move to a new & better API? o_O

I don't know if you remember how things worked back in the day. ATi was never really able to get developers to use their proprietary tech, not in any meaningful way anyway. AMD have been far more successful in getting the tech they develop adopted by developers and especially by Microsoft. That's not really surprising, considering that ATi/AMD GPUs power the last two console generations from Microsoft (Xbox 360 and Xbone). At any rate, AMD get their technologies (well, the big stuff anyway) implemented in DirectX, which means mass adoption by developers, and Microsoft get to spend less developing new tech for newer versions of DirectX. It's a win-win for everyone, really: AMD, Microsoft, the users... hell, even nVidia, since they get full access to everything.

Believe it or not, DX12 will be most important for people using CPUs like the A10-7850K. The gap between lower-end CPUs and the high end will be a whole helluva lot smaller with DX12, which means that entry-level and especially lower mainstream CPUs will be far more appealing to the gaming crowd. Think back to the times when you could overclock your cheap CPU to the point where you'd get performance similar to high-end models. It wasn't that long ago.

Mantle is AMD's insurance policy: they don't have to wait for a new DirectX to have an API that lets developers use whatever technology they want to push. Furthermore, they'll have an extra advantage over nVidia the next time Nintendo and Sony need a new GPU/APU for their consoles: not having to rely on their competitor's own API or on OpenGL is a pretty big selling point. As for how good AMD's current architecture is in DX12 versus nVidia's Maxwell, that doesn't really matter. AMD is going to launch a new generation of GPUs, and it's very likely that those GPUs will use an improved version of the current GCN architecture. That's what matters to them. Comparing the current GCN used in Radeon HD 7000 series cards with nVidia's (mostly) brand-new Maxwell isn't entirely relevant.

What DX9-11 game are you playing that requires more than an 8-core FX or a Phenom II X6 for smooth gameplay? I'm asking because I don't play every game that's out there, and maybe I'm missing something. Me, I'm using a Phenom II X6 1055T and I really can't find a single game I can't max out. I had a Core i7 2600K before. I sold it, bought the Phenom II X6 with about 40% of the money I got for the Core i7, and I really can't see the difference in any of the games that I play.

Umm, what? No offense, but do you even know what you're talking about? What does Intel have to do with anything? And why sue Microsoft? You're really missing the big picture here. Low-level APIs are the "secret weapon" the consoles have. Basically, having low-level APIs and fixed hardware specs allows developers to create games with graphics that would normally require a lot more processing power to pull off on the PC.

Windows operating systems have always been bloated, one way or another. The same is true for DirectX. Lately, though, Microsoft has been trying to sort things out in order to create a more efficient software ecosystem. That's right, not just the operating system(s), but everything Microsoft, including DirectX. They need to be able to offer software that is viable for mobile devices (as in, phones and tablets), not just PCs, or they won't stand a chance against Android. Furthermore, they want to offer users devices that can communicate and work with each other across the board. That means they need a unified software ecosystem, which in turn means making an operating system that can work on all these devices, even if there will be device-specific versions that are optimized to some degree (ranging from UIs customised for phones/consoles/PCs to power consumption algorithms and so on).

In order to create such a Jack-of-all-trades operating system, they need it to have the best features in one package: low-level APIs from consoles, draconian power consumption profiles from phones, very light processing power requirements from both phones and consoles, excellent backwards compatibility through virtualization from PCs, the excellent diversity of software you can only get from a PC, and so on. Creating such an operating system takes a lot of money and effort, not to mention time. They've already screwed up on the UI front with Windows 8 (yeah, apparently it wasn't a no-brainer for them that desktops and phones can never use the same UI). Hopefully, they've learned their lesson and Windows 10 will turn out fine.

DX12 is a major step forward in the right direction, not some conspiracy between Microsoft and Intel to keep AMD CPUs down in the gutter. Nobody would have given this conspiracy theory a second thought if AMD had had the money they needed to stay on track with CPU development. But reality is like a kick in the teeth, and AMD CPUs are sadly not nearly as powerful as they'd need to be to give Intel a run for their money. Intel stands to lose more ground because they offer the highest-performing CPUs, but I think we can all agree that they didn't get here (having the best performance) by conspiring with Microsoft. Besides, gamers are far from the only ones buying Intel CPUs. Actually, DX12 will be detrimental to AMD as well: fewer people will feel the need to upgrade their CPUs to prepare for upcoming games.

But I'd be thrilled if they're confirmed as soon as consumers get their hands on W10 and DX12, especially if AMD processors stay in their current price ranges. I can't even imagine the new landscape that would emerge in the gaming community. "Value-oriented gaming" would change completely.

Also, there was an agreement between Microsoft and Intel that had a negative influence on progress.

AMD probably couldn't do anything because they were happy to have that x86 license at all.

It's still a mystery why x86 still exists and why AMD doesn't jump on anything else. Maybe it's because in the beginning they simply copied Intel's processors.

To me it sounds like the real benefit will be in low-end and mainstream gaming rigs.

Let's just hope DX12 shows up in games fast, so we can finally wave goodbye to DX9-11.

It would be nice if it did. I haven't spent 3500 euros on CPUs for nothing, as most of my friends, or the followers of my build log, would like to think.

Theoretically there would be no problem to handle as many threads as you like.

Compared to this, my 2x 12-core/48-thread CPUs seem outdated.

Perhaps we will one day see an ASUS ROG board made for these kinds of CPUs, who knows!

Sure, AMD started out by copying Intel's CPUs, but that was a very long time ago, and it was part of the agreement between AMD, Intel and IBM. AMD hasn't copied an Intel design for well over a decade now. As for why AMD doesn't "jump on anything else", well, that's not exactly true. They've been tinkering with ARM designs for a while now, and the results are going to show up pretty soon. Developing an entirely new standard to compete with x86 would take incredible amounts of money and time, and AMD has neither in ample supply. Not to mention that PC software was built from the ground up for x86 machines, and you'd have better luck drawing up an entirely new GPU on a napkin during lunch break than convincing software developers to remake all their software for the new standard.

Well, supposedly, you'd be able to use all those cores in games. Not that you'd find graphics cards that would be powerful enough to register significantly better performance (higher than 5-10%) than ye average i7 4790K or flagship FX CPU. You also wouldn't need to upgrade the CPU for a decade or so, but then again, your e-peen would shrink big time. Ouch.

It is indeed likely that the R9 390X/Fiji is the only new core, just like Hawaii was when it launched. However, judging by the "50-60% better performance than 290X" rumor, as well as the specs (4096 shaders, for starters), I don't think those are ye regular GCN shaders. If Fiji indeed ends up 50-60% faster than Hawaii while having only about 45% more shaders, it will be a rather amazing feat. Just look at Titan X: it has at least 50% more of everything compared to a GTX 980, but it only manages 30% better real-life performance.

And that's actually a bit above average as far as scaling goes. Due to the complexity of GPUs, increasing shader count and/or frequency will never yield the same percentage in real-life performance gains. And yet, Fiji is coming to the table with a performance gain that EXCEEDS the percentage by which the number of shaders has increased. No matter how good HBM is, it cannot explain such performance gains by itself. If you agree that Hawaii had sufficient memory bandwidth, then only two things can account for the huge gain: a 2GHz shader frequency (which is extremely unlikely) or modified/new shaders. My money's on the shaders.

No, the fact that most of the new 300 series are rebrands wouldn't be an issue. AMD focused all their efforts on Fiji and, lo and behold, they got to use HBM one generation before nVidia. They now have the time and expertise required to design new mainstream/performance cards that use HBM and put them into production once HBM is cheap enough (real mass production). Besides, where's the rush? There are no DX12 titles out yet; Windows 10 isn't even out yet. By the time there's a DX12 game out that you'd want to play, you'll be able to buy 400 series cards.

As for AMD's financial troubles, people have been saying they're gonna go bankrupt for a good long while now. And guess what, they're still alive and kicking. I'm thankful that AMD price their cards so that they're affordable for most people, not just the rich and silly who are more than willing to pay a thousand bucks or more for a card just because it has nVidia's logo and "Titan" written on it. It also makes sense for them: a lot more people are likely to buy their cards since a lot more people can afford to. I'm sorry, but you'll have to live with the fact that your shiny new graphics card is overpriced beyond reason. Don't try to convince others that they should pay more for their cards just so you can feel good about yourself.

Bingo. We have a winner here. I wholeheartedly agree.

> Well, supposedly, you'd be able to use all those cores in games. Not that you'd find graphics cards that would be powerful enough to register significantly better performance (higher than 5-10%) than ye average i7 4790K or flagship FX CPU. You also wouldn't need to upgrade the CPU for a decade or so, but then again, your e-peen would shrink big time. Ouch.

Well, that was what I was planning in the first place when I got these CPUs: not needing to change the CPU setup for a long time. Sure, the GPUs will need to be refreshed now and then, but the CPUs could stay in place for a long time, I reckoned.

It seems my logic was good, as my logic almost always is.

Now to choose the proper GPU setup.

Probably, if the apps are coded right, one would, or at least could, get a big performance boost even from an R9 295X2 CrossFire setup, with 4 GPUs and a unified 16 GB of RAM.

Too bad there isn't a dual-GM200 board out there; that would have been my choice of GPU.

As it is right now, I will probably go with Titan X SLI, as I already own a G-Sync monitor. However, it's going to take, I think, two months before I actually acquire them. Until then, I hope to learn more about the 390X and see if it's worth it.