Monday, March 23rd 2015

AMD Bets on DirectX 12 for Not Just GPUs, but Also its CPUs

In an industry presentation on why the company is excited about Microsoft's upcoming DirectX 12 API, AMD highlighted the API's most important feature, one that could not only impact its graphics business, but also potentially revive its CPU business among gamers. DirectX 12 will make its debut with Windows 10, Microsoft's next big operating system, which will be given away as a free upgrade for _all_ current Windows 8 and Windows 7 users. The OS will come with a usable Start menu, and could lure gamers who stood their ground on Windows 7.

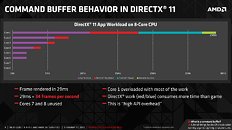

In its presentation, AMD touched upon two key features of DirectX 12: multi-threaded command buffer recording, which it considers the most important, and asynchronous compute scheduling/execution. A command buffer is a list of rendering commands that the CPU records and submits to the GPU when drawing a 3D scene. There are some elements of 3D graphics that are still better suited for serial processing, and no single SIMD unit from any GPU architecture has managed to gain performance throughput parity with a modern CPU core. DirectX 11 and its predecessors are still largely single-threaded on the CPU in the way they schedule command buffers.

A graph from AMD on how a DirectX 11 app spreads CPU load across an 8-core CPU reveals how badly optimized the API is for today's CPUs. The API and driver code is executed almost entirely on one core, and this is something that's bad for even dual- and quad-core CPUs (if you fundamentally disagree with AMD's "more cores" strategy). Overloading fewer cores with more API and driver-related serial workload makes up the "high API overhead" issue that AMD believes is holding back PC graphics efficiency compared to consoles, and it has a direct and significant impact on frame-rates.

DirectX 12 heralds a truly multi-threaded command buffer pathway, which scales up with any number of CPU cores you throw at it. Driver and API workloads are split evenly between CPU cores, significantly reducing API overhead and resulting in huge frame-rate increases. How big that increase is in the real world remains to be seen. AMD's own Mantle API addresses this exact issue with DirectX 11, and offers a CPU-efficient way of rendering. Its performance yields are significant in GPU-limited scenarios such as APUs, but on bigger setups (e.g. high-end R9 290 series graphics, high resolutions), the performance gains, though significant, are not mind-blowing. In some scenarios, Mantle offered the difference between "slideshow" and "playable." Cynics have to give DirectX 12 the benefit of the doubt. It could end up doing a better job than even Mantle at pushing paper through multi-core CPUs.
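To make the idea of multi-threaded command buffer recording more concrete, here is a minimal Python sketch of the general pattern: several workers each record commands for their own slice of the scene into a private list, and the lists are then submitted in a fixed order. This is only a toy model under stated assumptions. It does not use the Direct3D 12 API, and the names `record_command_list`, `split_scene`, and `build_frame` are hypothetical, chosen purely for illustration. Python threads also will not show a real speed-up here; the point is the structure, not the performance.

```python
from concurrent.futures import ThreadPoolExecutor


def record_command_list(chunk):
    """Record draw commands for one slice of the scene into a private list."""
    # Each worker writes only to its own list, so no locking is needed.
    return [("draw", object_id) for object_id in chunk]


def split_scene(object_ids, num_workers):
    """Split the scene's objects into contiguous chunks, roughly one per worker."""
    chunk_size = max(1, -(-len(object_ids) // num_workers))  # ceiling division
    return [object_ids[i:i + chunk_size]
            for i in range(0, len(object_ids), chunk_size)]


def build_frame(object_ids, num_workers):
    """Record command lists in parallel, then 'submit' them in chunk order."""
    chunks = split_scene(object_ids, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # map() yields results in input order, whichever thread finishes first.
        command_lists = list(pool.map(record_command_list, chunks))
    # Hypothetical stand-in for queue submission: concatenate in a fixed order.
    submitted = [cmd for command_list in command_lists for cmd in command_list]
    return command_lists, submitted
```

Calling `build_frame(list(range(10)), 4)` records four separate command lists and submits the ten draw commands in their original order. The single-threaded model the article attributes to DirectX 11 would correspond to one worker recording everything.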

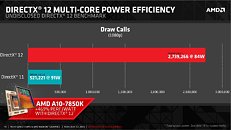

AMD's own presentation appears to agree with the way Mantle played out in the real world (benefits for APUs vs. high-end GPUs). A slide highlights how DirectX 12 and its new multi-core efficiency could step up the draw-call capacity of an A10-7850K by over 450 percent. Suffice it to say, DirectX 12 will be a boon for smaller, cheaper mid-range GPUs, and make PC gaming more attractive for the gamer crowd at large. The fine-grain asynchronous compute scheduling/execution is another feature to look out for. It breaks down complex serial workloads into smaller, parallel tasks. It will also ensure that unused GPU resources are put to work on these smaller parallel tasks.

So where does AMD fit in all of this? DirectX 12 support will no doubt help AMD sell GPUs. Like NVIDIA, AMD has preemptively announced DirectX 12 API support on all its GPUs based on the Graphics Core Next architecture (Radeon HD 7000 series and above). AMD's real takeaway from DirectX 12 will be how its cheap 8-core socket AM3+ CPUs could gain tons of value overnight. The notion that "games don't use >4 CPU cores" will dramatically change. Any DirectX 12 game will split its command buffer and API loads between any number of CPU cores you throw at it. AMD sells you 8-core CPUs for as low as $170 (the FX-8320). Intel's design strategy of placing stronger but fewer cores on its client processors could face its biggest challenge with DirectX 12.

87 Comments on AMD Bets on DirectX 12 for Not Just GPUs, but Also its CPUs

DirectX 12 Delivers: AMD, Nvidia, And Intel Hardware Tested With Awesome Improvements

Many major hardware news sites report about Samsung attempting to acquire AMD.

If this happens, the best news for quite a while.

Samsung To Allegedly Acquire AMD To Compete With Intel And Qualcomm Head On

Read more: wccftech.com/amd-allegedly-merge-samsung/#ixzz3VVRRd3g5

"Making super powerful mega multicore CPUs even more of a waste of money (than they are now)." To put you in your place, since you probably have absolutely horrible testing methods of your own: my Q9550 is 2 of your CPUs in 1 package. If you run any modern game in windowed mode while running Task Manager in the background, you'll see that your CPU is using a lot more % than even mine. Mine uses 60-70% while running any modern AAA title. I am not going to get 60fps in BF4 at 1080p with my GTX 760 even at the lowest gfx settings, because the CPU itself is a bottleneck.

On the other hand... perhaps your monitor is just garbage, where you just can't see the clarity in motion compared to better monitors, and that's why you are fine with it. If you had a more modern monitor to compare with yours side by side, you'd instantly see the clarity in motion, along with every other bit of imperfection. Furthermore, 60fps isn't even quality compared to a consistent 120fps framerate.

www.web-cyb.org/images/lcds/blurbusters-motion-blur-from-persistence.jpeg

This will show you the difference in clarity while in motion

You fps whores really crack me up. Like it matters THAT much. As if. Just turn the fps monitor off and play your game. You see how much funner that is?

If it makes you feel better to have a more "powerful" CPU than mine, good for you. I'm happy for you. Whatever it does for you, that's great. Have at it. More "power" to you. Pardon the pun.

BTW, you haven't taught me anything I didn't already know. I'm not stupid. I've been doing this for a long time now. This is not my first rodeo. But I also have a life that doesn't revolve entirely around my computer. Frankly, I've got better things to do than play games...of any sort. But I've been addicted to video games since I was ~4 years old. So I doubt I'll ever stop playing them, at least occasionally.

I find BF4 very playable at as low as 35 fps. I laugh at idiots like xfaptor or levelcap or whoever these "pro" players might be running BF4 at low details just so that they can have 144 fps (anything less is extremely noticeable and significantly affects gameplay. pro note: they were saying/doing the same crap when 120Hz was the thing).

At the same time, Skyrim is absolutely unplayable for me when fps dips below 50 (I just get sick of how the image moves or something, I cannot explain it).