Monday, March 23rd 2015

AMD Bets on DirectX 12 for Not Just GPUs, but Also its CPUs

In an industry presentation on why the company is excited about Microsoft's upcoming DirectX 12 API, AMD revealed the feature it considers most important, one that could impact not only its graphics business but also potentially revive its CPU business among gamers. DirectX 12 will make its debut with Windows 10, Microsoft's next big operating system, which will be given away as a free upgrade to _all_ current Windows 8 and Windows 7 users. The OS will come with a usable Start menu, and could lure gamers who stood their ground on Windows 7.

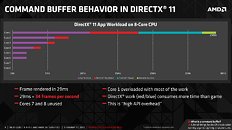

In its presentation, AMD touched upon two key features of DirectX 12: the most important one, multi-threaded command buffer recording, and asynchronous compute scheduling/execution. A command buffer is a list of commands that the CPU records for the GPU to execute when drawing a 3D scene. Some elements of 3D graphics are still better suited to serial processing, and no single SIMD unit from any GPU architecture has matched the serial throughput of a modern CPU core. DirectX 11 and its predecessors are still largely single-threaded on the CPU in the way they schedule command buffers.

A graph from AMD on how a DirectX 11 app spreads CPU load across an 8-core CPU reveals how poorly optimized the API is for today's CPUs. The API and driver code is executed almost entirely on one core, and this is bad even for dual- and quad-core CPUs (if you fundamentally disagree with AMD's "more cores" strategy). Overloading a few cores with serial API- and driver-related work is what makes up the "high API overhead" issue that AMD believes is holding back PC graphics efficiency compared to consoles, and it has a direct and significant impact on frame-rates.

DirectX 12 heralds a truly multi-threaded command buffer pathway, which scales up with the number of CPU cores you throw at it. Driver and API workloads are split evenly between CPU cores, significantly reducing API overhead, which should translate into large frame-rate increases. How big that increase is in the real world remains to be seen. AMD's own Mantle API addresses this exact issue with DirectX 11, and offers a CPU-efficient way of rendering. Its performance gains are significant in CPU-limited scenarios such as APUs, but on bigger setups (e.g. high-end R9 290 series graphics at high resolutions), the gains, though significant, are not mind-blowing. In some scenarios, Mantle made the difference between "slideshow" and "playable." Even cynics have to give DirectX 12 the benefit of the doubt. It could end up doing a better job than even Mantle at pushing paper through multi-core CPUs.
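The multi-threaded recording pattern described above can be sketched in a few lines. This is a minimal illustration, not the Direct3D 12 API: `record_command_list` and `build_frame` are hypothetical names, and a "command" is just a tuple. Each worker thread records into its own private list (so no locking is needed while recording), and the finished lists are then handed over for execution in a fixed order from a single point. In CPython the global interpreter lock keeps these threads from running Python code truly in parallel, so the sketch shows the structure of the technique, not its speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def record_command_list(worker_id, objects):
    """Hypothetical recorder: build one worker's private command list.

    A command is modelled as a plain tuple; this is not a real graphics API.
    Because each worker writes only to its own list, no locks are needed.
    """
    return [("draw", worker_id, obj) for obj in objects]


def build_frame(scene_objects, num_workers):
    """Record a frame's commands on several threads, then submit in order."""
    if num_workers < 1:
        raise ValueError("num_workers must be >= 1")
    if not scene_objects:
        return []
    # Split the scene into contiguous chunks, one per worker.
    chunk = (len(scene_objects) + num_workers - 1) // num_workers
    slices = [scene_objects[i:i + chunk]
              for i in range(0, len(scene_objects), chunk)]
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # map() yields results in submission order, so the final order is
        # deterministic no matter which thread finishes recording first.
        command_lists = list(
            pool.map(record_command_list, range(len(slices)), slices))
    # Single submission point: concatenate the lists in a fixed order,
    # standing in for handing recorded lists to one queue.
    return [cmd for cl in command_lists for cmd in cl]


if __name__ == "__main__":
    frame = build_frame([f"mesh{i}" for i in range(10)], num_workers=4)
    print(len(frame), frame[0], frame[-1])
    # prints: 10 ('draw', 0, 'mesh0') ('draw', 3, 'mesh9')
```

The design choice that matters here is that recording is split across threads while submission stays ordered; that is what lets per-frame CPU work spread over several cores without scrambling the draw order.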

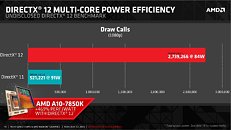

AMD's own presentation appears to agree with the way Mantle played out in the real world (bigger benefits for APUs than for high-end GPUs). A slide highlights how DirectX 12 and its new multi-core efficiency could step up the draw-call capacity of an A10-7850K by over 450 percent. Suffice it to say, DirectX 12 will be a boon for smaller, cheaper mid-range GPUs, and make PC gaming more attractive to the gamer crowd at large. Fine-grained asynchronous compute scheduling/execution is another feature to look out for. It breaks complex serial workloads down into smaller, parallel tasks, and ensures that otherwise idle GPU resources are put to work on those tasks.

So where does AMD fit in all of this? DirectX 12 support will no doubt help AMD sell GPUs. Like NVIDIA, AMD has preemptively announced DirectX 12 API support on all its GPUs based on the Graphics CoreNext architecture (Radeon HD 7000 series and above). AMD's real takeaway from DirectX 12, however, is that its cheap 8-core socket AM3+ CPUs could gain a lot of value overnight. The notion that "games don't use more than 4 CPU cores" will change dramatically, since any DirectX 12 game will split its command buffer and API loads across all the CPU cores you throw at it. AMD sells 8-core CPUs for as little as $170 (the FX-8320). Intel's design strategy of placing fewer but stronger cores on its client processors could face its biggest challenge with DirectX 12.
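How far "any number of CPU cores" actually goes depends on how much of the per-frame CPU work is parallel. Amdahl's law gives the ideal speedup on n cores when a fraction p of the single-core time parallelizes perfectly: S(n) = 1 / ((1 - p) + p / n). The sketch below evaluates it; the parallel fractions used are assumptions chosen for illustration, not figures from AMD or Microsoft.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Ideal speedup per Amdahl's law.

    parallel_fraction: share of single-core run time that can be spread
    evenly across `cores`; the remainder stays serial.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if cores < 1:
        raise ValueError("cores must be >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # Assumed parallel fractions for illustration, not measured DirectX data.
    for p in (0.5, 0.9):
        print(p, [round(amdahl_speedup(p, n), 2) for n in (1, 2, 4, 8)])
```

With p = 0.9, eight cores yield roughly a 4.7x speedup rather than 8x; with p = 0.5, under 1.8x. The more API and driver work is moved off a single serial path, the closer an 8-core chip such as the FX-8320 gets to putting all of its cores to use.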

87 Comments on AMD Bets on DirectX 12 for Not Just GPUs, but Also its CPUs

Its purpose is to shift the main source of performance gains from relying almost entirely on IPC improvements to using all available hardware resources properly: loading as many transistors as possible simultaneously, instead of waiting for one thread to finish its task before another takes its place in the queue.

Actually, the shift to Windows 10 and DirectX 12 will be the main requirement for future 4K gaming.

Can't wait for this, because this is the light at the end of the tunnel.

I don't care if it's a new platform... not sure if DDR4 would be a good idea...

just something..

Dual-6-core CPUs on an OCing board would be OK with me.

Sorry...but I have to laugh now...:laugh: Why?

Because my "casual gaming rig" with an ancient dual core(E8600) and a 280X runs anything you've got at more than playable speeds. And when I run Star Swarm, D3D vs. Mantle, it's the "slideshow" vs. "playable"(if it were) scenario. Soo...

BTW, I've already shifted to Windows 10. I've had it running on said machine for a couple months now.:pimp:

I've got some more interesting info to share, about the Windows beta testing, switching from mobile, please standby...

O.k., back with my news. I tried running Star Swarm on Windows 10 (first time) and... wait for it... Star Swarm (public version) appears to use DX12.

No longer is there a significant difference between runs with Mantle or D3D on my machine. In fact... D3D is now looking slightly faster!!! Whereas with DX11, D3D was WAY slower than Mantle. I'm going to run it a few more times to verify. Anyone else want to join in the experiment?

Alrighty then. I've seen enough. I'm going to go ahead and call it, Mantle and D3D12 are nearly the same in terms of performance. At least on my machine, running Star Swarm. But I don't see it being a much different story on any machine, frankly. ;)

UPDATE

I screwed that up massively. Too embarrassed to admit what I did. But it made a huge difference in the comparison testing. And it turns out I'm eating my words as we speak. Because after correcting for idiocy, Mantle is now significantly faster than D3D12, on my machine, running Star Swarm. It now looks like Mantle is around 50%-75% faster in this case/scenario. No real big surprise there I guess, considering it's meant to glorify Mantle. But, on the other hand, D3D12 is still MUCH faster than D3D11. I'd say by AT LEAST 100%, possibly more... IIRC (since I'd have to downgrade to DX11 to compare results now, and I don't want to).

..or 9 or 15 even.

I think Mantle is not good enough.

*See previous post.

Dual 6-core CPUs on an OCing board for a PC game is overkill x2... It probably won't be worth it.

Just going off of what you and AMD Scorpion said, the R9-390X is rumored to perform 50% to 60% above the R9-290X at the same TDP as the R9-290X, and this is probably thanks to HBM. Whether it needs a better GCN architecture is somewhat irrelevant right now. What AMD needs is to release its next line of graphics cards faster, and keep delivering a 30% to 50% increase each generation with fewer mess-ups. This in turn increases their revenue so they have more cash in their pockets. The better GCN architecture can come down the line in the next 1 or 2 generations. AMD has a 1-to-2-generation lead over the competition because NVIDIA is basically going to release Volta (its own version of HBM, in a generation or two) in the not too distant future. In my opinion, the Maxwell GTX Titan X has, overall, been a flop relative to expectations. Someone quoted the Maxwell GTX Titan X as performing only 30% better than the GTX 980; someone else in another TPU thread was quoting 23% gains over a GTX 980. Overall, my point is that AMD needs to push out graphics cards faster, and they need to stay reliable without the GTX 970 b.s. that NVIDIA pulled recently.

As for AMD's CPU cores, that's pretty much the situation with Bulldozer modules. AMD's next architecture will be quite different; it's going to have much more powerful cores and something along the lines of Hyper-Threading. Either way, what always matters most is what you can get for the money.

Oh, believe me, I never underestimate marketing. I know they can be very "generous" with their numbers, I've seen it happen plenty of times before. That's why I was saying that IF the rumors about the 390X being 50-60% faster than the 290X on average are true, then Fiji definitely has vastly improved GCN shaders. The vastly improved bandwidth and latencies that HBM brings to the table are not enough to explain such a huge boost in performance.

And yeah, AMD does need improved GCN shaders. Just don't expect to see them in the 300 series (except for Fiji, that is). They are going to wait for a new process node to bring out the good stuff for all the market segments. 300 series cards are going to be built on 28nm, and we won't have to wait much longer for 20nm to become available. There's no point in taping out the same cores twice (once on 28nm, and then on 20nm). Personally, unless I get a very sweet deal on Fiji, I'm going to wait for 20nm cores before I upgrade.

A lot of games that use D3D11 are already very well optimized for multiple cores.

For example, DA:Inquisition, BF, etc

www.dsogaming.com/pc-performance-analyses/dragon-age-inquisition-pc-performance-analysis/

But look at that ugly Windows 8, the ugliest operating system ever! :eek:

Microsoft with this design created a vast pile of bull crap. :D :(

1. We have a problem with very slow consoles which will spoil the fun, and progress.

2. I have read somewhere that designing photo-realistic gaming environments isn't necessarily a development target. Say goodbye to progress and hello to ugly animations.

3. AMD is in some sort of agreement with Intel and Microsoft to spoil the development. It doesn't make sense that they gave up on competing with Intel.

Maybe for the US market and economy it is better to have an active monopoly, so AMD will always be the weak underdog.

AMD has multiple opportunities to progress, but they don't take them.

techreport.com/news/28017/sec-filing-says-samsung-is-fabbing-chips-for-nvidia