Thursday, July 9th 2015

AMD Halts Optimizations for Mantle API

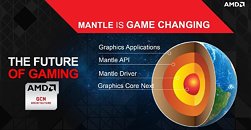

AMD has halted optimizations for its Mantle 3D graphics API on current and future graphics cards. The cards will retain Mantle support at the driver level so that existing Mantle applications keep running, but they will not receive any further Mantle performance optimizations from AMD. Launched around 2013, Mantle had a short stint with AAA PC games such as Battlefield 4, Thief, Sniper Elite III, and Star Citizen, offering noticeably higher performance than DirectX 11. The API improves how the CPU side of 3D graphics rendering is handled, particularly on today's multi-core, multi-threaded processors, significantly increasing the number of draw calls that can be submitted to the GPU.

AMD will now focus on DirectX 12 and Vulkan (the OpenGL successor from the Khronos Group). Why the company effectively killed its own promising two-year-old 3D API is anyone's guess. We postulate that AMD could have used Mantle to steer Microsoft into bringing the vital bare-metal optimizations it had reserved for the console to the PC ecosystem with DirectX 12. It appears to have served that purpose, and as if to hold up its end of a bargain, AMD 'withdrew' Mantle. DirectX 12 will feature a highly efficient command buffer that scales across any number of CPU cores, and will allow far more draw calls than DirectX 11. The new API makes its official debut with Windows 10, later this month. AMD's Graphics CoreNext 1.1 and 1.2 GPUs support DirectX 12 (feature level 12_0), as do rival NVIDIA's "Maxwell" GPUs. The company will continue to nurture Mantle as an "innovation base" for its upcoming tech, such as LiquidVR.

Source: PC Gamer

69 Comments on AMD Halts Optimizations for Mantle API

You might remember tessellation as the big feature of Direct3D 11, but did you know it was actually planned for Direct3D 10? Both AMD and Nvidia made their own hardware implementations, AMD provided Direct3D extensions, and both provided OpenGL extensions. For some reason this was left out of the final spec, and a more mature version arrived in Direct3D 11 instead.

So it's not that big of a leap ;)

This is how Vulkan was created:

Khronos: We need a new API, OpenGL Next is going nowhere.

AMD: Take the Mantle spec, please don't mess it up too much.

Khronos: Thanks, we'll fork it!

This is a true story; you can read about it and watch the Vulkan presentations. Even DICE (EA) didn't hide that Mantle was Vulkan.

If you compare Vulkan and Mantle, most of the spec is the same. There might be differences here and there, much like between a project and its fork.

You can compare Mantle and Vulkan to OpenSSL and LibreSSL (a fork that removed some code and added some code; ignore the security mess that is OpenSSL).

The root of the problem is the image quality setting in the Nvidia control panel. Check the difference (and proof) here:

http://hardforum.com/showpost.php?p=1041709168&postcount=84

There seems to be a performance drop of around 10% after setting the quality to highest.

Vulkan is essentially Mantle with modifications.

AMD donated its efforts to the Khronos Group, and some of the relationship is obvious from the name.

I myself use AF everywhere with my GTX 670, usually set to a sufficient value of 8. The picture would be blatantly blurred from a side view for all to see. But I can't compare Titan X vs. Fury X myself in a benchmark, I'm too poor to get ahold of 'em :laugh: :cry:

2009-2015 is what?

Idk.. maybe it's been too long. They should release a decent chip like.. 2 years ago to be halfway right.

FAIL, FAIL, make me mad, FAIL.

Now that everyone using Win7/8/8.1 can upgrade to Win10 for free in the first year, DX12 will be adopted much, much faster, and it also has huge advantages.

On AMD, default = higher quality, but it hurts performance.

On NV, default = worse quality, but it runs faster (10% according to BF4 tests).

Do a detailed analysis in recent games: image quality comparisons on default driver settings (since it's what you test on), same settings in-game, same location. Then do it again with quality forced in CC/NVCP.

You will get a ton of site hits from everyone interested in this issue. If it turns out to be true, then basically NV has "optimized" their way to extra performance for years at the expense of IQ.

The originator of that comparative video clarified the issue almost a week ago. Maybe you should have bothered checking past the sensationalist fanboy salivating and looked at the original posting at OcUK Forums - the whole issue was cleared up this time last week.

BTW (and on topic); What happened to the "over 100 game development teams who signed up for Mantle" ? Sorry guys, start over with DX12, it's all good!

Thought it only fair to redo this battlefield 4 bench with the exact same settings (Max Quality in the NCP). With the IQ exactly the same and settings exactly the same

The issue is with NVIDIA's default settings, i.e. the "out-of-the-box" settings. Websites like HardOCP use the driver's default settings for their PC benchmarks.

PS: I do have an MSI TwinFrozr GeForce 980 Ti OC. By default, the texture level is set to "quality".

I do wear glasses, but watching that video I didn't really see anything too noticeable in graphics quality. Really, IMO, if the graphical difference is so minor that it doesn't immediately pop out, then it doesn't matter. Kinda like TressFX in Tomb Raider: it didn't really matter, since it's not like you were playing the whole game watching her hair move around.

If you look at AMD's entire product stack for PC and realize the serial nature of DX11 was really holding it back, it's not too hard to understand the motivation behind Mantle and connect the dots to DX12.