Monday, August 10th 2015

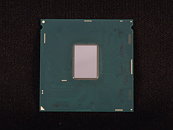

Intel Skylake De-lidded, Reveals Tiny Die

When Japanese tech publication PC Watch got under the hood (lid) of a Core i7-6700K quad-core processor, what they found was an unexpectedly small piece of silicon, shorter in proportion to its width than previous Intel dies such as Haswell-D and Ivy Bridge-D. It's smaller than even the i7-5775C's die, despite the same 14 nm process. That is because of its slimmer integrated graphics core, with just 24 execution units (compared to 48 on the i7-5775C), and the lack of the external 128 MB eDRAM cache for the iGPU.

The substrate Intel is using on the i7-6700K was found to be slimmer than the one on the i7-4770K, at 0.8 mm thick compared to 1.1 mm on the latter. A thicker IHS (integrated heatspreader) makes up for the thinner substrate, so using your older LGA1150 coolers on the new LGA1151 socket shouldn't cause problems. Intel is using a rather viscous silver-based TIM between the die and the IHS. The die also sits closer to the center of the IHS than on its predecessors. PC Watch replaced the stock TIM with Prolimatech PK-3 and Coollaboratory Liquid Pro, and found some impressive drops in temperature both at stock speed (4.00 GHz) and with a mild overclock (4.60 GHz).

Source:

PC Watch


112 Comments on Intel Skylake De-lidded, Reveals Tiny Die

Looking forward to Zen even more now that Intel have shafted us with a wad of glue and TIM again, instead of solder. April can't come soon enough ...

Intel may be guilty of using its clout in unfair ways (like influencing benchmark tools to favour its CPUs) but AMD has only itself to blame for their griefs.

Sounds like communist thinking. Are you for real?

Fear not though, AMD will not disappear, since a monopoly would not be allowed. So if they don't find a way to be profitable again, or if someone doesn't buy them, they will get propped up by government before being allowed to fold, so in effect, the poor little guy will get rewarded for countless bad decisions that put them where they are.

Don't take my words the wrong way; I want them to be successful and regain status. It's better for their employees, for the economy, and for all consumers, due to lower prices on Intel and Nvidia products. I'm just able to tell you, without emotion, what the truth behind their downfall is.

P.S. Intel is part of the big Judeo-Masonic conspiracy

It doesn't make sense for people who use AMD product names as their nicknames to be like that.

This thread is becoming a general nonsense one.

I'm also wearing a double tinfoil helmet (a simple hat would be useless here).

Intel HD Graphics 1st gen was introduced in January 2010 with Clarkdale/Arrandale. True, it was a multi-die design, but it shared the CPU's package, a full year before AMD. In January 2011, Intel's HD Graphics gen 2 was launched with Sandy Bridge.

Just calling it an "APU" doesn't make it special in any way, the CPU and GPU work mostly independently for both AMD and Intel.

So who was first?

And I don't even know what to say about Sony Xperia S... The only logical explanation is that he's Richard Huddy. But not exactly Huddy, but some kind of failed clone. If we take Aliens for example, and we consider that it takes 8 tries to get a clone right(ish), then Sony Xperia S is attempt number 4. You know, the clone that is rejected before it reaches the factory for Soylent Green.

Sony, dude, your ramblings hurt even the brains of the most avid AMD fans. If we are to believe you, Intel and nVIDIA are to blame for everything that's wrong with the world. And I'm not saying it is not possible, but the way you fabricate illogical conspiracies in your convoluted mind... there's not enough tin foil in the universe.

Peace!

This needs to stop. This was about the new quad-core Skylake die being delidded. Why is it that out of the past 10 threads in which I've participated/come across, half of them have to do with me doing my best to stop the bullshit nonsense that propagates from people like Sony Xperia S, rather than discussing something productive?

I'm not going to mince words. No one invited conspiracy theorists and far-left socialists into this thread. This thread is about the new Skylake die. Whoever made the connection between die size and the lack of competition from AMD needs to go home with his tail between his legs and be silenced permanently. Why is it that some people think that you can have a Mount Everest of silicon on a CPU without it having side effects pertaining to heat and power consumption? Hello? Are physics different in your universe? I assume that most users here are level-headed and are able to understand why Skylake's die is so small, unlike the nutjob who assumed that pressure from AMD will cause Intel to shit its pants and drop massive amounts of silicon onto every CPU. AMD doesn't even have a goddamned iGPU on the quad-module CMT die and it's so damned big! It ain't Intel's fault that AMD is stuck on 32nm for its "enthusiast" platform and has no plans to go further. Oh wait, it probably is, according to all the conspiracies here. Want more cores? AMD has AM3+, and Intel has LGA2011. There's the door. You know what to do.

Moreover, when Intel was behind in '04-05, they knew that they could not sustain their business by crapping in their pants and adding more stuff on the die, because there were already more than enough heat-generating components on die. Intel's engineers found out the hard way, with Pentium D (as AMD's engineers are experiencing with Bulldozer), that they needed a fresh new design that directly addressed their problems: a terrible pipeline, heat and power consumption. 2011's release of Bulldozer had AMD looking in the opposite direction instead. K10 was good, but it could have used improvements in cache speed and size (with Phenom X6, cache was no longer abundant per core), as well as a new process and small improvements elsewhere in the core. AMD's solution? Bulldozer said to K10 "you know what, f**k your problems, I'm going to take a dump all over them so we have to clean up both things later."

Free speech is a beautiful thing. It looks like some idiots are hell-bent on pushing it to the boundaries of what common sense allows. The problem with all these random, shitty claims of foul play on Intel's part is that they spread misinformation, metric tons of shit at a time.

Oh, and what about the guy who created an account here, more or less, for the sole purpose of shitting on this thread, which actually featured some interesting information about Skylake? Are YOU for real? How about you ask yourself the same question before dismissing others over their supposedly flawed political views? Hey, why don't you go and work for AMD? I'm sure they'd like a helping hand in political, conspiratorial bullshit with their upcoming Zen architecture. And color me surprised if you don't end up blaming Intel if Zen doesn't turn out that great.

One doesn't need to be an Intel fanboy to see how AMD has disastrously managed itself over the past few years. They literally sacrificed their CPU division by throwing away K10. That has nothing to do with Intel's shady dealings with OEMs.

*calms down, braces for inevitable shitstorm of incoming ignorance regarding more of how Intel, and Intel solely, is to be the death of AMD*

Now that that's over with: @tabascosauz hit the nail on the head. Now let's talk about delidding and Skylake.

There are clear and good reasons to be dissatisfied with Intel, and what it has to offer. Deal with it.

Banias - Pentium M <------------ Basically the origin of the Core architecture, here the development started to supplement P4 because it was so bad and Intel was getting its ass handed to it by AMD | Hebrew word

Dothan - 2nd gen Pentium M <--------------- 2nd iteration | Hebrew word

Yonah - First Core family, the Core Duos and Core Solos <------------- First appearance of the superior Core over Netburst, the fruits of Intel Israel's labours | Hebrew word?

Merom - Core 2 Duo mobile family, was to mobile as Conroe was to desktop (no shit Sherlock, this thing sitting beside me is called a T7100 and I'm well aware of the family of CPUs to which it belongs) <--------------- Core 2 is released to market, finally ending Pentium 4 | Hebrew word

Big deal. So Intel wanted to kill off Netburst, and Intel Israel did most of the work. What's the problem with honouring the country that turned Intel around by developing out of the old Pentium M? So, you tell me; what's your problem?

Disclaimer: I do not support Israel's political agendas, but I don't see a problem with honouring the work of the Israelis, which paid off with Core 2 after many long years and brought us the architecture that we know of today.

Like I said, why don't you send an email to Lisa Su and tell her about your opinions? I'm sure she'll be happy to hear what you have to say, since you're an extremely important driving factor in the industry. In any case, take your "deal with it" attitude somewhere else. We come here to get our images of the 6700K's die, not to endure you.

It's about time someone came around and locked this thread. There isn't much to be said about Skylake (BORING in itself), which is the topic of the news post, since we know everything already and hardly anything has changed about delidding since Haswell (unless...but that's for another news post). This is going on and on, and this newcomer, who we would've otherwise welcomed, has led this thread off topic for long enough.

And then we talk about attitudes :(

Back to tech. First and last request.

Holy crap, look at those margins. I think a price reduction to somewhere between $100 and $200 would be just right to make me consider a purchase.