Monday, August 24th 2015

AMD Radeon R9 Nano Nears Launch, 50% Higher Performance per Watt over Fury X

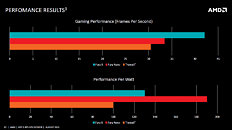

AMD's ultra-compact graphics card based on its "Fiji" silicon, the Radeon R9 Nano (or R9 Fury-Nano), is nearing its late-August/early-September launch. At its most recent "Hot Chips" presentation, AMD released more interesting numbers related to the card. To begin with, it lives up to the promise of being faster than the R9 290X at nearly half its power draw. The R9 Nano has 90% higher performance/Watt than the R9 290X. More importantly, it has about 50% higher performance/Watt than the company's current flagship single-GPU product, the Radeon R9 Fury X. With these performance figures, the R9 Nano will be targeted at compact gaming-PC builds capable of 1440p gaming.

Source:

Golem.de

106 Comments on AMD Radeon R9 Nano Nears Launch, 50% Higher Performance per Watt over Fury X

And, IMO, the better overclocks are coming from the better PCB designs, not necessarily anything to do with the GPUs themselves.

Either way, a little bit of patience and we'll see.

Also, I don't think better PCB designs are the only reason, because we had non-reference 290Xs and 290s that unfortunately didn't automatically yield higher clocks.

But I'd buy one; heck, I'd buy a quartet of Fury Xs if I could find them on the local market. I doubt that will happen soon, since the Fury X isn't even here yet.

Looking at the "refresh" of the 300 Series today as a pertinent adjustment, it's distressing that they didn't just release the 390 early (balanced against selling down the 290s), even if the 290 ($270) still needed a place in the product stack. Since all the refresh cards are putting decent pressure on the opposition in the market, getting them out early would've been advantageous, even if it meant having the 200 Series below them and the 300 Series above at the same point in time. In retrospect, that doesn't seem as problematic as not having anything to keep some usable PR on the front pages. Imagine the AMD 390 on the market in mid-April, touting 8 GB and vying with the 970 while its memory allocation was still a sore subject. Then the 390X showing up in mid-May, and finally the 380 about two weeks later.

A nice staggered release of new reviews leading up to several weeks before the Fury X, then the Fury three weeks after that, and now the Nano. All of that might have taken pressure off the drumbeat about Fiji/HBM. AMD could have been less "talkative" on that subject, sticking to "it's planned for a July release". AMD would almost assuredly have gotten more, and more favorable, reviews (AMD needs to provide more reviewer samples), but no, they chose "months" of silence and the mounting pressure to "do something". It all came apart at the seams as "where's Fiji/HBM?" overtook their own PR with crazy speculation in the forums, all while the "rebrand" tag got unwanted traction. If AMD had a glut of 200 Series inventory in the channel, that was unhealthy, but six months of silence and the web's speculation were just as life-threatening.

videocardz.com/57409/amd-radeon-r9-nano-confirmed-to-feature-4096-stream-cores

Check out Memory Express; they're one of the shops I visit on a near-weekly basis. Since they price-match any online retailer based in Canada, they've cornered the market for me: I get other shops' prices but never pay shipping fees, even when just buying thermal paste (which I buy monthly).

Read more here:

hardforum.com/showthread.php?t=1861353&page=4

There was another article on a tech site, not a forum, but I can't find it now...

News flash: AMD is the one that has been giving more value for money than nVidia. All GCN Radeons (but especially GCN 1.1 and newer) will tend to be MUCH faster in DX12 than they were in DX11, since GCN was designed for compute performance fed by multi-core CPUs running multi-threaded code. nVidia GPUs, including the current Maxwell, are designed to be fed by a single core in serial. In other words, all current nVidia GPUs are rendered obsolete by DX12, if you'll excuse the pun.

Don't believe me? Read this explanation by a retired AMD GPU engineer. This guy REALLY knows what he's talking about; he's no fanboy, he's an actual, bona fide expert. His posts (go ahead and read through the thread and be prepared to be blown away) should silence the idiotic nVidia fanboyism on here:

www.overclock.net/t/1569897/various-ashes-of-the-singularity-dx12-benchmarks/400#post_24321843

Both AMD and Nvidia are deceitful at times so I would rather wait until some DX12 games drop before deciding.

I made many AMD GPU purchases based on tech they touted as new, but not once did they actually deliver on it until many, many months later, if at all: Crossfire (broken cursor); Eyefinity (first it was Crossfire running Crysis on three Dell 30-inch screens, which never did work at native res, although they had live demos running at LANs, and when I was there they wouldn't let me see the back of the monitors or PC); frame-time problems (which I complained about for years before they acknowledged them, and only when they had ZERO choice); CTM (never made it to prime time, a precursor to DX12); Mantle (the new CTM, backed by better code, still not used by more than 10 or so games right now).

It's not that I favor AMD; it's that they fail to deliver. Whereas for me personally, with my own purchases, NVidia HAS delivered. NVidia touts features... and they work! What a novel idea!

Until AMD actually PROVES that what they hype before a launch is a true statement, it'll be hard for most anyone to take them seriously.

That same post about Ashes was also made here on TPU, yet you link to another site... Interesting.

While I understand what our AMD fellow is getting at, this is the WHOLE POINT. I could buy AMD now, have one or a few DX12 AAA titles come out in the next two years, and get better performance in those. OR I can buy a different card now that has better performance in the VAAAAAAAAAAAAAAST majority of games. Then, in two years, when more DX12 titles are on the market, I can look at who's top dog and make a purchase then.

So what? AMD seems to have higher performance in a game that was originally built using their own locked-down, proprietary API. That performance transferred over to DX12, where nvidia is starting from square one. AMD had, what, almost a year's head start working with it, whereas I doubt nvidia had more than a month or two. Please don't say nvidia had access to the source for a year; they've had access to the DX11 code, but DX12 is likely a very different story.

Last fact: this is ONE game in ALPHA stages. It doesn't mean very much.