Thursday, September 17th 2015

NVIDIA "Pascal" GPUs to be Built on 16 nm TSMC FinFET Node

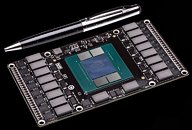

NVIDIA's next-generation GPUs, based on the company's "Pascal" architecture, will reportedly be built on the 16 nanometer FinFET node at TSMC, and not the previously reported 14 nm FinFET node at Samsung. Talks of a foundry partnership between NVIDIA and Samsung didn't succeed, and the GPU maker decided to revert to TSMC. The "Pascal" family of GPUs will see NVIDIA adopt HBM2 (high-bandwidth memory 2), with stacked DRAM chips sitting alongside the GPU die on a multi-chip module, similar to AMD's pioneering "Fiji" GPU. Rival AMD, on the other hand, could build its next-generation GCNxt GPUs on the 14 nm FinFET process being refined by GlobalFoundries.

Source: BusinessKorea

52 Comments on NVIDIA "Pascal" GPUs to be Built on 16 nm TSMC FinFET Node

Finally, a graphics card that does not need cooling or anything. I just wonder how I would connect that to my PC. :confused:

I'm still on the fence as to GloFo making AMD GPUs, but if they do I think we have a wild time ahead. This will mean less of the "me-too" that has been the GPU business for many years. I think perhaps this will shake up a lot of things: release dates, what markets are attacked first, and with what memory type. All the forum chatter will be a spinning torrent..:ohwell:.

Is TSMC holding the line on price (not like they did on 28nm) when one of their biggest customers (if not the biggest) talked of going elsewhere (Samsung), while perhaps another good customer has already moved new business out? Can TSMC's shareholders, seeing lower starts, stomach undercutting the scrappy GloFo, which is probably able to work with aggressive margins just to get traction, since anything they provide is a boon to the stock?

Could AMD move first in the $170-450 price range with, say, two different chips, each in XT/Pro versions, so four variants? The lower part would hold to GDDR5 and the higher part would be HBM2. But it all hinges on which foundry and how soon production starts. That's the segment where AMD is clawing back market share today, and with products that are rebrands. If they stay in control of the channel and hit with an XT mainstream card that gets up into 980 Ti territory, they'd ride a nice boon. That means they can let Fiji go EoL, which I think is a drain on them now, and come back with a replacement top part after a couple of months.

Meh, it just comes down to how they use it in the end.

IMO, the watercooling idea should be standard on these 650+ cards. The Titan X would have been even better with a water or hybrid cooler.

As far as HBM goes, it was a joint venture between Hynix and AMD. The intention is to use less power than DDR4, which in turn uses less than the power-hungry GDDR5. It also effectively increases bandwidth, removing memory access as a block on performance: electroiq.com/blog/2013/12/amd-and-hynix-announce-joint-development-of-hbm-memory-stacks/

AMD provided research and production assistance with the product, along with demonstration of the technology. The trade was sole access to HBM1 (realistically this was supposed to make Nano/Fury), and early/prime access to HBM2. It's a huge leap forward on power consumption (GDDR5 is functionally power-hungry DDR3), and it provides direct access memory. Both of these, theoretically, mean HBM should make VRAM-heavy applications run much better.

The "news" part of this story is those extra 2nm. AMD having those 2nm doesn't sound like a lot, but it means a 50% reduction in size rather than a 40%. That extra 10%, along with AMD ironing out the HBM issues with Fury and Nano, could spell a significant real world advantage with Arctic Islands. Be real here people, this is the closest to bashing Nvidia some people have ever come.
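For anyone who wants to check the 50% vs 40% figures, here is a rough sketch of the arithmetic. It assumes the baseline is the 28 nm node current GPUs are built on (the comment above doesn't state one) and treats the node names as literal lengths, which they are not; they are marketing labels, so take the output as ballpark only. The `linear_shrink` helper is a made-up name for illustration.

```python
def linear_shrink(old_nm: float, new_nm: float) -> float:
    """Fractional reduction in nominal feature size going from old_nm to new_nm."""
    return 1.0 - new_nm / old_nm


BASELINE_NM = 28.0  # assumption: today's GPUs are on a 28 nm node

for node in (14.0, 16.0):
    linear = linear_shrink(BASELINE_NM, node)
    # Area scales with the square of the linear dimension (idealized).
    area = 1.0 - (node / BASELINE_NM) ** 2
    print(f"{node:.0f} nm: {linear:.0%} linear reduction, {area:.0%} area reduction")
```

Under those assumptions the "50% rather than 40%" comparison lines up with the linear numbers; the idealized area numbers come out larger for both nodes.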

Edit:

Water cooling shouldn't be needed on a GPU. It should be an option, but not necessary. The point of HBM and die shrinking is exactly that. More transistors, but less voltage, means less overall heat. While I love AMD's offerings, the most recent batch of heaters they've put out are excessive. I want overclocking headroom, not a card that is already pushing thermal limits when I get it.

Intel's 14nm values for the three constituent parts (interconnect, gate pitch, gate length for those wondering) that make up a transistor are pretty well documented, as are SRAM area/volume; at least the minimums are.

...and the other two vendors (I'd assume that UMC won't be a factor in the GPU market) are also pretty well documented if you look around:

I suppose you could hold out hope for the terminally late-to-the-party folks at GloFo, but I suspect AMD and Nvidia don't want to have to wait a few years for that to happen.

btw

mhm

These VGAs connect to the Mac Pro using the header block on the far left of the bottom pic. The "PASCAL" shown by NVidia uses the same connector (you can catch a glimpse of it in the speech video, even).

Picture credits:

www.ifixit.com/Teardown/Mac+Pro+Late+2013+Teardown/20778#s56812

980 Ti is 8 billion.

The "architecture" difference in terms of efficiency is marginal at best and not even clear which company has the upper hand especially if you take the whole DX11/DX12 optimization into account.