Wednesday, December 23rd 2015

AMD "Fiji" Dual-GPU Graphics Card Delayed

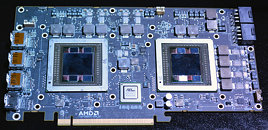

Originally expected to be unveiled and launched some time in December or January, AMD's upcoming flagship dual-GPU graphics card, based on its "Fiji" silicon, could now be scheduled for a Q2-2016 (April-June) launch. In AMD's E3 livecast, CEO Lisa Su stated that the dual-GPU "Fiji" product could launch as early as Christmas 2015. We now know that it isn't happening.

Responding to a question by Hardware.fr, AMD stated that it's pegging the launch of the dual-GPU "Fiji" card to commercial availability of HMDs (head-mounted displays), and a general sense of maturity in the VR ecosystem. AMD is now expecting HMDs to be well proliferated no sooner than Q2-2016, and is hence "adjusting the Fiji Gemini launch schedule to better align with the market," to "ensure the optimal VR experience." AMD did state that samples of the card were shipped to some of its B2B partners for internal testing in Q4-2015, and that their response has been "positive." Could this be AMD buying time to re-engineer a non-Cooler Master cooling solution?

Source:

Hardware.fr


74 Comments on AMD "Fiji" Dual-GPU Graphics Card Delayed

Titan is single GPU. GTX 690 / R9 295x2 is dual GPU in one card. Totally different ball game. Titan is the 'big chip' just like AMD's Hawaii.

1) VR isn't a normal usage of a GPU. VR is going to need to have a GPU pull data in, process it, add effects, and output it. This is way more than a regular GPU ever does.

2) Latency is going to be a killer for VR, so your GPU has to be badass and communicate directly with your I/O device, requiring a large bus and high bandwidth.

3) AMD and Nvidia aren't going to be making bigger chips, as there are foundry limitations.

4) If this card were going to be vaporware, they wouldn't just push the date. Your argument about the 690 isn't fully thought out, because you're not comparing things of equal merit. Let's try the same argument, but with an item targeted at one usage scenario. I'd suggest the Quadro and FirePro GPU lines for engineering: monstrously expensive, but they sell and exist because a niche market (just like VR) needs their unique features. This isn't a 690, because the 690 was targeted at a market that didn't need its shenanigans, which is why it failed.

When you stack all of this together you can see why a single card dual GPU option makes sense. Communication with I/O is done once, and the card itself can divide up data to process. Absolutely all of the data is processed on the card, making overhead for transferring the data minimal. You've got to have the dual GPU, because you've got an insane amount of calculations being done, such that thousands of smaller processors (stream, not the monster x86 cores of a CPU) are the only way to process all of the data, and output usable images in a timely fashion.

I would guess offhand that your earlier bandwidth comment was in reference to the earlier article here about PCI-e scaling for consumer GPU performance (www.techpowerup.com/reviews/Intel/Ivy_Bridge_PCI-Express_Scaling/24.html). While I don't dispute that article at all, it's worth noting the test conditions. They track FPS, which is only tangentially related to the ability of the cards to receive enormous amounts of data and synthesize it into something useful. You're using the wrong test to prove your point.

And yes, I could have been more precise by writing "replaced with Titan" instead of "renamed to Titan", but still... for Nvidia the "Titan <xxxxx>" name means the biggest and baddest GPUs, be they single-chip or dual-chip builds, which previously was the GTX x90's role.

I just edited because I remembered the ROG Mars, but that's another one of those odd releases (2x 760) with all the drawbacks and zero advantages versus stronger single cards.

edit: my bad that is 5am for ya.

Given the complexity of visual processing within the brain, and the fact that said processing is subconscious, even very mild discrepancies are prone to creating motion sickness. This is why something like adding a virtual nose in VR has increased the amount of time people are able to spend in VR before nausea is induced.

I might be barking up the wrong tree here, but that's how everything I've seen has been explained. This explanation jibes with the proposed plans I've seen, where the CPU is functionally not utilized in a lot of VR. The lack of processing cores, and the wider tolerance for imprecise calculations (in favor of low latency between input and output), is why a monstrous GPU with a ton of cores is what VR needs.

Again, this is my interpretation. If it is incorrect, I'd love to hear the situation explained better. If that is not the case, I don't see why a dual Fiji card is "specialized VR hardware," as AMD seems to want to state. I'd suggest that if a massive processor count isn't necessary, a 980 Ti has already proven more than capable of running buttery-smooth dual 1080p monitors, likely for far less money than the dual Fiji monsters that AMD is pushing here.

VR uses input from a user to generate a world which appears real, via the ability to "look around", and generates images which your perception is fooled into thinking are 3D via perceptual tricks. The latter part of that statement is easy; we've had it for decades. Printed pictures in books were "3D" by being slightly out of alignment for each eye. As soon as we could get high-resolution images, we theoretically could have had "3D" experiences.

The first part of VR is the tricky one. But realistically, that was solved a long time ago by video game makers. If you're a child of the late 80's and early 90's you probably went to an arcade and saw those cabinets with giant goggles. They rendered a "world" and allowed you to spin on one axis and shoot enemies (I think the one I remember was called Beachhead). That's technically VR, and it's 20+ years old. The reason it never caught on was price and limitations. It's easy to have a stationary player and generate a world, but VR is now branching out into rendering a believable world: one in which you can move about and see multiple sides of any object. Instead of rendering a surface, you've now got a body. That requires a heck of a lot of calculation. Viewer angle, distance, motion relative to the viewer, dynamic light sources, and dozens of other calculations make rendering even a cube difficult (let alone a complex body).

Put simply, I can run two monitors easily on hardware 4+ years old. VR isn't out now, so explain to me again how "easy" it is. Explain to me why you believe this is "simple," and I'll demonstrate exactly how ignorant you are of either history or reality. History is fun and all, but reality is clear: if we don't have VR now, there must be a reason. Whenever you've got a good answer to that challenge, we can talk.

Yes you can run easily 2 monitors on 4+ years hardware, BUT NOT AT STABLE 90+ FPS (NOT AVERAGE, BUT MINIMUM FPS has to be >= 90) at 2160×1200 to not make you nauseated or motion sick with stuttering and picture lag. And you don't want to play any games with minimum settings and bare naked polygons, but with actually good picture, right? Hardware that can run any current AAA games at Ultra settings and at minimum 90 fps IS NOT COMMON among people. You need at least some 980/980Ti for average games and much much more power for visually better games to do that. For example:

www.techspot.com/review/1006-the-witcher-3-benchmarks/page4.html

At ultra settings gtx 980 minimum fps = 28.

wccftech.com/witcher-3-initial-benchmarks/

At ultra settings Titan X minimum fps = 60 ... and this is NOT ENOUGH for good VR experience! To play Witcher 3 at Ultra settings in VR you need something like 2x 980Ti. (As a side note, that's exactly where this dual-GPU Fiji comes in handy, barely.)
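The average-versus-minimum frame rate distinction this comment relies on can be illustrated with a short Python sketch. The frame times below are hypothetical numbers chosen for illustration, not data from the linked benchmarks, and `average_fps`, `minimum_fps`, and `frames_over_budget` are illustrative helpers. The point is only that a run averaging just over 90 fps can still contain a frame that misses the roughly 11.1 ms budget a 90 Hz headset allows.

```python
# Hypothetical frame times in milliseconds (illustrative only, not benchmark data).
frame_times_ms = [9.0, 9.5, 10.0, 9.0, 25.0, 9.5, 10.0, 9.0, 9.5, 10.0]

def average_fps(times_ms):
    """Average FPS = frames rendered / total seconds elapsed."""
    return len(times_ms) / (sum(times_ms) / 1000.0)

def minimum_fps(times_ms):
    """Minimum (instantaneous) FPS is set by the single slowest frame."""
    return 1000.0 / max(times_ms)

def frames_over_budget(times_ms, refresh_hz):
    """Count frames that took longer than one refresh interval."""
    budget_ms = 1000.0 / refresh_hz
    return sum(1 for t in times_ms if t > budget_ms)

avg = average_fps(frame_times_ms)
low = minimum_fps(frame_times_ms)
late = frames_over_budget(frame_times_ms, 90)
# prints: average 90.5 fps, minimum 40.0 fps, 1 frame(s) over the 90 Hz budget
print(f"average {avg:.1f} fps, minimum {low:.1f} fps, {late} frame(s) over the 90 Hz budget")
```

A single 25 ms frame barely moves the average but drags the instantaneous minimum down to 40 fps, which is the kind of hitch a headset wearer would notice.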

Now look at what hardware people actually have:

store.steampowered.com/hwsurvey

Oh wait... from all Steam users:

NVIDIA GeForce GTX 980 0.89% +0.89%

980Ti and Titan X and Radeon R9 Fury / 300 series owners didn't even make it onto the list, because compared to everyone else they are close to 0%. That is THE main reason why we don't have VR among the masses today. And if you can't sell it to the masses for profit in the foreseeable future (like in 5 years), you are not going to invest any time/money in developing the entire infrastructure (totally new hardware, totally new software, totally new input devices, totally new everything) for some "add-on device" that people can't actually add to anything (a good enough computer) yet, right? Development started exactly when it seemed that average people could have the necessary good enough computer "soon enough" at an affordable price (that's still up for debate, but yeah).

Also 20 years ago, you didn't have those new nice 500+ dpi OLED display now, did you?

To make it possible today for less rich people, all the VR developers, NVIDIA, AMD, and software developers are working fervently on their APIs and stuff to optimize the way the picture is rendered for VR => to make a good enough picture where it matters and lower quality where it's less visible or entirely out of the FOV (you don't need a pixel-perfect image across the entire 2160x1200 picture; some areas are more important than others). That tech didn't exist 4+ years ago, because people were only just starting to work on VR again then... all the stuff (both hardware AND software) still needs work. And that's why we didn't have this 4+ years ago. Duh. But there is only so much you can optimize old hardware for better results. Ultimately you will need newer and better computers. I really hope Pascal or Arctic Islands will give us a good enough GPU (2x 980Ti power) as a single-card solution for VR for under 800€/$... only then will I really start thinking about it myself. Will this dual-GPU Fiji be priced near 800$? I highly doubt it.

You'll excuse me, but you've managed to demonstrate such a fundamentally skewed perspective that I'm not sure where to start. Let's tick the boxes off in order.

1) Prove anything. I'll wait about three seconds after you start calling your links proof before I ask you what assumptions you made out of the gate, and how you've built a very nice house of cards atop gelatin.

2) Why does VR need to be at 90 FPS? Link to any source which proves this.

3) Why do you have to have a specific resolution, and more importantly why this particular one? I'm betting you've got a specific piece of hardware in mind, but you've based arguments on vapor here.

4) What the hell is picture lag? I'm going to be brief here, but you've made up a term. Latency is the difference between input signal and output. Stuttering is a focus when the frame rate is not consistent. You've bastardized a bunch of terms to mean...something. I'm not even sure how you'd prove anything when you start from this disjointed of a point.

5) VR isn't playing the Witcher. Comparing the two is dumb, but I'll try to be tactful here. What exactly makes you believe the Witcher is a VR game? It's akin to believing that Solitaire and Chess are the same thing, because both of them are games.

6) Who required the visual settings be set at an arbitrary "Ultra" for VR? From what has been demonstrated nothing is nearly as complex as that. Maybe you should take this chance to look at the VR they've demonstrated to get a better grasp on the scope of the technology actually being brought forward.

7) Define VR concisely and precisely. I'm going to wait for a moment, because you have changed the definition every time you spoke. Let's just define this as the VR being brought forward now. It's fundamentally the same as the technology that was readily available in 2002, as proven here: www.arcade-museum.com/game_detail.php?game_id=12746 Would you care for another crack at defining how impossible VR is, if it was done more than a decade ago? Yes, it was lower fidelity, but VR is VR, as there exists no distinction of VR based on fidelity that is not arbitrary.

8) We're talking about Fiji x2. We don't care about the fact that you want VR to be both cheap, and magically perfect. What we're discussing is a card which is designed for the VR market, not some sort of magical machine which would allow you to buy a GPU from 10 years on, that meets your arbitrary definition for VR. How does your information relate to Fiji x2?

9) Can you ever stick to one standard? At one time you pull out arbitrary resolutions. Then you pull out arbitrary monitor statistics. 20 years ago a CRT was cutting edge VR. Today an OLED is cutting edge. Shockingly enough, technology gets better. This is as stupid as saying silent movies are all worse than modern movies, because they don't have sound.

10) Steam statistics. Could you have found a less relevant piece of information? VR doesn't exist in the consumer market, so unsurprisingly the statistics for a tangentially related product show that it doesn't exist. This is a stupid point for a number of reasons, but I'll keep driving home the fact that "by my arbitrary standards" is not an argument. I'd also like to point out that "it doesn't exist, therefore it doesn't exist" is a tautology. Either stick with "it can't exist" or "numbers disprove it."

I'm going to try to be generous one more time. Come to the discussion with an argument of defined scope, terminology defined, and facts based on reasonable logical deduction or facts. If you can do that, you can discuss a point. What you've got right now is an argument with "facts" built upon nothing. Anyone can provide Steam statistic links, and anybody can say X proves Y. A good argument has defined the interlink between X and Y, used related facts to prove out X, and is capable of defining when Y is reasonably accurate.

If you'd care for just one more diversion, let's show how science defines the scope of Y. At the macroscopic scale, Newton's laws of motion all apply and are accurate. As you shrink down to microscopic levels these rules no longer apply, but we don't throw out Newton's laws. What we do is define them, so that we know their boundaries. If I were you, I'd start by understanding how the VR headsets feed data back to the video card, define why specific resolutions and frame rates are a necessity (which may require brushing up on psychology and biology), and finally do a little research before trumpeting your conclusions. For the last bit I'd suggest a quick trip to the "Virtual Reality" page of Wikipedia, and a perusal of the International Arcade Museum page to see just how long we've actually had VR: www.arcade-museum.com/. If that doesn't work, search out the Sega Megadrive.

TL;DR:

Sega, Nintendo, various arcade cabinet manufacturers, and a plethora of other companies have had VR on the market since 1991 (at various times). To argue VR doesn't exist is monumentally backwards, and to believe that you can show statistics for unrelated items to prove that a thing that doesn't exist doesn't exist is just silly.

But oh well... I can ramble offtopic also... it's like if you were saying:

lilhasselhoffer: "We put a man on the moon in 1969. We have had serious space exploration missions all over the galaxy going on for 46 years and saying anything else is monumentally backwards, and to believe that you can show statistics for unrelated items to prove that a thing that doesn't exist doesn't exist is just silly."

Right? No! I will try to answer your questions soon when I have more time; meanwhile you could google something. Read articles and stuff about Oculus and Vive development: what their biggest challenges were and why things are the way they are.

2) It's not that 90 is the best solution ever. It's just the best the upcoming hardware is capable of. OFC a stable 144Hz would be way better when it becomes available. It's just that 90 is the point where most people get almost no motion sickness from picture judder (you know... a screen in your face makes 60Hz that much more noticeable than on your monitor or TV).

www.technobuffalo.com/2015/11/20/playstation-vr-framerate-90fps-60fps-minimum/

www.technobuffalo.com/wp-content/uploads/2015/11/PS-VR-Framerate.jpg

iq.intel.com/the-technical-challenges-of-virtual-reality/

Edit: People on the interwebs say that the Oculus DK1 ran at 60Hz and caused a lot of motion sickness; the DK2 runs at 75Hz and is much better.

A bit off-topic, since it's a PlayStation-only device: Sony's project "Morpheus", later renamed "PlayStation VR", has already surpassed this 90, and its display refresh rate is 120Hz. en.wikipedia.org/wiki/PlayStation_VR

Also read my 4th answer.

This 90fps thing is more or less common knowledge today if you have paid ANY attention at all to what's been going on for the past year. Also read this:

wccftech.com/nvidia-gameworks-vr-large-scale-vr-adoption/

3) Yes. Both the Oculus Rift and the HTC Vive come with this resolution. Both are made for PC and are usable with this topic's dual-GPU AMD card.

en.wikipedia.org/wiki/Oculus_rift

en.wikipedia.org/wiki/HTC_Vive

Yes, there are already different products in development too, but the early birds have this specific 2160x1200 (1080x1200 per eye) resolution.

4) You are trolling on semantics. Don't do that, it makes you look more silly. Lag, latency, the delay between when something is happening in the game and when it's displayed on the screen. For ideal VR this has to be as close to 0ms as possible. Once again for reducing motion sickness. (Also the input lag from your controllers and motion tracking has to be near 0).

Stuttering isn't even just from "nonconsistent frame rate" but when the display is in your face, you start noticing judder in perfectly streamed 60Hz because even on 60Hz the delay between every new picture is 1000/60 = 16.67ms. And this is really huge when the display is so close to your eyes. That is also why you need like 90Hz (11.11ms delay between images.) to make the image and motion smoother.
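The frame-interval arithmetic above (1000/60 and 1000/90) generalizes to any refresh rate. A minimal Python sketch covering the 60, 75, 90, and 120 Hz figures mentioned in this thread; `frame_interval_ms` is an illustrative helper. Note that it only computes the time between refreshes, i.e. how often the GPU must deliver a new frame, not the full input-to-display latency.

```python
# Time between frames (the per-frame rendering budget) at common refresh rates.
REFRESH_RATES_HZ = [60, 75, 90, 120]

def frame_interval_ms(refresh_hz):
    """Milliseconds between consecutive frames at the given refresh rate."""
    return 1000.0 / refresh_hz

for hz in REFRESH_RATES_HZ:
    # 60 Hz -> 16.67 ms, 75 Hz -> 13.33 ms, 90 Hz -> 11.11 ms, 120 Hz -> 8.33 ms
    print(f"{hz:>3} Hz -> {frame_interval_ms(hz):.2f} ms per frame")
```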

5) VR can be applied to ANY existing or new 3D game, if the developers (or pro modders) choose to do so, or if you use a 3rd-party program like VorpX ( riftinfo.com/go/vorpx-download ). Witcher 3 was just a random pick of a recent AAA 3D title; you can replace it with Project Cars, Batman: Arkham Knight, ARK: Survival Evolved, Mad Max, GTA V, or whatever really. Almost none of those run at a stable 90fps at max settings on mediocre old hardware. In some cases the "VR" part might be only the 3D image itself; in better cases it also includes head and motion tracking; and games with even better support also include input from the special VR controllers (whatever they might add). Check the sticks the guy has:

6) No one really. But most of us enjoy better visuals. On a normal monitor I'd rather have ultra settings and 30 fps than low settings and 90 fps, but then again, I don't play competitive CS:GO or the like either. Therefore I really don't want to reduce the pretty image settings I'm already used to when I start using VR. But sadly, in the case of VR, sacrificing fps for better quality isn't really an option, because of motion sickness and whatnot...

8) Most games' fps today depends mostly on GPU performance and very little on the CPU. 2x Fiji is a GPU => it relates. 2x Fiji relates to this topic as being "almost good enough, as a single-card solution, for the first generation of VR headsets" I spoke about in answer 3 (yes, yes, in your book it's something like the 20th generation, but for us it's still the first).

9) yes yes, dear troll, strap CRT to your face for all I care. Please post picture about it here also. :)

riftinfo.com/oculus-rift-specs-dk1-vs-dk2-comparison

I'm going to hold you to your exact words. It only seems fair. As such, let's look through the quotes.

1) "(NOT AVERAGE, BUT MINIMUM FPS has to be >= 90) at 2160×1200 to not make you nauseated or motion sick with stuttering and picture lag."

I'll offer the benefit of doubt, and give you the word soup here as "lag between inputs and visual output causes motion sickness." What I'm not willing to give you is that the minimum FPS has to be 90 and its resolution has to be 2160x1200. You've taken an arbitrary goal set by a company, and made that a requirement. The reason I've brought history into the argument is that it completely disproves your point.

2) "Hardware that can run any current AAA games at Ultra settings and at minimum 90 fps IS NOT COMMON among people. You need at least some 980/980Ti for average games and much much more power for visually better games to do that."

This is a patently idiotic statement. It's patently idiotic because absolutely none of the current VR systems are promising that you'll play the latest AAA releases on their devices. What is being promised is relatively good graphical fidelity, with the focus on interaction rather than fidelity. Outside developers are trying to port over AAA games, but they're experiments that may work, not a developer promised feature: www.roadtovr.com/oculus-rift-games-list/

3) "At ultra settings Titan X minimum fps = 60 ... and this is NOT ENOUGH for good VR experience!"

This is the arbitrary crap I'm talking about, in a nutshell. You say VR doesn't exist, because you need some arbitrary specification. I say that the technology has been done before. You dismiss the former technology because of limitations; I respond that when the games were released they matched the visual fidelity of other things around at that time. You argue VR doesn't exist because the hardware isn't there; I argue that if there was a real market for the product, the hardware would have evolved to suit the needs a long time ago. VR died because the costs were too high, and it's now seeing a resurgence because hardware costs are dropping. VR isn't magic, it's just getting a kick in the pants from cheaper hardware.

4) "Also 20 years ago, you didn't have those new nice 500+ dpi OLED display now, did you?"

That is an immensely off-topic and idiotic thing to ask. We didn't have them, and we didn't expect them. That's like looking back at this discussion in 10 years and asking why we didn't just play it on our PS6. You don't kill something because it doesn't meet arbitrary standards; you build the best compromise device possible with the technology available. You put that product on the market, and it performs based upon how people perceive it. In the 90's VR was a standard-definition screen, and we appreciated that because it was the cutting edge.

5) "yes yes, dear troll, strap CRT to your face for all I care. Please post picture about it here also. :)"

I see you're too lazy to check out any links, even the ones you post. If you'd read any of mine, you'd see that was exactly what was being done. I can't tell whether you're incapable of understanding that VR isn't new, or whether you have such a narrow definition of true VR that you just don't recognize that this technology is nearly identical to older versions. What I can say is that I was playing a SNES, and had a chance at one of the VR systems when I got to go to an arcade. The VR system there beat the pants off the SNES, but by today's standards both systems are an archaic mess. Moving the goalposts arbitrarily is a distinctly counterproductive way to make sure that "true" VR is not attainable until you want to call it that.

Let's tick the boxes on 90's VR hardware, versus 2016 hardware:

90's

CRT

Standard TV resolutions or less

24 Hz monitors (depending upon the console manufacturer)

Fixed axis, with 360 degree turning

Massive cost, and limited game availability

Nausea inducing if played too long

'16

LCD panel

HD resolutions (depending upon manufacturer, 1920x1080 doubled or similar)

Greater refresh rates (60-90 depending upon who manufactures and specifies the hardware)

Free along all three axes

Large, but more moderate cost

Nausea inducing if played too long

Seems to me like the only things changing on that list are monitor type, resolution, refresh rate, free axes, and cost. The first three aren't really issues, as they are simply the result of technological progress and different standards. The argument for increasing the free axes is better rendering and requiring three-dimensional worlds. That last item, cost, is the only reason we don't have a lot of VR. The costs to develop the systems are high, and people aren't willing to pay that for one game. It's a lot like the PS Vita. The technology was cutting edge, but Nintendo beat its sales by offering a lower price point. As the price point from Nintendo was lower, the hardware got into more hands; more hands meant a greater market potential, which allowed software to be supported. AMD is trying to be Nintendo here, releasing Fiji x2 in close proximity to Pascal and Arctic Islands not because of its power, but because it will be a proven and cheaper process making a specialized product for a niche market. You seem to want to think that Fiji x2 is special, but it isn't. Fiji x2 is just a low-cost way to get a bunch of fast processor cores onto the market to allow a niche market (VR) to do what it needs to do.

AMD wants to please the VR crowd because they are currently a money pinata. All the major players are getting behind VR headsets because the kickstarter for Oculus raised a ton of money. After Nintendo managed to print money with the Wii, everyone else is rushing to make sure whatever technology another company has they can mirror. In that mad rush AMD and Nvidia can both put out "VR focused hardware" at high margins, and cash in on the huge amount of money being thrown around to make sure nobody has a unique technology in the video game market. AMD pushing back the release date of Fiji x2 is suspicious (as the push back of Oculus was much earlier), but I'd chalk this up to poor communication with PR rather than your supposed release without a valid market.

So we're clear, that last little bit of a response was to the following quote by you: "mmmm... no they didn't, they renamed their segment into "titan" .... to make it sound cooler and sell more maybe? but is still ultimately pointless." Nvidia didn't rename the x90 cards to Titan; Titan is basically their highest-end silicon (read: HPC and the like) stripped down for gaming performance and sold at a premium. The x90 cards have historically (5xx to 9xx series) been dual-GPU monsters, designed to leverage cheaper consumer silicon, but doubled. The 690 series was less than great on sales because they charged an insane premium (while losing raw performance due to SLI overhead and requiring profiles). Consumers can't bear that premium, but specialized hardware (like VR) will gladly pay it to get their experimental hardware working better than anybody else's.

none of the motion tech or the screen tech made a usable headset viable until these past few years.

the wii is a great bit of kit to talk about here. it proved that people wanted more interactive ways to play. people want to be more involved in their games, more immersed in the worlds, and these next-gen headsets bring that in bucket loads.

to bring this back towards the topic :lol:

why would amd need to push a new gpu out when they already have great hardware that competes already?

290x is still beating all but the 980ti (which it matches in some titles), the furyx is leading the way on the bleeding edge, and nvidia still aint got pascal out. makes sense for them to keep their cards close to their chest for now. gives them time to fill the bins and know what they have to work with.

sidenote

i cant wait for vr :D

Nvidia suggests that the actual image rendering resolution is even bigger than 2160x1200 for those 2 (3024x1680) because it kinda needs to bend it around for VR lenses ( wccftech.com/nvidia-gameworks-vr-large-scale-vr-adoption/ ).
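To put the resolutions in this thread side by side, here is a rough Python sketch of raw pixel throughput (pixels per frame times frames per second). Two assumptions are worth stating: the 1920x1080 at 60 Hz monitor is just a baseline picked for comparison, and pixel count is only a crude proxy for GPU load, since the shading cost per pixel varies enormously between games. The 3024x1680 render target is the figure quoted from Nvidia above; `pixels_per_second` is an illustrative helper.

```python
# Pixels that must be produced per second for a given resolution and frame rate.
def pixels_per_second(width, height, fps):
    return width * height * fps

configs = {
    "1080p monitor @ 60 Hz (baseline)": (1920, 1080, 60),
    "Rift/Vive panel @ 90 Hz": (2160, 1200, 90),
    "Nvidia-suggested render target @ 90 Hz": (3024, 1680, 90),
}

baseline = pixels_per_second(*configs["1080p monitor @ 60 Hz (baseline)"])
for name, (w, h, fps) in configs.items():
    pps = pixels_per_second(w, h, fps)
    # Report megapixels per second and the ratio to the 1080p60 baseline.
    print(f"{name}: {pps / 1e6:.1f} Mpixel/s ({pps / baseline:.2f}x baseline)")
```

On these numbers the suggested render target is 1.4x the panel resolution on each axis, so about 1.96x the pixels per frame, and at 90 Hz it comes to roughly 3.7 times the pixel rate of a 1080p60 monitor.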

Also, there are other promising headsets in the works with different specs... but they usually aren't lower-specced... usually even better. Like Starbreeze, with its StarVR: 5120x1440, 90Hz. If you are going to build a PC for VR, you shouldn't aim for the minimum specs; give yourself some headroom. I myself am waiting for next-gen GPUs for that, because it would be a bit silly to build a rig for it this early.

So all in all, considering the relevant upcoming products, it is an actual requirement, not some "arbitrary goal", as you put it. At least it is for me when building a new computer for VR, and I believe for many others too. And if you are going to buy either of those headsets, I would highly recommend you be ready for those requirements (or "goals", in your case) as well. And if you don't, I honestly could not care less.

Actually, your statement is rather idiotic, because VR headsets don't promise anything: in the end they are just glorified head-mounted DISPLAYS, ffs. It's as if you were saying that none of the monitor companies, be it Dell or BenQ or Sony or Asus or Acer or whatever, say you'll play the latest AAA releases on their devices. OF COURSE you are going to play the latest AAA titles on their monitors, AND the same goes for the Oculus Rift or HTC Vive or whatever you end up buying, if you do. At least as long as it's a 3D game. Saying anything else is just utter stupidity. (Yes, some new titles obviously won't have 3D support at launch or whatever... they will get the patch eventually. Developer or 3rd-party hack, but it will be there!) This is even more likely because all of the prominent 3D engine developers have added, or are adding, VR support to their engines: CryEngine, Unreal Engine, Unity, Source Engine, Frostbite, etc. So it would already be built in, and it would be utterly stupid for game developers to disable this feature in their game for no reason at all.

I didn't say it doesn't exist at all (we all know there are already various VR dev kits out there, and people are actually developing games for and on existing devices); I said it doesn't exist among the masses, at the consumer level, YET. The masses have not adopted it YET. Do you have VR in your home? How many of your colleagues, friends, or family have it at home and use it? I sure don't know anyone around here who has ever had VR. On the second part I agree: the prices of tech are dropping somewhat, and that's why we are hopefully going to see VR soon.

And this was my last post on this topic. Peace and happy new year!

Let's make this clear, since you seem to have a fundamental misunderstanding. VR is not new. VR that consumers have access to is not new. It has been delivered to consumers by graphical hardware that makes the latest low end graphics hardware specifications look generations ahead of what they had. All of this is fact.

Oculus, Sony, and MS are all starting from artificial specifications. What they've done is started with a price point they believe consumers will pay for the hardware, and worked backwards to try and find which combination of hardware and software could be developed to meet those price points. The one exception to working backwards is the Oculus starting via kickstarter, and allowing people to bear the risk if the project came out half baked (spoilers: the development kits are half baked by the developer's own admissions, see your own comments above about the "final" hardware being capable of greater screen refresh speeds).

Why then do Sony and MS want to jump on the VR bandwagon? I explained it before, but it's the Wii: a seemingly bizarre little peripheral that kept the Wii selling out for months after launch, while the PS3 and Xbox 360 were slow to start. Sony and MS were late to the table, and their marketers are probably still blaming the failures of Kinect and Move on not getting to market fast enough. This is why, once Oculus demonstrated its success on Kickstarter, everyone else jumped aboard the VR bandwagon.

Let's finish off the discussion with a bit about specifications. I gather that you've never had a chance to experience VR, but correct me if I'm wrong. My experience with VR covers both the older 90s-style stuff and the development kits from Oculus. They share some very similar traits, which need to be touched on. First off, extended play leads to headaches and, in some cases, mild distortions in vision. I've never been able to go two rounds in a VR game from the 90s without experiencing that, and the Oculus does the same (although it takes a bit longer). If you don't buy my experience, then maybe a brief Google search for the keywords "drunk," "frat," and "rift" will show you how much the Oculus has had to overcome during development. Based on testing, Oculus has changed its specifications over time. You seem to be forgetting that the Rift started being distributed in 2012. People have literally been using the Rift for 3 years without needing Fiji or Fiji x2. The specifications changed, yet the old Rift sets are still going. Either you have to accept that the old sets are somehow not VR, or the new "requirements" aren't actually requirements. The new requirements are Oculus trying to work out lingering issues without actually admitting they exist. Which is it? You don't seem to want to listen, but I'll throw you a rope here. Developing technology changes over time, and as Oculus had time to test their technology, they realized that certain specifications would produce a better experience. This demonstrates the continuing development of a technology that first debuted in the early 90s. PR claims that these new things are required, but anybody capable of some research and critical thinking can see that the "requirements" are new and meant to address something else.

Let's get back on topic. Fiji x2 is an attempt at selling an otherwise finicky piece of technology (Crossfire) to a niche market where its unique features are less of a burden. It is a market where vendors can charge a lot of money for a "complete" experience in a box, and where a community has already developed and demonstrated that it is willing to pay a lot of money for its pursuits. This is exactly like model enthusiasts, who would pay $20 for a model railroad switch that costs $2 to produce. They are willing to be gouged because they want the 100% experience rather than the 95% experience. It's sad to say, but that's exactly what Fiji x2 is aiming for. It releases alongside the VR hardware, so those flush with cash walk into the store (or the e-tailer) and walk out with their Rift and a brand new GPU that will make it work. It'd be deplorable if this were a real GPU, but Fiji x2 isn't meant to be a GPU. Fiji x2 is meant to be a VR device, and is being sold as such. To miss how blatant this is, on the assumption that Fiji x2 might be for anything else, is to demonstrate a rather substantial lack of real-world experience. Heck, go onto Newegg and order a motherboard, and, surprisingly enough, they recommend whichever CPU has the highest profit margin as a complementary purchase at checkout. Fiji x2 is waiting to be that recommended additional purchase.

Edit:

I understand this comes after the discussion, but let's put a pretty bow on all of this: promotions.newegg.com/vga/15-6604/index.html?cm_sp=Homepage-Top2015-_-P2_vga%2f15-6604-_-http%3a%2f%2fpromotions.newegg.com%2fvga%2f15-6604%2f759x300.jpg&icid=344539. Nvidia puts out advertising for VR stating that VR requires 7x the graphics processing power of traditional 3D gaming. They do this with a small notation at the bottom, which reads that "traditional" gaming is 1920x1080 at a 30 Hz refresh, while VR has to be 1680x1512 at 90 Hz on two screens.
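The footnote's arithmetic can be checked with a quick back-of-the-envelope sketch. It assumes that "graphics processing power" scales simply with pixels rendered per second (width x height x refresh rate x number of screens), which ignores per-pixel shading cost and any extra render resolution headsets use for lens correction; the function name `pixel_rate` is purely illustrative.

```python
# Back-of-the-envelope check of the Nvidia VR ad footnote.
# Assumption: rendering cost is approximated as pixels drawn per second,
# i.e. width * height * refresh rate * number of screens.

def pixel_rate(width: int, height: int, refresh_hz: int, screens: int = 1) -> int:
    """Return the number of pixels rendered per second for a display setup."""
    return width * height * refresh_hz * screens

# "Traditional" 3D gaming as described in the footnote: one 1920x1080 screen at 30 Hz.
traditional = pixel_rate(1920, 1080, 30)

# VR as described in the footnote: two 1680x1512 screens at 90 Hz.
vr = pixel_rate(1680, 1512, 90, screens=2)

ratio = vr / traditional
print(f"traditional: {traditional:,} px/s")
print(f"VR:          {vr:,} px/s")
print(f"ratio:       {ratio:.2f}x")
```

Under that pixel-count assumption, the two setups from the footnote differ by about 7.35x (62,208,000 vs. 457,228,800 pixels per second), which appears to be where the rounded 7x figure comes from.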

If that doesn't encapsulate all of the BS going on, I don't know what will: a bold statement by some marketer that is entirely BS propaganda. By that logic, 4K resolutions should be possible with a single Fiji GPU, because 4K would be less than half of this theoretical 9k performance. That's the mathematics, but let's be real here. Who exactly is out there running a 4K monitor with a single Fiji card, and how much of a hit do their settings take? This is why VR is going for experience rather than graphical fidelity, and why VR is promising simple games, not The Witcher 3.

@Vayra86, I agree with you completely. Problem is, idiots deserve a chance to not be idiots. I don't believe anyone out there is completely informed about everything, so everyone has topics on which they are idiots. Arguing against an incorrect statement is largely thankless, but if someone walks away with a better understanding of reality, it's a win.

I had hoped that presenting enough history and facts would inspire the slightest bit of desire to demonstrate why I was wrong, and to show why Fiji x2 was anything other than a cash grab for VR. Unfortunately, I was wrong. I guess I lost this one, but maybe it helped somebody see just how old VR technology really is. Maybe it helped somebody recognize that VR is the next 3D television/movies (I do love that feature not being on any new TVs, while 2 years ago it was nearly mandatory), a trend that may turn out to be only a novelty in the long run. I can only hope.

FWIW, it was still a good read altogether :)