Wednesday, July 13th 2016

DOOM with Vulkan Renderer Significantly Faster on AMD GPUs

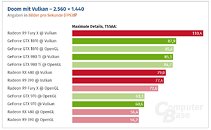

Over the weekend, Bethesda shipped the much-awaited update to "DOOM" that lets the game take advantage of the Vulkan API. A performance investigation by ComputerBase.de comparing the game's Vulkan renderer to its default OpenGL renderer reveals that Vulkan benefits AMD GPUs far more than NVIDIA ones. At 2560 x 1440, an AMD Radeon R9 Fury X running Vulkan is 25 percent faster than a GeForce GTX 1070 running Vulkan, whereas with both GPUs on the OpenGL renderer, the R9 Fury X is 15 percent slower than the GTX 1070. Vulkan increases the R9 Fury X's frame rates over OpenGL by a staggering 52 percent! Similar performance trends were noted at 1080p. Find the review in the link below.
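As a quick consistency check, the three percentages quoted above can be combined to see what they imply for the GTX 1070 itself. This assumes that "X percent faster/slower" is measured relative to the GTX 1070's frame rate, which is an interpretation and not stated in the summary. Here F and G stand for the Fury X and GTX 1070 frame rates, with subscripts V (Vulkan) and O (OpenGL):

```latex
\begin{aligned}
F_V &= 1.25\,G_V, \qquad F_O = 0.85\,G_O, \qquad F_V = 1.52\,F_O \\
\frac{G_V}{G_O} &= \frac{F_V / 1.25}{F_O / 0.85}
  = 1.52 \times \frac{0.85}{1.25}
  = 1.52 \times 0.68 \approx 1.034
\end{aligned}
```

Under that reading, the quoted figures put the GTX 1070's Vulkan gain at only about 3 percent, against 52 percent for the R9 Fury X, which is the asymmetry the headline describes.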

Source:

ComputerBase.de

200 Comments on DOOM with Vulkan Renderer Significantly Faster on AMD GPUs

Consoles should profit from this pretty well. No more downscaling.

Surely the API is using the compute more efficiently instead.

On that front, OcUK have Fury X cards on pre-order at £150 under normal pricing. I'm tempted to build a Skylake rig running a Fury X. But then I think, why sell so cheap unless there's a Fury X replacement incoming...

The FX 5000 and Radeon 9000 series were not adequate for DX9 gaming, despite supporting it. Nvidia's 8 series and ATI's HD 2000 series didn't have enough horsepower for DX10. And we have DX11 titles bringing cards to their knees today.

So my guess is, we'll need something better than Pascal/Polaris for proper DX12/Vulkan gaming.

So yes, Vulkan is lower level than OpenGL. Yet neither is a low-level programming language by any means ("low-level API" is actually an oxymoron). And no, hardware cannot be "low-level ready". Hardware is always accessed at a low level.

This is where AMD and nVidia differ most, IMO: AMD thinks that being as forward-thinking as possible is the way to go, while nVidia designs cards for games that are already out, with little regard for how the card will perform on APIs that are yet to be released.

Both ideas have merit, and which one you prefer depends mostly on how frequently you change hardware.

For example, World of Warcraft launched with Direct3D 7/8/9 modes (and OpenGL) to ensure a wide range of GPU support. The higher the version used, the better the effects but the bigger the performance hit, so some users manually set a lower version to boost their FPS. However, when Direct3D 11 support was added, it didn't bring any new effects, just increased FPS over Direct3D 9 thanks to higher efficiency.

If a developer designs their game to run at 60 FPS and look as good as possible on high-end hardware using OpenGL/Direct3D 11, then adds Vulkan/Direct3D 12 support, that will cause a big FPS bump. However, if they instead design their game to run at 60 FPS and look as good as possible on high-end hardware using Vulkan/Direct3D 12, then there will be no big FPS bump; there will just be a big effects/visual quality bump.

But I am guessing one of the main reasons the reference 480 is so cheap is that these are the low-binned yields GloFlo can spit out quickly, and its process isn't quite as mature as TSMC's slightly larger 16nm process.

In my experience, AMD cards age WAY, WAY better than Nvidia's. The only exception, IMO, is the power-hungry Fermi cards, but even that is only if you ignore the paltry amounts of VRAM on the high-end offerings (which is a big deal).

I know what you're going to say: "Oh, but AMD doesn't have tess..." Let me cut you off right there. AMD can run tessellation just fine (in fact, better than most Nvidia cards at this point) because they don't have to emulate hardware.

I will make another analogy. What you are saying is the equivalent of someone going, "This API is AMD biased because it allows the game to use 3GB of VRAM instead of just 2GB." A lot of people said this about their 680s when BF4 came out. Again, having larger textures isn't biased; it just allows the use of more hardware. If Nvidia users wanted Ultra textures, they should have bought a card with more VRAM, but don't worry, because you can simply turn the setting down. Nvidia cards have RAM, just not as much. You can't "turn down async"; you're better off just turning it off, because Nvidia doesn't have the hardware in any way.

I also hope W1z will include Vulkan DOOM in his reviews from now on.

1) That park has green apples and red apples. Don't be so naive as to think a company as large as Nvidia is 'out'.

2) On Vega: the GTX 1080 smokes everything, and it's only the 980 replacement. The GP100/102 chip is 'rumoured' to be 50% faster. That is Vega's competition.

Even without compute, Pascal's highly optimised and efficient core can run DX12 and Vulkan just fine. I'll wager you £10, through PayPal, that Vega won't beat the full Pascal chip.

If I lose, I'll be happy because it should start a price war. If I win, I'll be unhappy because Nvidia prices will reach the stratosphere.

Please support your tessellation claim. Last I checked, AMD is far inferior in it due to a serial tessellator. I could be wrong, though.

You seem to mistake me for a fanboy. I am not. NVIDIA lacks async hardware. There, I said it. This has nothing to do with optimizing for a platform, which is the basis of DX12. Again, you do not "make" a platform for DX12. You make your game for the platform. That's what "low level" means.