Monday, August 22nd 2016

PCI-Express 4.0 Pushes 16 GT/s per Lane, 300W Slot Power

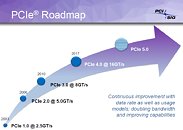

The PCI-Express gen 4.0 specification promises to deliver a huge leap in host bus bandwidth and power delivery for add-on cards. According to its latest draft, the specification prescribes a transfer rate of 16 GT/s per lane, double the 8 GT/s of the current PCI-Express gen 3.0 specification. At 16 GT/s per lane, that translates into 1.97 GB/s for x1 devices, 7.87 GB/s for x4, 15.75 GB/s for x8, and 31.5 GB/s for x16 devices.
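The per-link figures above can be reproduced with a short calculation. This is a minimal sketch, assuming the 128b/130b line encoding that PCIe gen 3.0 uses also applies at 16 GT/s, and counting one direction only; the function name and parameters are illustrative, not taken from the specification.

```python
def pcie_bandwidth_gb_per_s(gt_per_s: float, lanes: int,
                            payload_bits: int = 128,
                            encoded_bits: int = 130) -> float:
    """Approximate usable bandwidth of a PCIe link in GB/s, one direction.

    Assumes 128b/130b encoding: every 130 bits on the wire carry
    128 bits of payload. Protocol overhead above the encoding layer
    is ignored.
    """
    payload_gbit_per_lane = gt_per_s * payload_bits / encoded_bits
    return payload_gbit_per_lane / 8 * lanes  # 8 bits per byte


if __name__ == "__main__":
    for lanes in (1, 4, 8, 16):
        gb = pcie_bandwidth_gb_per_s(16, lanes)
        print(f"x{lanes}: {gb:.2f} GB/s")
```

The results land within rounding of the figures quoted in the article; passing `8` instead of `16` as the transfer rate gives the corresponding gen 3.0 numbers.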

More importantly, it prescribes a quadrupling of power delivery from the slot. A PCIe gen 4.0 slot should be able to deliver 300W of power (against 75W from PCIe gen 3.0 slots). This should eventually eliminate the need for additional power connectors on graphics cards that draw under 300W. The change could be gradual, however, as graphics card designers may want to retain additional power connectors for backwards compatibility with older PCIe slots. The PCI-SIG, the special interest group behind PCIe, said that it would finalize the gen 4.0 specification by the end of 2016.

Source: Tom's Hardware


75 Comments on PCI-Express 4.0 Pushes 16 GT/s per Lane, 300W Slot Power

either way cpu2 is having its power come across the board a decent distance and there are no ill effects.

Also, a single memory slot may not be much, but 64 of them is significant: 128-192 watts.

Plus 6x 75 watt PCIe slots. No matter how you slice it, server boards have significant current running across them.

Having the same on enthusiast builds won't suddenly cause components to degrade. After all, some of these are $300-500 US.

I'm mainly in a Dell shop, and those only have one 8-pin for dual CPU and two for quad CPU. Again, the dual CPU board only has the 8-pin by CPU 1, and the quad has them next to the mainboard connector. Granted, even with the independent CPU power in that mobo, a significant amount is passing through it.

Older Tyan boards from the Opteron days (or at least the days they were relevant) have them next to CPU 1 and 3 but not 2 and 4. Most of the time CPU 2 is getting its power from CPU 1, so the whole distance between them should be subject to degradation, and it isn't. The boards can handle the extra power and the manufacturers are smart enough to route it properly.

Having the connector next to the socket doesn't make it the PWM section. Most boards will split the 8-pin into 4+4 for each CPU (if there is only a single connector between them); boards like this are typically set up with one EPS per CPU, and the additional 4-pin supplies the memory.

this would be the Tyan socket 940 opteron board you are talking about by the way.

On a more modern note, we have Supermicro's X10DGQ (used in Supermicro's 1028GQ):

There are 4 black PCIe 8-pin connections, one near the PSU (top left), and the remaining 3 near the x16 riser slots, 2 of them all the way at the front together with 3 riser slots. The white 4-pins are used for fans and HDD connections. These boards are out there, in production and apparently working quite well, if a bit warm for the 4th rear-mounted GPU (the other 3 are front-mounted). That particular board has over 1600W going through it when fully loaded, and it is about the size of a good E-ATX board, so relax, and hope we can get 300W slots consumer-side as well as server-side and make us some very neat-looking, easy-to-upgrade builds.

At 12V and 25A per slot though, the Vdroop is much less of an issue. On top of that, most cards have their own VRMs anyways to get their own supply of precisely-regulated barely above 1V power, so a bit of Vdroop won't be that much of an issue.
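The 25A figure follows from I = P / V. A minimal sketch, assuming (as the comment does) that the whole slot budget is delivered on the 12V rail; the function name is illustrative.

```python
def slot_current_amps(power_w: float, rail_v: float = 12.0) -> float:
    """Current needed to deliver power_w watts on a single rail (I = P / V).

    Simplification: treats the whole slot budget as drawn from one
    12V rail and ignores any power supplied on other rails.
    """
    if rail_v <= 0:
        raise ValueError("rail voltage must be positive")
    return power_w / rail_v


if __name__ == "__main__":
    for watts in (75, 300):
        print(f"{watts}W at 12V: {slot_current_amps(watts):.2f} A")
```

Under that assumption a 300W slot carries four times the current of a 75W one, which is why trace resistance and Vdroop come up in the discussion.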

I think it will be a neat and proper design, leaving many cases with video cards that need no cables from the power supply. Similar to putting 1 x 4 or 8 pins for your CPU and 1 x 4 or 8 pins for your GPU.

Right now similar motherboards share their 12V CPU supply with the memory and PCI-Express slots as well (75W configuration).

If that's how you answer honest questions. I'd prefer you not answer mine.. ty..

Or maybe you'd like to ask me about building standards or Troxler nuclear gauge testing and I can call you silly?

The 75 Watt limit is a physical limit set by the number of pins available for power and the power capacity of each pin. Increasing it would mean a slot redesign or pin reassignment for power delivery (which makes backward/forward compatibility more difficult).

The four inductors near each of the connectors also suggest that this power is most likely used locally (though 4 phases for 4 RAM slots is overkill, maybe Zen got multi-rail power, who knows).

Asus hasn't been able to do a dual GPU card for a while now, probably due to the PCIe official spec limiting to 300W absolute max.

If the PCIe Spec is increased to 450+W, then the Dual GPU cards get the blessings of PCI-SIG.

In serverland, on the other hand, NVLink is making inroads with its simple mezzanine connector design carrying both all the power and the I/O, and is moving into the 8-GPU-per-board segment starting with the DGX-1, not to mention all the benefits of having a dedicated, high-speed mesh for inter-GPU communications, in turn relegating PCIe to CPU communications only.

As you can observe: not a single PCIe power connector on the NVLink "daughterboard" - all the power goes through the board. All 2400W of it (and then some for the 4 PCIe slots).

The rest of the industry wants in on this neatness with PCIe, and by the sounds of it, they're willing to do it.

Desktop, no.

There is no reason for it, and no need for it.

Your example is yet another server tech, that won't be coming to Enthusiast desktop (NVLink).

The DGX-1 is fully built by Nvidia, there are no "common" parts in it at all, so it can all be proprietary... (mobo, NVLink board, etc...)

i can't even see the power connectors on the NVLink board, so they could be using any number of connectors on the left there, or on the other side of the board...

Edit: I see someone already mentioned this