Monday, August 22nd 2016

PCI-Express 4.0 Pushes 16 GT/s per Lane, 300W Slot Power

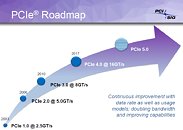

The PCI-Express gen 4.0 specification promises to deliver a huge leap in host bus bandwidth and power-delivery for add-on cards. According to its latest draft, the specification prescribes a transfer rate of 16 GT/s per lane, double the 8 GT/s of the current PCI-Express gen 3.0 specification. The 16 GT/s per lane rate translates into 1.97 GB/s for x1 devices, 7.87 GB/s for x4, 15.75 GB/s for x8, and 31.5 GB/s for x16 devices.
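The per-link figures above can be reproduced with a short calculation. This is a minimal sketch, not something from the draft itself: it assumes the 128b/130b line encoding that PCIe 3.0 already uses (the article does not mention encoding), counts one direction only, and uses decimal gigabytes. The function name `pcie_bandwidth_gb_per_s` is made up for illustration.

```python
# Assumption (not stated in the article): 128b/130b line encoding,
# as used by PCIe 3.0, so 128 of every 130 transferred bits are payload.
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gb_per_s(gt_per_s: float, lanes: int) -> float:
    """Approximate usable one-direction bandwidth in GB/s (decimal)."""
    # One transfer carries one bit per lane.
    payload_bits_per_s = gt_per_s * 1e9 * ENCODING_EFFICIENCY * lanes
    return payload_bits_per_s / 8 / 1e9


if __name__ == "__main__":
    for lanes in (1, 4, 8, 16):
        print(f"x{lanes}: {pcie_bandwidth_gb_per_s(16, lanes):.3f} GB/s")
```

Under these assumptions the x4 result comes out just under 7.88 GB/s, so the 7.87 GB/s quoted above looks truncated rather than rounded.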

More importantly, it prescribes a quadrupling of power-delivery from the slot. A PCIe gen 4.0 slot should be able to deliver 300W of power (against 75W from PCIe gen 3.0 slots). This should eventually eliminate the need for additional power connectors on graphics cards with power-draw under 300W. The change could be gradual, however, as graphics card designers may want to retain backwards-compatibility with older PCIe slots and keep the additional power connectors. The PCI-SIG, the special interest group behind PCIe, said that it would finalize the gen 4.0 specification by the end of 2016.

Source: Tom's Hardware

75 Comments on PCI-Express 4.0 Pushes 16 GT/s per Lane, 300W Slot Power

when you can just run it through a plug, that has already accounted for the additional power flow and been tested to handle it for years on end?

Does this at all translate into better GPU performance?

They could set it a little lower, like 200W, to encourage better power efficiency.

You will never get 300W through the slot.

I think he was saying that the official power allowed for a single card will go up, from a minimum of 300W.

i.e. we will be seeing 300W+ cards, officially, and with PCI-SIG blessings.

They will still have 8-pin or 6-pin connectors though, just more of them.

I bet new motherboards are going to implement at least 8 phases of VRM just for the 1st x16 slot.

The mainboards will handle it just fine. 4-socket server boards push much more power than that to all 4 CPUs, all 64 memory slots, and all 6 PCIe slots. There will be no degradation; that's just a silly line of thinking. Again, servers have been pushing this amount of power for decades, and the video cards are already receiving that amount of power. Changing the how isn't going to suddenly degrade them faster.

What this will do is bring enthusiasts into the modern era and get away from cable redundancies. They really should have done this 10 years ago.

they don't have it wandering throughout the motherboard.

Any PCIe "add-on" connector is always right next to the slots.

They won't be running 300W to a single x16 slot, or to every x16 slot, through the motherboard.

Or they can just make the cards so they can detect a PCI-E 4.0 slot and pull all their power from it, or if the card detects a 3.0 or lower slot it requires the 6 or 8-pin external power connector to be installed. So the card has an external power connector, but you don't have to use it if you plug the card into a PCI-E 4.0 slot.

I'm more interested in how they are going to get the small pins in the slot itself to carry that much current.

Think about where the so-called chipsets would be in a year to three from now.

huh? got it? yeah.

These days, we're pushing well over 3000W through smaller surface areas, like the 3000W PSU in a Dell M1000e (60mm fan). (For those wondering, that PSU only carries power over the 4 thick connectors: 2 +12V, 2 GND. 1/10th of that is about the same amount of area that the PCIe power pins have combined.)

Another example would be MXM modules, where the big 1080 and its 180W of power are supplied through less than half the area available in a PCIe slot, or even crazier, the GK110 chips used in the TITAN supercomputer's MXM modules pushing over 200W over the MXM 3.0 edge-connector.

In conclusion: the 300W will go over the existing pins, perfectly reliably and safely. At most they'll replace a few of the currently unused/reserved/ground pins with +12V pins.

External cabling has been around since the Tesla generation of GPUs (GeForce 8800 series), particularly used in external Tesla GPU boxes that you'd attach to beefy 1U dual-CPU boxes or workstations with limited GPU space.

In external situations, you only do I/O to the main box, with the external box providing its own power for the cards. Nice and easy, and a massive compatibility improvement.

Check out an NVLink Pascal server sometime. There are no cables in most of them, just big edge-connectors to carry huge currents over, or really short power cables to jump from the CPU end to the PCIe/NVLink daughterboard.

Tell me about it... Hell, I'm annoyed that all the higher-end cases faff around with 3 billion fans and LEDs everywhere and yet not a single one of them is willing to put out tool-less, hot-swappable drive cages as a standard feature... Even the massive 900D only does it for 3 of its 9 bays.. fucking WHY?!

Clearly, yes, else NVLink simply wouldn't exist. In HPC land at least. For home use, 3.0 x8 is plenty for now.

Cards are pulling 300W right now, so there's no point in setting it any lower. Unless you want massive backlash from AMD, nVidia and Intel.. owait, all 3 are major members of the PCI-SIG, and essentially the driving force of the high-power end.

Unlikely. There's been a lot of bitching already from the server (and some desktop) people that cabling is a mess for PCIe add-in cards. In 1U form factors, depending on the location of the connector and which bit of the chassis fan a passive card gets, the cabling cuts out 1/6-1/3 of the airflow over the card, resulting in a hotter running card needing to be throttled down.

Let's not even get into top-mounted power like most consumer cards.. those instantly drop you from 4 GPU to 3 GPU in riser-equipped 1U, and out of 3U and into 4U for vertically mounted, non-riser setups, and that in turn means 25% more cost in terms of CPUs, mobos, chassis, racks, extra power for the extra systems, etc. Top-mounted power is shit, and it's a damn shame it's the common setup now.

They already do in 4-GPU 1U servers like Dell's C4130 (1200 W goes from the rear PSUs, through the mobo and then out some 8-pin cables at the other end of the mobo into the 4 GPUs) or Supermicro's 1028GQ-TRT.

On the big blade systems, they move even more power through the backplanes to the PSU (think in the 9000W over 10U range, right next to 100 Gbit network interfaces).

Server boards and NVLink are not the things of us mere enthusiast mortals.

They can have stuff that we can't because they will pay through the nose for it.

I still believe that this is a misunderstanding, and it's more than 300W per-slot (card), rather than through-the-slot.

edit : I think they are currently stuffed for dual GPU cards, and would both like to do them, and Intel with KNL successors (maybe).

At least they would have the option to go mad :)

edit2 : If they really wanted power thru a slot for the card, it would be much easier to go the E-ISA way, and add a connector off the back end of the card for that, leaving the current slot untouched, and fully compliant.

I'm personally not too worried about the safety of pushing 300W through the PCIe slot: 300W is only 25A at 12V. Compare that to the literal hundreds of amps (150+W at less than 1V) CPUs have to be fed over very similar surface areas.
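For anyone who wants to check the arithmetic in that comparison, here is a quick sketch using I = P / V. The 0.9V CPU core voltage is only an assumed stand-in for "less than 1V", and `current_amps` is a made-up helper name.

```python
def current_amps(power_watts: float, voltage_volts: float) -> float:
    """Current drawn by a load, from I = P / V."""
    return power_watts / voltage_volts


# Slot case from the comment: 300W on the 12V rail.
slot_current = current_amps(300, 12)

# CPU case: 150W at an assumed 0.9V core voltage (illustrative value only).
cpu_current = current_amps(150, 0.9)

if __name__ == "__main__":
    print(f"PCIe slot: {slot_current:.1f} A")
    print(f"CPU core:  {cpu_current:.1f} A")
```

The slot case works out to the 25A quoted above, and the CPU case lands well above 150A for any voltage under 1V.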

I still wouldn't see that trickle down to enthusiast immediately, even if it were the case.

I could see the server people paying for a high-layer, high-power mobo, for specific cards, not necessarily GPUs.

I just don't see the need to change the norm for desktops.

Two 1080s on one card will take you over the PCIe spec.

I think this is just what the AIBs/constructors/AMD/Nvidia etc. and the others want.