Monday, April 3rd 2017

Rumored Intel Kaby Lake-G Series: Modular, Multi-Die, HBM 2, AMD Graphics IP?

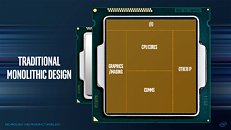

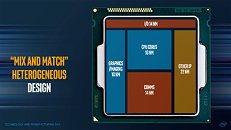

Rumors have been making the rounds about an as-yet-unannounced product from Intel: a Kaby Lake-G series that would mark Intel's return to multi-chip modules in a single package. The company has played with such a design before in its Clarkdale family of processors, which married a 32 nm CPU with a 45 nm GPU and memory controller in a single package. Kaby Lake-G will reportedly do away with that simple, low-data-rate link between the two parts, relying instead on Intel's EMIB (Embedded Multi-die Interconnect Bridge), which the company claims is a "more elegant interconnect for a more civilized age."

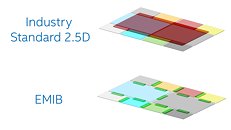

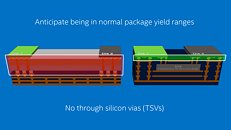

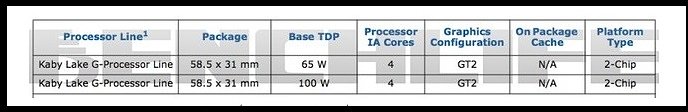

Instead of the large silicon interposer typically used in other 2.5D approaches (as AMD did when pairing its Fiji die with HBM memory), EMIB uses a very small bridge die with multiple routing layers, which provides dense data paths between the interconnected, heterogeneous dies at a reasonable cost. It also avoids the costly TSVs (through-silicon vias) that dot the interposer approach.

For now, rumors peg Kaby Lake-G as special BGA processors based on Kaby Lake, with an additional discrete GPU on the package. The TDPs of these processors (65 W and 100 W) are well above the 45 W of Kaby Lake-H, which raises the question: what exactly is under the hood? Intel's modular approach to chip design, for which it developed EMIB, suggests an answer: the extra power budget could go to an AMD graphics chip, a discrete-class GPU integrated on the package, with EMIB's routing layers handling data exchange between the GPU and the processor. This is also where HBM 2 would naturally come in, as a way to keep a considerable amount of high-speed memory inside the package, accessible to whichever dies need it. Nothing in the leaked information points towards HBM 2 integration, however.

Besides the TDP, the "AMD Radeon IP integration" story is also supported by package size: the two Kaby Lake-G chips will reportedly measure 58.5 x 31 mm, bigger than both the desktop Kaby Lake-S (37.5 x 37.5 mm) and the Kaby Lake-H series (42 x 28 mm). The extra space would accommodate the larger footprint of a GPU, though for now the leaked information, again, points only to Intel's own GT2 graphics; BenchLife, however, seems to put much stock in the AMD side of the equation.

This heterogeneous, modular approach would benefit Intel in several ways. It would let Intel integrate external graphics solutions that could be produced in entirely different factories and then fitted onto the package; it would let Intel keep its 10 nm dies small and dedicated to actual cores, improving yields from its 10 nm process; and it would let Intel put older processes to work on new logic inside the CPU package, spreading production load across different processes and extracting more value from its not-so-state-of-the-art nodes.
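For a rough sense of scale, the figures quoted above can be turned into some back-of-the-envelope arithmetic. This is a minimal sketch of our own, not anything from BenchLife or Intel: it computes package footprints from the reported dimensions, the power left over if the CPU portion drew as much as a 45 W Kaby Lake-H part (an assumption, not a leaked figure), and, to illustrate why smaller dies help yields, the textbook Poisson yield model Y = exp(-A * D0). The defect density and die areas used in that last part are hypothetical values chosen purely for illustration, not Intel 10 nm data.

```python
import math

# Package dimensions in millimetres: the rumored Kaby Lake-G figure from the leak,
# plus the shipping Kaby Lake-S and Kaby Lake-H packages it is compared against.
PACKAGES = {
    "Kaby Lake-G (rumored)": (58.5, 31.0),
    "Kaby Lake-S": (37.5, 37.5),
    "Kaby Lake-H": (42.0, 28.0),
}

KBL_H_TDP_W = 45           # Kaby Lake-H TDP ceiling
KBL_G_TDPS_W = (65, 100)   # rumored Kaby Lake-G TDPs


def package_area_mm2(width_mm: float, height_mm: float) -> float:
    """Footprint of a rectangular package in square millimetres."""
    return width_mm * height_mm


def tdp_headroom_w(tdp_w: float, baseline_w: float = KBL_H_TDP_W) -> float:
    """Power budget above the baseline.

    Assumption: the CPU die draws as much as a 45 W Kaby Lake-H chip, so
    anything above that would be available to extra on-package silicon.
    """
    return tdp_w - baseline_w


def poisson_yield(die_area_mm2: float, defects_per_mm2: float) -> float:
    """Fraction of defect-free dies under the simple Poisson model Y = exp(-A * D0)."""
    return math.exp(-die_area_mm2 * defects_per_mm2)


if __name__ == "__main__":
    for name, (w, h) in PACKAGES.items():
        print(f"{name}: {package_area_mm2(w, h):.1f} mm^2")
    for tdp in KBL_G_TDPS_W:
        print(f"{tdp} W part: {tdp_headroom_w(tdp)} W above Kaby Lake-H")
    # Hypothetical values only: 0.002 defects/mm^2 and two made-up die sizes.
    d0 = 0.002
    for area in (150.0, 100.0):
        print(f"{area:.0f} mm^2 die: {poisson_yield(area, d0):.1%} modeled yield")
```

Under these assumptions the rumored package is roughly 29% larger than Kaby Lake-S and about 54% larger than Kaby Lake-H, the two TDPs leave 20 W and 55 W beyond a Kaby Lake-H-class CPU, and shrinking the hypothetical die from 150 mm² to 100 mm² raises its modeled yield from about 74% to about 82%. Real yield models are more elaborate, but the direction of the effect is the point.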

If Intel moves ahead with this modular approach, we stand to see some really interesting designs, with multiple manufacturing processes working in tandem inside a single package, giving Intel more flexibility in developing and implementing its fabrication processes. What do you think about this take on CPU development?

Sources:

BenchLife, Computerbase.de

32 Comments on Rumored Intel Kaby Lake-G Series: Modular, Multi-Die, HBM 2, AMD Graphics IP?

A strong Nvidia can have a devastating effect on Intel's future: by promoting the ARM platform everywhere together with the other companies making ARM processors and, eventually, in a future where AMD is irrelevant in the GPU business, locking its GPUs to the ARM platform, it could make that platform the de facto gaming platform. By also promoting the GPU as the most important component in a system, it would leave Intel's future far from guaranteed. Not to mention that Nvidia is a strong competitor in the autonomous-driving market.

Anyway, Intel should be pretty capable of making a decent iGPU. Iris Pro has shown us that.

It's hard to build a building when somebody else possesses all the land to build on.

And they actually tried building a GPU based on reduced-instruction Pentium 4 cores.

They stuck something like 70-80 cores together, ran their benchmarks, found they were waaay behind both AMD and Nvidia, and decided to call it a day.