Thursday, September 14th 2017

AMD Raven Ridge Ryzen 5 2500U with Vega Graphics APU Geekbench Scores Surface

A Geekbench page has just surfaced for AMD's upcoming Raven Ridge APUs, which bring both Vega graphics and Ryzen CPU cores to AMD's old "the future is Fusion" mantra. The APU in question is being tagged as AMD's Raven Ridge-based Ryzen 5 2500U, which leverages 4 Zen cores and 8 threads (via SMT) running at 2.0 GHz with AMD's Vega graphics.

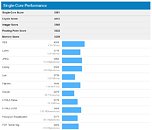

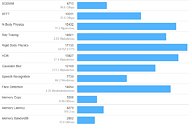

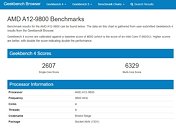

According to Geekbench, the Ryzen APU scores 3,561 points in the single-core score, and 9,421 points in the multi-core score. Compared to AMD's A12-9800, which also leverages 4 cores (albeit being limited to 4 threads) running at almost double the frequency of this Ryzen 5 2500U (3.8 GHz vs the Ryzen's 2 GHz), that's 36% better single-core performance and 48% better multi-core performance. These results are really fantastic, and just show how much AMD has managed to improve their CPU (and in this case, APU) design over their Bulldozer-based iterations.
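The article does not list the A12-9800's own scores, so the percentages above can only be sanity-checked by working backwards. The sketch below is a rough illustration, not a measurement: it derives the baseline scores implied by the quoted 36% and 48% gains, then normalizes the single-core figures by the quoted clock speeds. The function names are hypothetical, and the per-GHz figure assumes both chips actually ran at the listed clocks during the benchmark, which Geekbench listings do not guarantee.

```python
# Back-of-the-envelope check of the figures quoted in the article.
# The A12-9800 scores are NOT in the source; they are implied values
# derived from the quoted percentage gains (an assumption of this sketch).

RYZEN_SINGLE = 3561      # Geekbench single-core score quoted for the Ryzen 5 2500U
RYZEN_MULTI = 9421       # Geekbench multi-core score quoted for the Ryzen 5 2500U
SINGLE_GAIN_PCT = 36     # quoted single-core gain over the A12-9800
MULTI_GAIN_PCT = 48      # quoted multi-core gain over the A12-9800
RYZEN_GHZ = 2.0          # quoted clock for the Ryzen 5 2500U
A12_GHZ = 3.8            # quoted clock for the A12-9800


def implied_baseline(new_score, gain_pct):
    """Score the older chip must have had for new_score to be gain_pct percent higher."""
    return new_score / (1 + gain_pct / 100)


def points_per_ghz(score, clock_ghz):
    """Naive per-clock normalization; ignores boost behaviour and memory effects."""
    return score / clock_ghz


a12_single = implied_baseline(RYZEN_SINGLE, SINGLE_GAIN_PCT)
a12_multi = implied_baseline(RYZEN_MULTI, MULTI_GAIN_PCT)

# How much more single-core work per GHz the Ryzen part does, under the
# assumption that the quoted clocks were the clocks actually sustained.
per_clock_ratio = (
    points_per_ghz(RYZEN_SINGLE, RYZEN_GHZ) / points_per_ghz(a12_single, A12_GHZ)
)

print(f"Implied A12-9800 single-core score: {a12_single:.0f}")
print(f"Implied A12-9800 multi-core score:  {a12_multi:.0f}")
print(f"Single-core points per GHz, Ryzen vs A12: {per_clock_ratio:.2f}x")
```

Under these assumptions the implied A12-9800 scores come out at roughly 2,618 and 6,366 points, and the Ryzen part does about 2.6 times the single-core work per GHz. A mobile part is likely to boost above its listed 2.0 GHz, so the real per-clock gap is probably smaller.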

Source: Guru3D

54 Comments on AMD Raven Ridge Ryzen 5 2500U with Vega Graphics APU Geekbench Scores Surface

I believe APU performance could be improved even further

but the GPU cluster has to be able to fit on the silicon, so it makes sense to discard L3

The desktop Ryzen chip is around 192 mm² in die size, and this will have half the cores and half the L3 cache? Or will AMD skip that, as they always have on APUs?

Also, is this the same Zen core revision, or is it slightly improved, as APUs were in the past (half a generation/revision ahead)?

Also, will AMD scrap their old heterogeneous APU interconnect now that they have Infinity Fabric? Or is Infinity Fabric the final result of all the experience and development from HSA? I'm certain Infinity Fabric will be incorporated, as both Vega and Zen are compatible with it, but how does that go along with HSA?

In the past the whole HSA interconnect seemed to take massive die space that could've been way more useful to end users had it been used for GPU cache/memory, so I am hoping AMD made the right choices this time around

I do think they will do L3, but likely cut to something like 3 MB...

I'm more excited about these than I am about the HEDT lines.

Also, saying a GPU in a laptop is useless because your phone or tablet can play games too is ... I don't understand your point actually. What is your point? Can you play Fallout New Vegas on your phone? Can you play WoW or Overwatch on your phone? What about Dota? And I honestly don't believe a phone GPU is as powerful as a high end Iris GPU.

This is the equivalent of an i5 on regular laptops, except that the IGP should be much faster. There are some in Geekbench you can look up ~

browser.geekbench.com/v4/compute/search?utf8=✓&q=2500u

It's comparable to some of the previous gen Iris parts ~

browser.geekbench.com/v4/compute/search?utf8=✓&q=Iris+graphics

As for the GPU, assuming it won't have IF seems odd to me. IF is integral to the design of Ryzen - its PCIe lanes double as IF lanes, after all. Why on earth would they disable this and tack on an older GPU interconnect? That doesn't seem to make sense. Or are you saying that it would be cheaper/easier to entirely redesign the PCIe part of RR compared to Ryzen, to exclude IF? Again: that seems highly unlikely. For me, the only question is how wide the IF bus between CPU and GPU will be - will they go balls-to-the-wall, or tone it down to reduce power consumption? IF is supposedly very power efficient, so it could still theoretically be a very wide and fast bus.

Another question: as RR has Vega graphics, does it have a regular memory controller, or an HBCC? If GPU memory bandwidth and latency are negatively affected by having to route memory access through the CPU's memory controller and the CPU-GPU interconnect, wouldn't it then make sense to use an HBCC with a common interface to both parts of the chip (such as IF)? Is the HBCC too power hungry or physically large to warrant use in an APU?

As for power, they were asked to run in the same envelope that Intel runs at. Bulldozer is incapable of scaling with power properly, and we were left with garbage. Keep in mind that the dGPU in question was weaker in every way compared to AMD's APU. Fewer cores, lower clock speed, half the memory bus, slower VRAM.

Yet it was 60+% faster than the APU. It really showed how badly AMD's Bulldozer gimped the iGPU's performance, and why OEMs just didn't bother with it. Bulldozer was a junk chip.

None of this was an issue with Llano.

Or any games that required decent memory bandwidth (see RTS games in particular).

And in any non-gaming task the Bulldozers got destroyed. And battery life was far worse.

Take a look at this: GTA 5, a game that is known not only to use a lot of CPU but also to blatantly favor Intel CPUs.

Yeah...

The GPUs AMD puts inside of their APUs are several orders of magnitude better. The gap in terms of GPU power is so big it didn't matter that they had inferior CPUs and memory bandwidth.

That being said, I expect the new APUs with Vega cores to bury Intel's iGPUs. Intel seriously needs to reconsider their strategy with these things. There is no point in dedicating so much die space on every single chip to something that is useless; just limit these things to basic display adapters.

Of course, what I want most of all is a 25-35W cTDP-up mode (or just high TDP SKUs) that favors the GPU explicitly.

Considering that Vega is a massive GPU and getting it integrated into a single package with Zen is not going to be a simple process, it may very well end up that AMD has to run the clocks on both CPU + GPU at really low numbers to make them not suffer a meltdown when run together. Then there's the massive unanswered question of how Vega will perform when it has to take the massive latency and bandwidth hit of going to shared system memory, as opposed to dedicated HBM2.

AMD has thrown a left hook at Intel with Zen; making Raven Ridge work would be the right hook that could potentially floor the giant. At the very least, Intel would have to do something drastic about its iGPU... I wonder if they're already talking to NVIDIA?

Simply put, I don't see how Intel can come up with competitive integrated graphics; they haven't been able to do it for ages. They most likely lack the know-how. GPU architects are very scarce and most end up being snatched by AMD and Nvidia anyway.

I mean, just look at this die shot of an i7 6700. That GPU occupies an insane amount of space, yet its performance is so bad in comparison. The efficiency in using die space for their GPUs is rock bottom compared to both AMD and Nvidia.

The only way Nvidia would sit at a table with Intel is with x86 licenses at the center.

I was more thinking a straight licensing situation, whereby Intel CPUs are allowed to integrate NVIDIA's GT 1030 GPU under the following conditions:

* NVIDIA won't allow the CPU-with-GT 1030-iGPU to go into production unless they are satisfied with the performance (in other words, they are sure that it won't damage their brand name)

* If the hybrid chip does enter production, Intel has to shutter their own integrated graphics division for good, to preclude any potential theft of NVIDIA's GT 1030 intellectual property

* Intel has to share all modifications/optimisations they make to GT 1030 to make it work as an iGPU

* Intel is not allowed to use or sell anything they learn from integrating GT 1030 into their CPU

* Intel has to put NVIDIA GeForce branding everywhere (website, CPU boxes, hell probably even a GeForce logo etched onto the heatspreader)

* And of course, Intel has to pay NVIDIA massive royalties for every GT 1030 iGPU they produce, which means that Intel will potentially have to take a loss on each CPU they sell just to be competitive on price

Unless AMD is feeling spectacularly suicidal and decides to license the Vega iGPU to their archrival, NVIDIA is literally the only option Intel's got. Which means that NVIDIA gets to dictate the terms of the agreement.

Instead of having a customer buy 1000 Intel CPU nodes they now buy just 100 because they can spend the rest of the money on Nvidia GPUs and have a computer cluster that is many times more powerful and efficient and cheaper.

That hurts Intel badly, trust me. They aren't happy about that synergy at all. This is why Nvidia won't license any of their GPU IP: it is the only thing that keeps them ahead by miles in these particular sectors.