Tuesday, October 10th 2017

AMD "Navi" GPU by Q3-2018: Report

AMD is reportedly accelerating the launch of its first GPU architecture built on the 7 nanometer process, codenamed "Navi." Graphics cards based on the first implementation of "Navi" could launch as early as Q3-2018 (between July and September). Besides IPC improvements in its core number-crunching machinery, "Navi" will introduce a slew of memory and GPU virtualization technologies.

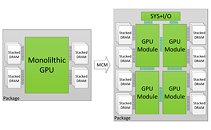

AMD will take its multi-chip module (MCM) approach of building high-performance GPUs a step further by placing multiple GPU dies, each with its HBM stacks, on a single package. The company could use Infinity Fabric as a high-bandwidth interconnect between the GPU dies (dubbed "GPU modules"), with an I/O controller die interfacing the MCM with the host machine. With multi-GPU on the decline in games, it remains to be seen how those multiple GPU modules will appear to the operating system. In the run-up to "Navi," AMD could give its current "Vega" architecture a refresh on a refined 14 nm+ process to increase clock speeds.

Source: TweakTown

74 Comments on AMD "Navi" GPU by Q3-2018: Report

When nothing has a set date, there is no set date, just an intent to release something at some point. But we knew that two years ago.

Fact remains, RTG has been underperforming like nobody's business so far. The GPU business did better when it still carried the AMD tag, and AMD has separated RTG from the core business because of risk concerns for its successful Zen product. Read between the lines; that'll be all, thanks :)

If AMD is really pushing Navi as the next best thing, this can really go in only one direction: pushing Nvidia harder on Volta, resulting in Volta single-die GPUs battling it out with MCM solutions from AMD. So basically, where AMD needs two, three or four dies, Nvidia bakes one to match its performance. I'm sure that'll help the margins a lot. AMD literally has one year now to refine GCN to a point where it remains viable WITHOUT resorting to MCM. If they don't, well, say hi to stagnation and price-inflated GPUs again.

Another vital issue arises: tremendous amounts of work for the driver and engineering teams, because MCM is never going to work the same as blunt ol' Crossfire. It'll talk over Infinity Fabric, and it will use VRAM differently. So by then AMD will have THREE radically different architectures in the marketplace to support one stack of products. Again, not the best example of efficiency.

It'll be another year of agony.

Have all of you forgotten how the news outlets spread the rumor about Vega launching last October?

AMD have said they hope to tape out Navi by the end of 2017, and products usually arrive about one year after that. It took almost 13 months from tape-out until Vega FE launched, and about 14 months for the consumer product. Vega was a fairly minimal design improvement over Fiji and was produced on a mature node. Navi will have more radical changes and be produced on a brand-new node. GloFo 7nm is not planned to enter volume production until H2 2018, and it takes another ~4 months to go from a wafer to a finished product.

Operating at such low voltages will not give you a stable product.

Simply because Coffee Lake is a better choice.

"12nm" GloFo is just another refresh of "14nm", similar to the TSMC "12nm" used by Nvidia for Volta.

So many people fall for this pathetic attempt of troll bait. Good to see some don't.

On topic: if they find a way to distribute the workload efficiently and, most importantly, make the GPU still appear to an API as a single pool of resources, it will be a home run, with or without high clocks or GloFo's questionable nodes.

And you would know because you've had it and tried it? I stress-tested for 8 hours and then gamed on it. It is stable, contrary to your uneducated opinion.

Source articles said. Not a story, nor did they include one. Wait, what happened to your exclusive?

The entire piece is speculation, and TPU bit on it for whatever reason. Then we get this drawn-out debate on something that's just speculative.

Sites win. Made you click and gain traffic...

Sorry for being so bad with the terms, but I think my point still stands. Radeon's chips, in particular Vega, are doing less work with more resources...

A stream processor was also an abstract name given to a class of processors that rely heavily on data-level parallelism.

Stop getting stuck in meaningless semantics.

You shouldn't be like that. It's a site where people talk. If somebody struggles, it's good to set them straight with information.

Even if the bridge lets software access independent modules, DX12 is already here for independent multi-GPU processing.

I have been building computers for people since the early 1990s. My first IBM-compatible was actually an AMD 386/40MHz, which was faster than the Intel 386/33MHz and $60 cheaper. Guess which one I bought? With few variations, it's been the same DAMNED STORY ever since. AMD gives you more for your money. Their CPUs, with only a few variations, are just as fast for all intents and purposes. When you need benchmark software to show you that you're hypothetically getting better performance with an Intel chip because you CAN'T FEEL IT yourself when you actually use the computer, that's telling you something you really need to listen to.

I also actually game, and I have a Ryzen 7 1700 OC'd to 3.8GHz and an R9 290 Radeon card, and they're both more than fast enough in every game I throw at them. I'm playing the very latest game, Wolfenstein II: The New Colossus, on this system, and it's buttery smooth. AMD CPU. AMD GPU. It's UTTER NONSENSE that you need Intel or an nVidia GPU to game really well. They charge more, but that's all. You're not getting anything for that price premium. End of story.

Intel has pushed 10nm to 2018. They said something they can't back up. It's official.

www.techpowerup.com/forums/threads/intel-10-nm-cpus-to-see-very-limited-initial-launch-in-2017.238307/

Limited production in 2017.

Notice that Radeon products have not won a generation on sheer power and performance since the 1000XT and 2000XT series of cards, and going back before that. This is because Radeon is no longer ATI but AMD, and AMD has a completely different marketing approach. ATI was a competitive enthusiast card maker back in the day, but with 3DFX around it was a tough battle. Nvidia, not terribly popular at the time, acquired 3DFX and its tech and ascended to glory. Still, ATI competed.

As far as CPUs go, AMD has never won on a power/performance level, but it has won CPU generations on price-to-performance, and has only just recently done so once again with its Ryzen line of CPUs, for the first time since the days of the Phenom. Threadripper, like the Phenom IIs, doesn't quite rival or beat Intel's upper end of CPUs (in this case the i9s), but gets us close at a better cost. This is why AMD is allowed to remain. They are not a threat to either Intel or Nvidia, but a pain in the a$$ to either company's midrange market, which is the majority. Once Threadripper is over and done with, people will be trying to buy them all up and run them for the next 8 years, just like the Phenom X6 1100T; after that, people will pour over to the next, more powerful Ryzen CPUs until those are gone, and everyone will speak of the Ryzen days, just as people do of the Phenom days. AMD will then fade into unpopularity and produce another memorable moment in another near-decade. They've done this ever since they've been around. AMD Athlon Thunderbird was a popular CPU in its time for its cost-to-performance as well as its unlocked nature (circa 2000), and AMD wouldn't repeat that popularity until the Phenoms roughly 8 years later...hmmm

AMD's GPU line now echoes its CPU market. AMD produces a kick-a$$ piece of silicon that nearly rivals what Intel and Nvidia are doing in their respective markets, but at a lower price point.