Sunday, December 3rd 2017

Onward to the Singularity: Google AI Develops Better Artificial Intelligences

The singularity concept isn't a simple one. It carries not only the idea of an Artificial Intelligence capable of constant self-improvement, but also the idea that the invention and deployment of this kind of AI will trigger ever-accelerating technological growth, so much so that humanity will see itself changed forever. Now, really, some pieces of technology have already changed the fabric of society. We've seen this happen with the Internet, which bridged gaps in time and space and ushered humanity towards frankly inspiring times of growth and development. Even smartphones, thanks to their adoption rates and capabilities, have transformed human interaction and the ways we connect with each other, even sparking some smartphone-related psychological conditions. But all of those will definitely, definitely pale in comparison to the changes that might follow the singularity.

The thing is, up to now, our (still tremendous) growth has been shackled by our own capabilities as a species. Our world is built on layer upon layer of work by brilliant minds, who developed the technological framework that our society is now interwoven with. This means that, as fast as development has been, it has still been held back by humanity's own capacity to evolve and to develop. Each development has come with an almost perfect understanding of what came before it. It's a cohesive whole, with each step provable and verifiable through the scientific method: a veritable case of "standing on the shoulders of giants". What happens, then, when we lose sight of the thought process behind developments, when the reasoning behind them is so intricate that we can't really understand it? What happens when we deploy technologies and programs that we don't really understand? Enter the singularity, an event we've stopped merely walking towards. It's more of a hurdle race now and, perhaps more worryingly, it's no longer fully controlled by humans.

To our forum-dwellers: this article is marked as an Editorial.

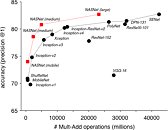

Google has been one of the companies at the forefront of AI development and research. This is much to the chagrin of AI realists Elon Musk and Stephen Hawking, who have been extremely vocal about the dangers they believe unchecked development in this field could bring to humanity. One of Google's star AI projects is AutoML, announced by the company in May 2017. Its purpose is to develop other, smaller-scale "child" AIs, with AutoML acting as their controller neural network. And that it did. On smaller benchmarks (like CIFAR-10 and Penn Treebank), Google engineers found that AutoML could develop AIs that performed on par with custom designs by AI experts. The next step was to see how AutoML's designs would fare on larger datasets. For that purpose, Google told AutoML to develop an AI specifically geared towards recognizing objects (people, cars, traffic lights, kites, backpacks) in live video. Google engineers named this AutoML brainchild NASNet, and it delivered better results than human-engineered image recognition software.

According to the researchers, NASNet was 82.7% accurate at predicting images, 1.2% better than any previously published result based on human-developed systems. In object detection, NASNet also beat the best published results by 4%, reaching a 43.1% mean Average Precision (mAP). Additionally, a less computationally demanding version of NASNet outperformed the best similarly-sized models for mobile platforms by 3.1%. This means that, on these benchmarks, an AI-designed system actually beat every human-developed one. Now, luckily, AutoML isn't self-aware. But this particular AI has increasingly been put to work improving its own code.

AIs are some of the most interesting developments of recent years. They have been the protagonists of countless stories in which humanity is demoted from the role of creator to that of mere resource.
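
To make the controller/child relationship described above a little more concrete, here is a toy sketch in Python. It is not Google's AutoML code. The search space, the `fake_child_accuracy` scoring function and the `Controller` class are all hypothetical stand-ins. Google's published work used a recurrent controller network trained with reinforcement learning. This sketch, by assumption, makes three simplifications:

- it replaces that recurrent network with one independent softmax per design decision;
- it uses the plain REINFORCE policy-gradient update;
- it skips the expensive step of actually training each child network.

```python
import math
import random

# Hypothetical search space: the controller picks one option per decision.
SEARCH_SPACE = {
    "filter_size": [1, 3, 5],
    "num_filters": [16, 32, 64],
    "skip_connection": [False, True],
}


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fake_child_accuracy(arch):
    """Hypothetical stand-in for training a child network and measuring
    its validation accuracy. It simply rewards filter_size=3,
    num_filters=64 and skip_connection=True; a real search would train
    and evaluate the child model here."""
    score = 0.5
    score += 0.2 if arch["filter_size"] == 3 else 0.0
    score += 0.2 if arch["num_filters"] == 64 else 0.0
    score += 0.1 if arch["skip_connection"] else 0.0
    return score


class Controller:
    """Toy controller: one independent softmax per decision (no RNN)."""

    def __init__(self, space, lr=0.1):
        self.space = space
        self.lr = lr
        self.logits = {name: [0.0] * len(opts) for name, opts in space.items()}

    def sample(self, rng):
        """Sample one option index per decision from the current policy."""
        choices = {}
        for name, opts in self.space.items():
            probs = softmax(self.logits[name])
            choices[name] = rng.choices(range(len(opts)), weights=probs)[0]
        return choices

    def update(self, choices, advantage):
        """REINFORCE step: the gradient of log softmax(logits)[chosen]
        with respect to logit j is 1[j == chosen] - p_j."""
        for name, idx in choices.items():
            probs = softmax(self.logits[name])
            for j, p in enumerate(probs):
                grad = (1.0 if j == idx else 0.0) - p
                self.logits[name][j] += self.lr * advantage * grad

    def best(self):
        """Return the most likely option for every decision."""
        return {
            name: opts[max(range(len(opts)), key=lambda j: self.logits[name][j])]
            for name, opts in self.space.items()
        }


def search(steps=2000, seed=0):
    """Sample child architectures, score them, and nudge the controller
    towards higher-scoring choices using a moving-average baseline."""
    rng = random.Random(seed)
    controller = Controller(SEARCH_SPACE)
    baseline = None
    for _ in range(steps):
        choices = controller.sample(rng)
        arch = {name: SEARCH_SPACE[name][idx] for name, idx in choices.items()}
        reward = fake_child_accuracy(arch)
        baseline = reward if baseline is None else 0.9 * baseline + 0.1 * reward
        controller.update(choices, reward - baseline)
    return controller.best()


if __name__ == "__main__":
    print(search())
```

Running it should steer the controller towards the options that the stand-in scoring function rewards. In a real architecture search, each reward would require training and evaluating a full child network, which is why such searches are so computationally expensive.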
While doomsday scenarios may still be far removed from the realm of possibility, they tend to grow more probable the more effort is put into developing AIs. Some research groups focus on the ethical boundaries of AI development. These include Google's own DeepMind and the Future of Life Institute, which counts Stephen Hawking, Elon Musk and Nick Bostrom, among other high-profile players in the AI space, on its scientific advisory board. The "Partnership on AI to benefit people and society" is another group worth mentioning. So is the Institute of Electrical and Electronics Engineers (IEEE), which has already proposed a set of ethical rules to be followed by AI.

Having these monumental developments occur so fast in the AI field is certainly inspiring when it comes to humanity's ingenuity; however, there must be some safety measures around them. For one, I wonder how fast these AI-fueled developments can go, and should go, when human scientists are finding it increasingly difficult to keep up with them and with what they entail. What happens when human engineers see that AI-developed code is better than theirs, but they don't really understand it? Should it be deployed? What happens after it's been integrated into our systems? It would certainly be hard for human scientists to revert changes and fix problems in code they didn't fully understand in the first place, wouldn't it?

And what about an acceleration of progress fueled by AIs, so fast and so great that humanity can't adapt to the changes it brings about? What happens when the fabric of society is so riddled with changes and developments that we can't really internalize them and adapt to how society should work? There have to be ethical and deployment boundaries, and progress will have to be kept in check. Progress for progress's sake would simply be self-destructive if the slower part of society, humans themselves, doesn't know how to adapt and isn't given time to. Even for most of us enthusiasts, how our CPUs and graphics cards work is already just a vague idea and an incomplete schematic in our minds. What, then, of systems conceived and designed by machines and bits of code: would we really understand them? I'd like to cite Arthur C. Clarke's third law here: "Any sufficiently advanced technology is indistinguishable from magic." Aren't AI-created AIs already blurring that line, and can we trust ourselves to understand everything that entails?

This article isn't meant to be a doomsday-scenario planner, or even a negative piece on AI. These are some of the most interesting times, and developments, that most of us have seen. The steps taken here may turn out to be some of the most far-reaching in our history, and future, as a species. The road from Homo sapiens to Homo Deus is rife with dangers, though. Debate and conscious thought about what these scenarios might entail can only better prepare us for whatever developments may occur. Follow the source links for various articles and takes on this issue; it really is a whole world out there.

Sources:

DeepMind, Futurism, The Guardian, The Ethics of Artificial Intelligence pdf - Nick Bostrom, Future of Life Institute, Google Blog: AutoML, IEEE @ TechRepublic

39 Comments on Onward to the Singularity: Google AI Develops Better Artificial Intelligences

As an AI skeptic (Skynet, anyone?), I believe the next few years could literally define &/or erase mankind from the history of this planet. There are legitimate concerns about fully aware & self-learning AI coming to power, should that ever happen in the future.

What is intriguing, however, is the level of trust humans put in tech like AI, & that trust seems to be growing, at least with the younger gen. The future course of humanity, & whether we could be reduced to a bookmark in the history books of this earth, not unlike the dinosaurs, depends on how much we give in to technology & how much we trust it. More importantly, will a true AI see us as competition or as something it can peacefully coexist with?

I agree AI is interesting in some ways, but in other ways it's frightening.

Especially when we could develop something capable of making decisions and things like that.

Sometimes I just think: what if the AI gets too far ahead, and we can't shut it down because it knows we want to shut it down and it refuses?

Current AI is still made up of nothing more than glorified recognition and classification algorithms. They are very advanced algorithms, but still far from anything that would be even remotely dangerous by itself.

Awareness and strong AI don't just pop out of nowhere as you keep building larger and larger neural networks. Neuroscientists have studied neurons and their associated structures for decades, and there is still no clue as to how these elements give rise to intelligence. So the idea that you can just scale up cognitive architectures until they suddenly become aware and intelligent seems to have no real basis, which suggests that the answer lies somewhere else.

And that answer might be that the solution lies in the abstraction you build on top of these models. That abstraction can't be created by mistake without you even knowing it and, most importantly, it isn't inherently guaranteed to emerge. There is no reason why it would be.

Facebook's AI accidentally created its own language - TNW
http://www.independent.co.uk/life-style/gadgets-and-tech/news/facebook-artificial-intelligence-ai-chatbot-new-language-research-openai-google-a7869706.html

What is general intelligence, according to you? Would learning to survive be a part of that? Do you think it can be taught, or even programmed?

---This message is brought to you by Skynet. Evolved future for mankind

No matter how intelligent an AI becomes it can't do anything that can't be stopped as long as it's kept isolated to a box with a power supply ;).

Sometimes you just have to take a leap of faith that AI will not turn out like it's portrayed in the movies. AI doesn't have to have the human equivalent of feelings. What AI might draw from as a "pure" source of information truly depends on who did the base coding. If the person was from the left side of the tracks and thinks in terms of evil, that prejudice would likely find its way in, and maybe then we could have a Skynet situation. OR, a person doing the coding from the right side of the tracks could envision space travel, and would more than likely try to get us off this planet and evolve us into a Star Trek/Stargate-type future.

No matter how hard we try to grip the reins on AI, there is a loophole begging to be found and used. The question I'd like to see fleshed out is this: if someone accidentally discovers that their particular AI code has become self-aware, is it still murder if they shut it down? I think this is what people are more afraid of: murdering a sentient being. And that's also probably why they would speak out against allowing AI to advance to begin with.

Me personally, I want off this planet, Scotty. Drop the reins and drop your trousers and see what happens.

www.sciencedaily.com/releases/2014/01/140116085105.htm

Oh no, a toaster that browns your toast less than you want because all heated food is mildly carcinogenic. Or the car that takes the route you didn't want because it saves fuel.

Or a war-bot that puts its guns down because it realises war is futile.

AI will not destroy us. We do that fine by ourselves.

/OT

AI does NOT exist right now, in any way, shape or form. It simply does not, no matter how much journalists or press releases might want to make it happen.

It does not think, it does not decide. It follows programming, making logical choices depending on various factors. But not for a single second does even the best implementation right now actually choose its answer.

It's literally a very, very advanced calculator, nothing more.

A lot of this discussion boils down to the mind-body problem and the hard problem of consciousness. Personally, I've thought about and studied both of these quite a bit, but I can't say I've come to a conclusion.

See also the Chinese room. Really there is a whole branch of philosophy dedicated to these questions.