Tuesday, October 16th 2018

NVIDIA Releases Comparison Benchmarks for DLSS-Accelerated 4K Rendering

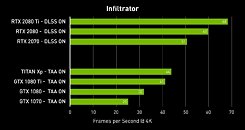

NVIDIA has released comparison benchmarks for its new AI-accelerated DLSS technology, one of the Turing architecture's claims to fame. Using the Infiltrator benchmark with its stunning real-time graphics, NVIDIA showcased the performance benefits of DLSS-enhanced 4K rendering over the usual 4K rendering + TAA (Temporal Anti-Aliasing). The test system used a Core i9-7900X 3.3 GHz CPU paired with 16 GB of Corsair DDR4 memory, Windows 10 (v1803) 64-bit, and version 416.25 of the NVIDIA drivers. On it, the company showed the sizable performance gains that come from pairing Turing's architectural strengths with DLSS, which puts the Tensor cores to work on more typical graphics processing workloads.

The results speak for themselves: with DLSS at 4K resolution, the upcoming NVIDIA RTX 2070 convincingly beats its previous-gen counterpart by doubling its performance. Under these particular conditions, the new king of the hill, the RTX 2080 Ti, convincingly beats the previous generation's halo product, the Titan Xp, with a 41% performance lead - but so does the new RTX 2070, which is being sold at half the asking price of the original Titan Xp.

Source: NVIDIA Blogs

43 Comments on NVIDIA Releases Comparison Benchmarks for DLSS-Accelerated 4K Rendering

SotTR devs didn't even have enough time to implement RT in all the scenes in the demo (game will/did launch without RT, to be added in a later patch).

But but but it's supposed to be so easy to implement ... :rolleyes:

And there are upcoming titles making use of DLSS, make no mistake about that: nvidianews.nvidia.com/news/nvidia-rtx-platform-brings-real-time-ray-tracing-and-ai-to-barrage-of-blockbuster-games

SotTR is one of them.

Already released titles won't do it because they've already got your money.

Mantle was in the exact same position and look how that turned out ...

We can talk all we want about upcoming titles that are supposed to hit the market with the tech enabled but, until they hit the market and we can actually find out if it's worth it from both the visual and the performance aspects, it's all talk.

nVidia has the advantage of being in a higher market position, when compared to AMD @ the time they were introducing Mantle, and that can have a leverage effect.

We shall see ...

I clearly remember the presentation and the difference could be described as "night and day".

If they made "only" very noticeable improvements, the performance hit wouldn't be as big and so even lower-end 2000 cards could work, meaning developers wouldn't just be catering to enthusiasts but to the mainstream as well, and would therefore be far more inclined to have the technology enabled in their games from the get-go, and even to patch already released games.

What we know of nVidia is that they don't sell for low profit: @ least not huge-die chips.

But it's true that they are most likely having yield issues, due to the die's size. That's the problem with huge dies: just ask Intel about it, regarding high end server chips.

For example, the 2080 has a die size of 545 mm². If we take the square root, it's just under 23.35 mm, and we'll round that to 23.4 mm per side for this example.

If we use the die per wafer calculator for this, and assume a low defect density for a mature process (which I'm not entirely sure of), we get:

Less than 60% yield rate, and that's before binning, because there are "normal" 2080s, FE 2080s and AIB 2080s. This adds to the cost as you said, but then there's "the nVidia tax" which inflates stuff even more.
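The estimate above can be roughly reproduced with textbook formulas. This is only a sketch: the comment does not say which calculator or inputs were used, so the 300 mm wafer and the defect density of 0.1 defects/cm² are assumptions, and the function names are made up for illustration.

```python
import math


def dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Gross dies per wafer: wafer area over die area, minus an edge-loss term."""
    radius = wafer_diameter_mm / 2
    gross = math.pi * radius ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return math.floor(gross - edge_loss)


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Poisson yield model: Y = exp(-A * D0), with A in cm^2."""
    area_cm2 = die_area_mm2 / 100
    return math.exp(-area_cm2 * defects_per_cm2)


def murphy_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Murphy yield model: Y = ((1 - exp(-A * D0)) / (A * D0))^2."""
    ad = die_area_mm2 / 100 * defects_per_cm2
    return ((1 - math.exp(-ad)) / ad) ** 2


if __name__ == "__main__":
    DIE_AREA_MM2 = 545        # die size quoted in the comment
    WAFER_DIAMETER_MM = 300   # assumption: standard 300 mm wafer
    DEFECT_DENSITY = 0.1      # assumption: defects per cm^2, "mature process"

    print("dies per wafer:", dies_per_wafer(WAFER_DIAMETER_MM, DIE_AREA_MM2))
    print("Poisson yield: ", round(poisson_yield(DIE_AREA_MM2, DEFECT_DENSITY), 3))
    print("Murphy yield:  ", round(murphy_yield(DIE_AREA_MM2, DEFECT_DENSITY), 3))
```

With these assumed inputs both yield models land a little under 60%, which is in line with the figure quoted above. A different defect density would move the result considerably, so treat it as an order-of-magnitude check, not a statement about actual foundry yields.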

You're not actually seeing images rendered @ 4K but rather @ a lower resolution, with all the enhancements applied @ that resolution via DLSS, and then upscaled to 4K: is this not the same as watching a full HD clip in fullscreen, but with a game instead of a video, minus the enhancements part?

Kudos to nVidia to have come up with a way to do it in real time, but it's still cheating, IMO.
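To put rough numbers on the "lower resolution, then upscaled" point, here is a small sketch of the pixel arithmetic. The internal render resolution is not stated anywhere in this thread, so the 2560x1440 figure below is purely an assumption for illustration, as are the helper names.

```python
def pixel_count(width: int, height: int) -> int:
    """Number of pixels in a frame of the given size."""
    return width * height


def shaded_fraction(internal: tuple[int, int], target: tuple[int, int]) -> float:
    """Fraction of the target frame's pixels that are actually rendered."""
    return pixel_count(*internal) / pixel_count(*target)


if __name__ == "__main__":
    TARGET_4K = (3840, 2160)
    ASSUMED_INTERNAL = (2560, 1440)  # assumption: not confirmed by the article
    FULL_HD = (1920, 1080)           # the "full HD clip" comparison from the comment

    print("assumed internal vs 4K:", shaded_fraction(ASSUMED_INTERNAL, TARGET_4K))
    print("full HD vs 4K:         ", shaded_fraction(FULL_HD, TARGET_4K))
```

Under that assumption fewer than half of the output pixels would be rendered natively, and a full HD source covers exactly a quarter of a 4K frame. That goes some way to explaining why the performance gap against native 4K + TAA can be so large.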

Game developers simply give the finished game to Nvidia, Nvidia runs it through their computer, and it's done.

Look it up, I tell the truth.

news.developer.nvidia.com/dlss-what-does-it-mean-for-game-developers/

Actually, what determines whether the IQ of DLSS is equivalent to X resolution? The user's eyes? A mathematical algo? The developer's graphics settings? NVIDIA's marketing team?