Tuesday, October 16th 2018

NVIDIA Releases Comparison Benchmarks for DLSS-Accelerated 4K Rendering

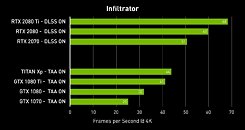

NVIDIA released comparison benchmarks for its new AI-accelerated DLSS (Deep Learning Super Sampling) technology, one of the main claims to fame of its new Turing architecture. Using the Infiltrator benchmark with its stunning real-time graphics, NVIDIA showcased the performance benefits of DLSS-improved 4K rendering over the usual 4K rendering + TAA (Temporal Anti-Aliasing). On a test system with an Intel Core i9-7900X 3.3 GHz CPU, 16 GB of Corsair DDR4 memory, Windows 10 (v1803) 64-bit, and version 416.25 of the NVIDIA drivers, the company showed the large performance gains that come from pairing Turing's architectural strengths with DLSS, which puts the Tensor cores to work on more typical graphics processing workloads.

The results speak for themselves: with DLSS at 4K resolution, the upcoming NVIDIA RTX 2070 convincingly beats its previous-generation counterpart, doubling its performance. Under these particular conditions, the new king of the hill, the RTX 2080 Ti, convincingly beats the previous generation's halo product, the Titan Xp, with a 41% performance lead; but the new RTX 2070 beats the Titan Xp too, while selling for half the Titan Xp's original asking price.

Source: NVIDIA Blogs

43 Comments on NVIDIA Releases Comparison Benchmarks for DLSS-Accelerated 4K Rendering

What would be interesting is a quality comparison using a capture of a very detailed scene, maybe something like a UHD or FUHD test pattern, one of the ones with lines and circles.

Of course, if DLSS were run on such patterns, it might just pull a full-quality copy out of the air and present it.

I have a bit of an issue with this "pre-trained" stuff. It means apps from, e.g., indie devs may never see it. Or will NVIDIA train anyone's app for free?

DLSS requires many man-hours for game developers to integrate into their games, and because the cards that can use it (2070 and up; the jury's still out on the 2060 and below) are so expensive, only enthusiasts are expected to buy them. Although many here at TPU are enthusiasts, the vast majority of gamers are not, which means a lot of effort for only a small percentage of the audience.

Personally, I seriously doubt ray tracing will gain traction with this generation of GPUs. Don't get me wrong: it's undoubtedly the future, but that future hasn't arrived yet, and how close it is remains to be seen.

And yes, I agree that not everything will switch to ray tracing all of a sudden. But this generation is the first that lets developers at least start assessing ray tracing. Whether it stands on its own or goes the way of the dodo (like Mantle before it), making way for a better implementation, doesn't concern me very much.

Agreed. I'd say nVidia would have a far better chance of making this succeed if they enabled ray tracing on all 2000-series cards. I don't see that happening, because even the 2080 Ti currently struggles with it, meaning the 2060 and below would have no chance.

The way I see it, nVidia is too ambitious, and the difference between RTX On and Off is far too great: it puts a serious dent in performance that not even the 2080 Ti can really make up for. I see this as a move to leave AMD's cards "in the dust" more than a drive to deliver better quality at higher resolutions. If the difference were much smaller, even lower-tier 2000-series cards could enable it, but potentially so could AMD's cards. To "move in for the kill", nVidia is trying to make sure AMD has no chance, which is why not even the 2080 Ti is enough.

I would not believe a word either of them say about anything.

They never roll out a bad driver.

When a new game launches (with or without Nvidia tech), older GPUs don't get the full optimization treatment the way current ones do.

We have seen multiple scenarios where old GPUs with more raw power get beaten by newer ones with less.

Temporal anti-aliasing (TAA) is the most popular AA method nowadays.

DLSS implementation is relatively easy and doesn't need that much effort.

Who needs washed-out, downscaled graphics, and with ray tracing on top of that?? lol, this generation is a flawed and foolish investment.

If you want to fault them, a better question would be: why bother with AA when your card can push 4k?

DLSS can only be implemented in games with TAA support, so how the heck can you compare DLSS with MSAA when your specific game/application doesn't even support it?

You can't make a proper apples-to-apples comparison between DLSS and MSAA, or any other AA method, when your specific game/application doesn't support both methods at the same time.

Heck, Shadow of the Tomb Raider doesn't have it, and it was one of the few games showcased with DLSS enabled during nVidia's CEO's presentation when the Turing cards were released. How long ago was that, exactly? If it were as easy as you claim, it would already be enabled, no?