Tuesday, June 4th 2019

AMD Announces the Radeon Pro Vega II and Pro Vega II Duo Graphics Cards

AMD today announced the Radeon Pro Vega II and Pro Vega II Duo graphics cards, which debut with the new Apple Mac Pro workstation. Based on an enhanced 32 GB variant of the 7 nm "Vega 20" MCM, the Radeon Pro Vega II maxes out its GPU silicon, with 4,096 stream processors, a 1.70 GHz peak engine clock, and 32 GB of HBM2 memory on a 4096-bit interface, delivering 1 TB/s of memory bandwidth. The card features both PCI-Express 3.0 x16 and Infinity Fabric interfaces. As its name suggests, the Pro Vega II is designed for professional workloads and comes with certifications for nearly all professional content-creation applications.
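The 1 TB/s figure follows from the width of the memory interface. As a rough sketch, peak bandwidth is the bus width in bytes multiplied by the per-pin data rate. The 2.0 Gbps per-pin HBM2 data rate used below is an assumption, not a figure from the announcement.

```python
# Sketch: peak memory bandwidth from bus width and per-pin data rate.
# ASSUMPTION: 2.0 Gbps per pin is not stated in the article; it is used for illustration.

def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps_per_pin: float) -> float:
    """Return peak memory bandwidth in GB/s (decimal gigabytes)."""
    bytes_per_transfer = bus_width_bits / 8  # 4096 bits -> 512 bytes per transfer
    return bytes_per_transfer * data_rate_gbps_per_pin

bw = peak_bandwidth_gbs(4096, 2.0)
print(f"{bw:.0f} GB/s")  # 1024 GB/s, i.e. roughly 1 TB/s
```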

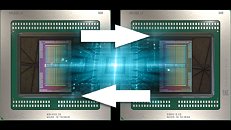

The Radeon Pro Vega II Duo is AMD's first dual-GPU graphics card in ages. Purpose-built for the Mac Pro (and available only on that Apple workstation), it puts two fully unlocked "Vega 20" MCMs, each with 32 GB of HBM2 memory, on a single PCB. A bridge chip connects the two GPUs to the system bus, and in addition an 84.5 GB/s Infinity Fabric link runs directly between the two GPUs, enabling fast memory access, GPU and memory virtualization, and interoperability between them without going through the host system bus. Besides certifications for every conceivable content-creation suite on macOS, AMD has heavily optimized the card for Apple's Metal graphics API. For now, both cards are available only as options for the Apple Mac Pro. The single-GPU Pro Vega II may become available as a standalone product later this year, but the Pro Vega II Duo will remain Mac Pro-exclusive.
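To put the 84.5 GB/s GPU-to-GPU link in perspective, it can be compared with a PCI-Express 3.0 x16 connection (8 GT/s per lane, 128b/130b encoding). This is a back-of-the-envelope sketch: the announcement does not say whether 84.5 GB/s is per direction or aggregate, so the code assumes per direction. If it is aggregate, the fair comparison is PCIe's roughly 31.5 GB/s bidirectional total, and the advantage shrinks to about 2.7x.

```python
# Sketch: compare the quoted Infinity Fabric link speed with PCIe 3.0 x16.
# ASSUMPTION: 84.5 GB/s is treated as a per-direction figure; the article does not say.

def pcie3_bandwidth_gbs(lanes: int) -> float:
    """Per-direction PCIe 3.0 bandwidth in GB/s: 8 GT/s per lane, 128b/130b encoding."""
    gt_per_s = 8.0
    encoding_efficiency = 128 / 130
    return gt_per_s * encoding_efficiency * lanes / 8  # convert bits to bytes

pcie = pcie3_bandwidth_gbs(16)  # about 15.75 GB/s per direction
if_link = 84.5                  # GB/s, figure quoted in the announcement
print(f"PCIe 3.0 x16: {pcie:.2f} GB/s per direction")
print(f"Infinity Fabric link: ~{if_link / pcie:.1f}x faster")
```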

41 Comments on AMD Announces the Radeon Pro Vega II and Pro Vega II Duo Graphics Cards

I assume this is only for the Mac, and we're not talking about a PC version of the same GPU?

www.amd.com/en/products/professional-graphics/radeon-pro-duo-polaris - April 2017

www.amd.com/en/products/professional-graphics/radeon-pro-v340 - August 2018

Up to 14 TFLOPS implies a boost clock of about 1700 MHz. Not bad at all.
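The arithmetic behind that estimate: peak FP32 throughput is usually quoted as stream processors × 2 FLOPs (one fused multiply-add) × clock. A minimal sketch using the 4,096 stream processors from the article:

```python
# Sketch: peak FP32 TFLOPS from shader count and clock, and the inverse.
# Assumes the usual convention of one FMA (2 FLOPs) per stream processor per clock.

def peak_fp32_tflops(stream_processors: int, clock_ghz: float) -> float:
    """Peak FP32 TFLOPS = SPs * 2 FLOPs * clock (GHz) / 1000."""
    return stream_processors * 2 * clock_ghz / 1000

def clock_for_tflops(stream_processors: int, tflops: float) -> float:
    """Clock in MHz needed to reach a given peak TFLOPS figure."""
    return tflops * 1e6 / (stream_processors * 2)

print(f"{peak_fp32_tflops(4096, 1.70):.2f} TFLOPS")  # 13.93
print(f"{clock_for_tflops(4096, 14.0):.0f} MHz")     # 1709
```

So a 1.70 GHz peak clock gives about 13.9 TFLOPS, which rounds to the advertised "up to 14 TFLOPS".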

But it might well be between cards as well.

www.techpowerup.com/gpu-specs/radeon-pro-duo.c2828

wccftech.com/amd-radeon-pro-duo-announced-worlds-fastest-graphics-card-16-teraflops-compute/

As for AMD doing away with CrossFire (CF), it seems to me that this card throws all of the industry norms out the window, so I wouldn't read too much into its design with regard to more consumer-oriented products.

Note the weird little "plastic" blocks up front too, labelled with an exclamation mark and 1-2, 3-4 and 5-8. They look suspiciously like something to do with power as well.

BTW, you can still game on that GPU, no problem.

They are definitely looking at it for gaming, though, so cards like these could give them some insight. Besides, we all know that when SLI/CrossFire works, it's an amazing thing.

www.pcgamesn.com/amd-navi-monolithic-gpu-design

SLI and CrossFire, sure. They don't scale to double the speed of a single card, but you can still see an improvement.