Monday, December 14th 2020

Cyberpunk 2077 Does Not Leverage SMT on AMD Ryzen, Lower Core-Count Variants take a Bigger Hit, Proof Included

Cyberpunk 2077 does not leverage simultaneous multi-threading (SMT) on AMD Ryzen processors, according to multiple technical reviews that tested the game with various processors. It does, however, leverage the analogous Hyper-Threading feature on rival Intel Core processors. This doesn't adversely affect the performance of higher core-count Ryzen chips such as the 16-core Ryzen 9 5950X, or to a lesser extent the 12-core 5900X, but lower core-count variants such as the 6-core Ryzen 5 5600X take a definite performance hit. PCGH reports that a Ryzen 5 5600X is now matched by a Core i5-10400F, because the game takes advantage of Hyper-Threading and uses all 12 logical processors on the Intel chip while ignoring SMT on the AMD one.

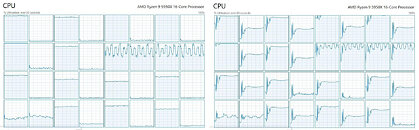

CD Projekt RED may have bigger problems on its hands than optimizing for a PC processor, such as the game being riddled with glaring performance issues on consoles; in the meantime, the PC enthusiast community has sprung into action with a fix. Authored by chaosxk on Reddit, it involves manually editing the game's executable with a hex editor, which tricks the game into using logical processors as cores. This fix has been found to improve frame rates on AMD Ryzen machines. Before attempting the hex edit, make sure you back up your original executables. The screenshot below provides a before-and-after view of Cyberpunk 2077 loading a Ryzen 9 5950X. You can learn more about this fix, and find a step-by-step guide, here.

Update 08:28 UTC: We have some technical details on what's happening.

A Reddit post by CookiePLMonster sheds light on what is possibly happening with the game. According to them, Cyberpunk 2077 reuses AMD GPUOpen pseudo-code to optimize its scheduler for the processor. That code was originally designed to let an application use more threads when an AMD "Bulldozer" processor is detected, but it has the opposite effect when a non-Bulldozer AMD processor is detected. The game checks for the "AuthenticAMD" vendor string and "family = 0x15" (AMD K15, i.e. Bulldozer or a derivative), and only then uses all "logical processors" (as Windows identifies them as part of its Bulldozer optimization). When any other AMD processor is detected, including a newer Ryzen one, the code makes the game's scheduler send work only to the physical cores and not to their logical processors.
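To make the described check concrete, here is a minimal Python sketch of the thread-count logic as CookiePLMonster's post and this article describe it. It is a paraphrase of that description, not the game's code or AMD's verbatim sample; the function name `default_thread_count` and its parameters are hypothetical, and it assumes the caller already knows the CPUID vendor string, the CPU family, and the physical-core and logical-processor counts that Windows reports.

```python
AMD_VENDOR = "AuthenticAMD"
BULLDOZER_FAMILY = 0x15  # AMD family 15h: "Bulldozer" and its derivatives


def default_thread_count(vendor: str, family: int, cores: int, logical: int) -> int:
    """Hypothetical paraphrase of the described check (not the game's actual code).

    vendor  -- CPUID vendor string, e.g. "AuthenticAMD" or "GenuineIntel"
    family  -- CPU family as decoded from CPUID
    cores   -- physical cores reported by Windows
    logical -- logical processors reported by Windows
    """
    # Non-AMD CPUs (e.g. Intel with Hyper-Threading) get every logical processor.
    count = logical
    if vendor == AMD_VENDOR:
        if family == BULLDOZER_FAMILY:
            count = logical  # Bulldozer family: use every logical processor
        else:
            count = cores  # any other AMD family, Ryzen included: physical cores only
    return count


def main() -> None:
    # Both six-core chips below expose 12 logical processors.
    ryzen_5600x = default_thread_count("AuthenticAMD", 0x19, cores=6, logical=12)  # Zen 3 is family 19h
    core_10400f = default_thread_count("GenuineIntel", 0x06, cores=6, logical=12)  # Intel Core is family 6
    print(f"Ryzen 5 5600X threads: {ryzen_5600x}")   # prints 6
    print(f"Core i5-10400F threads: {core_10400f}")  # prints 12


if __name__ == "__main__":
    main()
```

Under these assumptions, two six-core, 12-thread chips end up with 6 and 12 worker threads respectively, which lines up with PCGH's observation. Going by the article's description, the hex-edit fix amounts to making the AMD branch use `logical` as well.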

Our own W1zzard dug into the binaries to verify these claims; find a commented decompilation below. The game indeed uses this archaic GPUOpen code from 2017 to identify AMD processors, and this is responsible for its sub-optimal performance on AMD Ryzen processors. This clearly looks like a bug or oversight rather than something intentional.
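For background on why no Ryzen chip can pass that check: the "family" value is decoded from the EAX register returned by CPUID leaf 1. The base family sits in bits 8 to 11, and when it reads 0xF, an extended family field in bits 20 to 27 is added to it. The Python sketch below illustrates that documented encoding; it is not W1zzard's decompilation, and the helper names `cpuid_family` and `make_eax` are hypothetical.

```python
def cpuid_family(eax: int) -> int:
    """Decode the CPU family from CPUID leaf 1 EAX (hypothetical helper name)."""
    base_family = (eax >> 8) & 0xF    # bits 8-11
    ext_family = (eax >> 20) & 0xFF   # bits 20-27, only added when base_family == 0xF
    if base_family == 0xF:
        return base_family + ext_family
    return base_family


def make_eax(base_family: int, ext_family: int = 0) -> int:
    """Build a synthetic EAX holding only the family fields (model/stepping left at zero)."""
    return (ext_family << 20) | (base_family << 8)


if __name__ == "__main__":
    examples = {
        "Bulldozer family": make_eax(0xF, 0x6),
        "Zen / Zen+ / Zen 2": make_eax(0xF, 0x8),
        "Zen 3": make_eax(0xF, 0xA),
        "Intel Core": make_eax(0x6),
    }
    for name, eax in examples.items():
        family = cpuid_family(eax)
        print(f"{name}: family {family:#x}, matches 0x15 check: {family == 0x15}")
```

Only the Bulldozer-family value decodes to 0x15; Zen through Zen 2 decode to 0x17 and Zen 3 to 0x19, so a Ryzen processor always falls into the "physical cores only" branch of the check described above.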

Sources:

chaosxk (Reddit), BramblexD (Reddit), PCGH, VideoCardz, CookiePLMonster (Reddit)

84 Comments on Cyberpunk 2077 Does Not Leverage SMT on AMD Ryzen, Lower Core-Count Variants take a Bigger Hit, Proof Included

So IF they even focused on anything, they were doing it on the wrong things. Sorry, but I'm not buying that. Note the trend: this is not new to AMD; it happens every single time.

It's called negligence and/or lack of investment. And it's strange, because for Ryzen it somehow seemed they changed that to 'invested'... just not for gaming. All Zen-related fixes were gaming-related in a way; CCX latency, for example, is a design choice that is not gaming-oriented at all. There is a disconnect at AMD between the hardware they make, what target audiences are going to do with it, and how AMD can leverage that.

5 year analysis from Polish stock exchange (price in PLN):

You could probably match each drop in stock value with delay announcements.

Anyway, it's often said that nobody can consistently predict the stock market, and experts are wrong all the time too.

Ryzen is a success story, though; it seems like they knew what they were doing, even if they couldn't compete with Intel's IPC initially. Zen and Zen+ offered good productivity with more cores (which was also good for streaming, afaik) and a cheap option for budget gaming, and with Zen 2 and especially Zen 3 they caught up to Intel in games too.

Why would that bother you when the consumer copy doesn't have it?

I mean, I have no idea if it was not their focus, or if they just weren't able to compete on that front back then.

Maybe it was just a matter of priorities, and I can see no reason to fault them and call that a mistake, as they did get enough adopters to succeed.

Or maybe it really took those 3-4 years of R&D to match Intel at gaming despite their efforts.

Yeah, they keep having blunders, some of them pretty stupid, but I don't see any big failings (or too many smaller ones) with the Ryzen line.

The trick isn't placebo on an overclocked 1600X/1080 @ 1440p.

Currently I have a GTX 1080 with a G-SYNC 1080p monitor and won't even try it, and no regrets. I'm not interested in this game; I was going to try it, but after reading in the reviews how badly it performs, NO thanks...

Unlike Crysis: at the time I bought three 8800 GTXs in 3-way SLI just to play it, and I was happy because the game was 10 years ahead of its time.

No DXR for the Radeon 6000 series? Hold the phone, why wasn't it in there at the same time as NVIDIA's?

No SMT on Ryzen CPUs? Yeah, OK, it's an easy self-applied fix, but it should have been done by CDPR before release.

No complete key remapping. Did CDPR not learn from their mistakes? Same shit, different game (The Witcher 3, which was eventually fixed). Thank dog for the modding community, who've already mostly fixed it.

No QA testing, or if there was, it was very minimal, a.k.a. "does it run? Yes? Move on then."

Poor memory allocation: PCs have much better resources than consoles to offer the game engine, but no, let's just give them all the exact same amount. Also an easy self-fix.

Poor AI, no real interactivity outside of the mission you're on.

Bad cops that blip in and shoot you dead, or do naff all if you hide for 10 seconds.

Driving using KB is atrocious: it's either all or nothing, and the dumb AI NPCs walk out in front of you. LOL, bye mofo, squish.

BUGS up the wazoo

CTDs up the wazoo

This all leads to a very piss-poor excuse for an AAA game. The story is great and the city is astounding, but it's let down by game-breaking crap that shouldn't have happened in a supposedly "GOLD" release. I'm ashamed to say I spent $100 NZD on this game.

Remember this game is using the same base engine as The Witcher 3, which came out 5 years ago.

My question, though, is: does it really make a big enough difference to matter? It's my understanding that the GPU is the big thing holding people back in this game. The tests I see from other sites show a 3600X paired with a 3090. Yeah, there might be a noticeable difference there, but no one is pairing a 3090 with a 3600X.